上一节我们知道了SRP的作用和基本用法,现在来看一下我们的主角--URP是个怎样的管线把!

Unity2019.3及之后的版本才能看到URP这个package,前身是LWRP,可以创建默认管线项目然后手动导入,或者直接创建URP项目。导入之后我们开始分析URP的代码:

回到我们最开始学习SRP时的感觉,那时我们填充了一个Render函数后,写了几个简单的shader完成了我们想要的效果,现在我们重新来看URP中的Render函数是怎样的:

protected override void Render(ScriptableRenderContext renderContext, Camera[] cameras) { BeginFrameRendering(renderContext, cameras); GraphicsSettings.lightsUseLinearIntensity = (QualitySettings.activeColorSpace == ColorSpace.Linear); GraphicsSettings.useScriptableRenderPipelineBatching = asset.useSRPBatcher; SetupPerFrameShaderConstants(); SortCameras(cameras); foreach (Camera camera in cameras) { BeginCameraRendering(renderContext, camera); #if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER //It should be called before culling to prepare material. When there isn't any VisualEffect component, this method has no effect. VFX.VFXManager.PrepareCamera(camera); #endif RenderSingleCamera(renderContext, camera); EndCameraRendering(renderContext, camera); } EndFrameRendering(renderContext, cameras); }

刚看到代码的笔者我一脸懵逼,由于封装了很多方法,而且有些还看不到源码,所以一时间不知道某些方法都干了什么操作,但只能硬着头皮上啊,把能看到的好好解析一下,看能不能推断出隐藏的方法做了什么小操作。

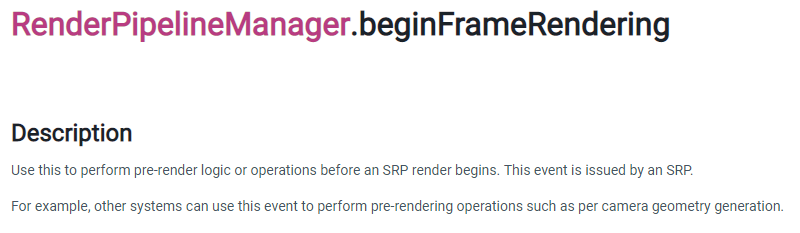

首先是BeginFrameRendering方法

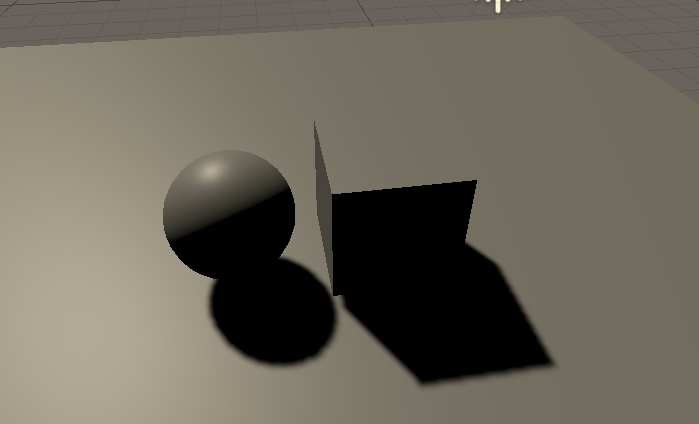

这是官方对于这个方法的描述,这几句话基本上等于没说,而且我们在做SRP的时候也发现了即便是没有这个方法也可以正常渲染,所以为了探究这个问题我查阅了许多资料,结果还是没找到结论,于是气急败坏的我直接把Begin和End两个方法注释掉,果然如我所料,没有对任何效果产生影响,如下图(注释后):

所以暂且先不探究这个方法做了什么,继续往下走:

GraphicsSettings.lightsUseLinearIntensity = (QualitySettings.activeColorSpace == ColorSpace.Linear);

GraphicsSettings.useScriptableRenderPipelineBatching = asset.useSRPBatcher;

这回是两个图形设置:第一个是线性空间的选择,第二个是是否使用SRPBatcher(这个之后我们会说)

基本上PBR都会用Linear空间,在此就不去详述了。

接下来是SetupPerFrameShaderConstants方法:

static void SetupPerFrameShaderConstants() { // When glossy reflections are OFF in the shader we set a constant color to use as indirect specular SphericalHarmonicsL2 ambientSH = RenderSettings.ambientProbe; Color linearGlossyEnvColor = new Color(ambientSH[0, 0], ambientSH[1, 0], ambientSH[2, 0]) * RenderSettings.reflectionIntensity; Color glossyEnvColor = CoreUtils.ConvertLinearToActiveColorSpace(linearGlossyEnvColor); Shader.SetGlobalVector(PerFrameBuffer._GlossyEnvironmentColor, glossyEnvColor); // Used when subtractive mode is selected Shader.SetGlobalVector(PerFrameBuffer._SubtractiveShadowColor, CoreUtils.ConvertSRGBToActiveColorSpace(RenderSettings.subtractiveShadowColor)); }

主要设置了未开启环境反射时的默认颜色、阴影颜色。我们之前的SRP里没有写。但是为了证实这个代码真实的在起作用,我还是调皮的把这个方法的修改了一下,将glossyEnvColor设为白色,将Enviorment Reflection宏关掉,然后有了以下对比图:

注释SetupPerFrameShaderConstants前:

注释后:

结果竟然完全一样,又是一脸懵逼!!

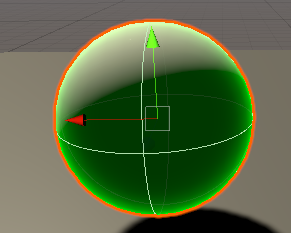

于是我把整个方法的内容都禁用掉,还是一样,这是,我有了一个猜想,就是只要设置一次_GlossyEnvironmentColor,这个颜色就会被缓存在某个地方,于是我把代码注释取消,将颜色改为绿色,然后在注释代码,之后果然是如下效果:

疑问解决了,接着往下走,调用了SortCameras方法,方法实现如下:

private void SortCameras(Camera[] cameras) { Array.Sort<Camera>(cameras, (Comparison<Camera>) ((lhs, rhs) => (int) ((double) lhs.depth - (double) rhs.depth))); }

可以看到相机排序主要是通过相机的深度进行的,这个影响者先渲染哪个相机,后渲染哪个相机,问题不大,继续前进!!

foreach (Camera camera in cameras) { BeginCameraRendering(renderContext, camera); #if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER //It should be called before culling to prepare material. When there isn't any VisualEffect component, this method has no effect. VFX.VFXManager.PrepareCamera(camera); #endif RenderSingleCamera(renderContext, camera); EndCameraRendering(renderContext, camera); }

根据相机排序好的顺序,逐相机渲染。我们可以看到主要有三个方法,VISUAL_EFFECT先忽略不计(手动滑稽),之后有时间专门讲一下。

首先看BeginCameraRendering和EndCameraRendering,好吧,看不到,官方是这样介绍的:

忽然觉得这个描述好像在哪里见过?这不就是上个描述的Copy+Paste吗,不过有些关键词还是引起了我的注意:planar reflections

于是猜测可能是做了一些off-screen的相关设置吧。但是为了确定其实际作用,还是老方法--注释!

然而发现注释前后都是可以渲染到纹理,如下:

既然不影响渲染,那么继续往下走,等影响到了在探究原因!

那么就到了最关键的RenderSingleCamera函数了,函数如下:

public static void RenderSingleCamera(ScriptableRenderContext context, Camera camera) { if (!camera.TryGetCullingParameters(IsStereoEnabled(camera), out var cullingParameters)) return; var settings = asset; UniversalAdditionalCameraData additionalCameraData = null; if (camera.cameraType == CameraType.Game || camera.cameraType == CameraType.VR) camera.gameObject.TryGetComponent(out additionalCameraData); InitializeCameraData(settings, camera, additionalCameraData, out var cameraData); SetupPerCameraShaderConstants(cameraData); ScriptableRenderer renderer = (additionalCameraData != null) ? additionalCameraData.scriptableRenderer : settings.scriptableRenderer; if (renderer == null) { Debug.LogWarning(string.Format("Trying to render {0} with an invalid renderer. Camera rendering will be skipped.", camera.name)); return; } string tag = (asset.debugLevel >= PipelineDebugLevel.Profiling) ? camera.name: k_RenderCameraTag; CommandBuffer cmd = CommandBufferPool.Get(tag); using (new ProfilingSample(cmd, tag)) { renderer.Clear(); renderer.SetupCullingParameters(ref cullingParameters, ref cameraData); context.ExecuteCommandBuffer(cmd); cmd.Clear(); #if UNITY_EDITOR // Emit scene view UI if (cameraData.isSceneViewCamera) ScriptableRenderContext.EmitWorldGeometryForSceneView(camera); #endif var cullResults = context.Cull(ref cullingParameters); InitializeRenderingData(settings, ref cameraData, ref cullResults, out var renderingData); renderer.Setup(context, ref renderingData); renderer.Execute(context, ref renderingData); } context.ExecuteCommandBuffer(cmd); CommandBufferPool.Release(cmd); context.Submit(); }

虽然看起来很多,但是实际上我们终于看到了熟悉的代码,在上篇SRP中我们用到了Culling方法,不着急,一点一点看:

首先通过TryGetCullingParameters方法获取相机视锥裁剪参数,然后获取asset中的设置,asset中的设置大致都在这个面板上:

额,图片传不上去,可能是因为超过上限?总之找到UniversalRP那个文件的Inspector就可以看见了。

URP中所有相机都挂着UniversalAdditionalCameraData脚本,这个脚本是覆写Camera的Inspector的数据,其实可以当作以前相机组件上的参数。

在InitializeCameraData中传入相机参数返回一个相机信息,我们看看这个方法的实现:

static void InitializeCameraData(UniversalRenderPipelineAsset settings, Camera camera, UniversalAdditionalCameraData additionalCameraData, out CameraData cameraData) { const float kRenderScaleThreshold = 0.05f; cameraData = new CameraData(); cameraData.camera = camera; cameraData.isStereoEnabled = IsStereoEnabled(camera); int msaaSamples = 1; if (camera.allowMSAA && settings.msaaSampleCount > 1) msaaSamples = (camera.targetTexture != null) ? camera.targetTexture.antiAliasing : settings.msaaSampleCount; cameraData.isSceneViewCamera = camera.cameraType == CameraType.SceneView; cameraData.isHdrEnabled = camera.allowHDR && settings.supportsHDR; Rect cameraRect = camera.rect; cameraData.isDefaultViewport = (!(Math.Abs(cameraRect.x) > 0.0f || Math.Abs(cameraRect.y) > 0.0f || Math.Abs(cameraRect.width) < 1.0f || Math.Abs(cameraRect.height) < 1.0f)); // If XR is enabled, use XR renderScale. // Discard variations lesser than kRenderScaleThreshold. // Scale is only enabled for gameview. float usedRenderScale = XRGraphics.enabled ? XRGraphics.eyeTextureResolutionScale : settings.renderScale; cameraData.renderScale = (Mathf.Abs(1.0f - usedRenderScale) < kRenderScaleThreshold) ? 1.0f : usedRenderScale; cameraData.renderScale = (camera.cameraType == CameraType.Game) ? cameraData.renderScale : 1.0f; bool anyShadowsEnabled = settings.supportsMainLightShadows || settings.supportsAdditionalLightShadows; cameraData.maxShadowDistance = Mathf.Min(settings.shadowDistance, camera.farClipPlane); cameraData.maxShadowDistance = (anyShadowsEnabled && cameraData.maxShadowDistance >= camera.nearClipPlane) ? cameraData.maxShadowDistance : 0.0f; if (additionalCameraData != null) { cameraData.maxShadowDistance = (additionalCameraData.renderShadows) ? cameraData.maxShadowDistance : 0.0f; cameraData.requiresDepthTexture = additionalCameraData.requiresDepthTexture; cameraData.requiresOpaqueTexture = additionalCameraData.requiresColorTexture; cameraData.volumeLayerMask = additionalCameraData.volumeLayerMask; cameraData.volumeTrigger = additionalCameraData.volumeTrigger == null ? camera.transform : additionalCameraData.volumeTrigger; cameraData.postProcessEnabled = additionalCameraData.renderPostProcessing; cameraData.isStopNaNEnabled = cameraData.postProcessEnabled && additionalCameraData.stopNaN && SystemInfo.graphicsShaderLevel >= 35; cameraData.isDitheringEnabled = cameraData.postProcessEnabled && additionalCameraData.dithering; cameraData.antialiasing = cameraData.postProcessEnabled ? additionalCameraData.antialiasing : AntialiasingMode.None; cameraData.antialiasingQuality = additionalCameraData.antialiasingQuality; } else if(camera.cameraType == CameraType.SceneView) { cameraData.requiresDepthTexture = settings.supportsCameraDepthTexture; cameraData.requiresOpaqueTexture = settings.supportsCameraOpaqueTexture; cameraData.volumeLayerMask = 1; // "Default" cameraData.volumeTrigger = null; cameraData.postProcessEnabled = CoreUtils.ArePostProcessesEnabled(camera); cameraData.isStopNaNEnabled = false; cameraData.isDitheringEnabled = false; cameraData.antialiasing = AntialiasingMode.None; cameraData.antialiasingQuality = AntialiasingQuality.High; } else { cameraData.requiresDepthTexture = settings.supportsCameraDepthTexture; cameraData.requiresOpaqueTexture = settings.supportsCameraOpaqueTexture; cameraData.volumeLayerMask = 1; // "Default" cameraData.volumeTrigger = null; cameraData.postProcessEnabled = false; cameraData.isStopNaNEnabled = false; cameraData.isDitheringEnabled = false; cameraData.antialiasing = AntialiasingMode.None; cameraData.antialiasingQuality = AntialiasingQuality.High; } // Disables post if GLes2 cameraData.postProcessEnabled &= SystemInfo.graphicsDeviceType != GraphicsDeviceType.OpenGLES2; cameraData.requiresDepthTexture |= cameraData.isSceneViewCamera || cameraData.postProcessEnabled; var commonOpaqueFlags = SortingCriteria.CommonOpaque; var noFrontToBackOpaqueFlags = SortingCriteria.SortingLayer | SortingCriteria.RenderQueue | SortingCriteria.OptimizeStateChanges | SortingCriteria.CanvasOrder; bool hasHSRGPU = SystemInfo.hasHiddenSurfaceRemovalOnGPU; bool canSkipFrontToBackSorting = (camera.opaqueSortMode == OpaqueSortMode.Default && hasHSRGPU) || camera.opaqueSortMode == OpaqueSortMode.NoDistanceSort; cameraData.defaultOpaqueSortFlags = canSkipFrontToBackSorting ? noFrontToBackOpaqueFlags : commonOpaqueFlags; cameraData.captureActions = CameraCaptureBridge.GetCaptureActions(camera); bool needsAlphaChannel = camera.targetTexture == null && Graphics.preserveFramebufferAlpha && PlatformNeedsToKillAlpha(); cameraData.cameraTargetDescriptor = CreateRenderTextureDescriptor(camera, cameraData.renderScale, cameraData.isStereoEnabled, cameraData.isHdrEnabled, msaaSamples, needsAlphaChannel); }

又是好大一坨,不着急,慢慢欣赏代码艺术。

好吧,没什么好欣赏的,都是一些基本参数的赋值,在其中其实我们可以看到一些效果的使用前提:如Postprocess需要OpenGLES2以上等

接下来就是SetupPerCameraShaderConstants方法:

static void SetupPerCameraShaderConstants(CameraData cameraData) { Camera camera = cameraData.camera; float scaledCameraWidth = (float)cameraData.camera.pixelWidth * cameraData.renderScale; float scaledCameraHeight = (float)cameraData.camera.pixelHeight * cameraData.renderScale; Shader.SetGlobalVector(PerCameraBuffer._ScaledScreenParams, new Vector4(scaledCameraWidth, scaledCameraHeight, 1.0f + 1.0f / scaledCameraWidth, 1.0f + 1.0f / scaledCameraHeight)); Shader.SetGlobalVector(PerCameraBuffer._WorldSpaceCameraPos, camera.transform.position); float cameraWidth = (float)cameraData.camera.pixelWidth; float cameraHeight = (float)cameraData.camera.pixelHeight; Shader.SetGlobalVector(PerCameraBuffer._ScreenParams, new Vector4(cameraWidth, cameraHeight, 1.0f + 1.0f / cameraWidth, 1.0f + 1.0f / cameraHeight)); Matrix4x4 projMatrix = GL.GetGPUProjectionMatrix(camera.projectionMatrix, false); Matrix4x4 viewMatrix = camera.worldToCameraMatrix; Matrix4x4 viewProjMatrix = projMatrix * viewMatrix; Matrix4x4 invViewProjMatrix = Matrix4x4.Inverse(viewProjMatrix); Shader.SetGlobalMatrix(PerCameraBuffer._InvCameraViewProj, invViewProjMatrix); }

主要是对四个Shader全局变量的赋值:_ScaledScreenParams、_WorldSpaceCameraPos、_ScreenParams、_InvCameraViewProj

具体用来做什么之后我们讲解URP的Shader时会讲到(不过看字面意思也知道时干啥的~啊哈哈哈)

接下来就是重点了,URP将渲染方案封装在ForwardRenderer中,由于篇幅原因,下节我们来学习一下ForwardRenderer!!