Deleting a Node

Delete a node with the following command, where `<nodeName>` is the name of the node to be deleted.

```shell
./kk delete node <nodeName> -f config-sample.yaml
```
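Since the command is given the cluster config file with `-f`, a quick sanity check is to confirm that the node name is actually listed under `spec.hosts` before deleting anything. The sketch below is a minimal bash example, assuming host entries use the single-line `- {name: ..., ...}` form seen in `config-sample.yaml`; `node_in_config` and `delete_node` are hypothetical helper names, not part of kk.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of kk): check that a node is listed in the
# KubeKey config file before asking kk to delete it.

# Succeeds if the config has a host entry of the form "- {name: <node>, ...}".
# The trailing comma keeps "node8" from matching "node80".
node_in_config() {
  local node="$1" config="$2"
  grep -Eq "^[[:space:]]*- \{name: ${node}," "$config"
}

# Runs "kk delete node" only when the node is present in the config file.
delete_node() {
  local node="$1" config="${2:-config-sample.yaml}"
  if ! node_in_config "$node" "$config"; then
    echo "node ${node} is not listed in ${config}" >&2
    return 1
  fi
  ./kk delete node "$node" -f "$config"
}
```

Usage, from the directory containing `kk` and run as root: `delete_node node8 config-sample.yaml`. kk still asks for the yes/no confirmation itself.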

In my case, node8 needs to be deleted.
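Before deleting node8, it can be worth checking which Pods are still scheduled on it, because deleting a node drains it and evicts those Pods (DaemonSet-managed Pods excepted). A minimal sketch using kubectl's `--field-selector spec.nodeName=...` filter; `pods_on_node` is a hypothetical helper, and `KUBECTL` is an assumed override variable so the command can be stubbed out.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list every Pod currently scheduled on a node.
# KUBECTL can be overridden (e.g. for testing); it defaults to kubectl.
pods_on_node() {
  local node="$1"
  local kubectl="${KUBECTL:-kubectl}"
  "$kubectl" get pods --all-namespaces -o wide \
    --field-selector "spec.nodeName=${node}"
}
```

Usage: `pods_on_node node8`.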

```console
[root@master1 ~]# sudo ./kk delete node node8 -f config-sample.yaml
Are you sure to delete this node? [yes/no]: yes
INFO[16:56:39 CST] Resetting kubernetes node ...
[master1 192.168.131.60] MSG:
node1 node10 node11 node12 node13 node14 node15 node16 node17 node18 node19 node2 node20 node21 node22 node23 node24 node25 node26 node27 node28 node3 node4 node5 node6 node7 node8 node9
[master1 192.168.131.60] MSG:
node/node8 already cordoned
WARNING: ignoring DaemonSet-managed Pods: kube-system/calico-node-lgp6x, kube-system/kube-proxy-tncvq, kube-system/nodelocaldns-5ngfw, kube-system/openebs-ndm-vfsx9, kubesphere-logging-system/fluent-bit-rljhx, kubesphere-monitoring-system/node-exporter-x7mjk
node/node8 drained
[master1 192.168.131.60] MSG:
node "node8" deleted
INFO[16:56:41 CST] Successful.
```
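The log shows the API-side steps kk went through: node8 was cordoned (it reports the node was already cordoned), drained while skipping DaemonSet-managed Pods, and then the Node object was deleted. For comparison, roughly the same steps done by hand with kubectl are sketched below. This is an approximation, not kk's actual implementation, and it does not cover the "Resetting kubernetes node" step kk performs on the host itself. `remove_node_manually` and the `KUBECTL` override are hypothetical; `--delete-local-data` is the drain flag name in kubectl v1.18 (the version used by this cluster), renamed `--delete-emptydir-data` in later releases.

```shell
#!/usr/bin/env bash
# Hypothetical helper approximating the API-side steps seen in the kk log.
# It does NOT reset Kubernetes on the removed host.
remove_node_manually() {
  local node="$1"
  local kubectl="${KUBECTL:-kubectl}"
  # 1. Mark the node unschedulable.
  "$kubectl" cordon "$node" || return 1
  # 2. Evict Pods; DaemonSet Pods are skipped and emptyDir data is discarded.
  "$kubectl" drain "$node" --ignore-daemonsets --delete-local-data || return 1
  # 3. Remove the Node object from the cluster.
  "$kubectl" delete node "$node"
}
```

Each step stops the sequence on failure, so a drain that cannot evict a Pod leaves the Node object in place rather than deleting it with workloads still running.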

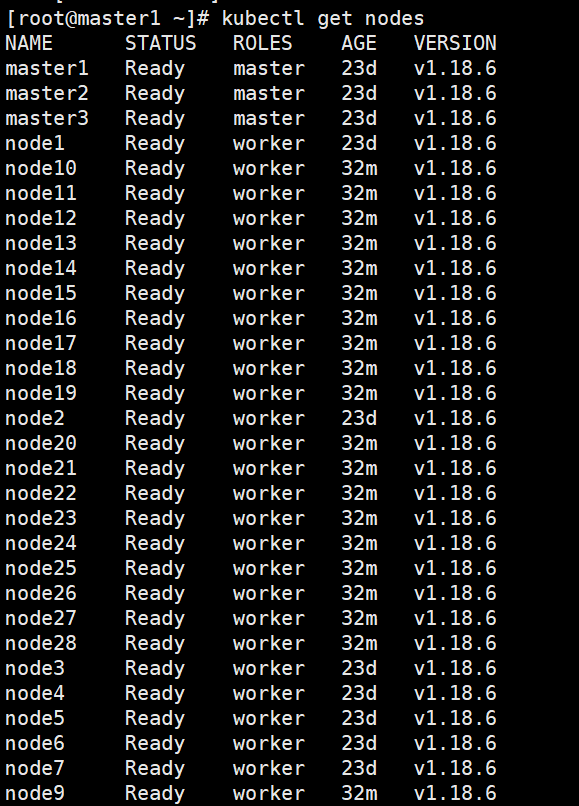

Listing the cluster's nodes now shows that node8 is gone, and its entry has also been removed from the cluster configuration file config-sample.yaml.
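Both checks can be scripted. In the minimal sketch below, `kubectl get node <name>` exits non-zero once the Node object no longer exists, and grep confirms the host entry is gone from the config file. `verify_node_removed` and the `KUBECTL` override are hypothetical; note that `kubectl get` also fails when the API server is unreachable, so a "removed" result assumes the cluster is reachable.

```shell
#!/usr/bin/env bash
# Hypothetical helper: confirm a node is gone from both the cluster and the
# KubeKey config file. KUBECTL may be overridden; it defaults to kubectl.
verify_node_removed() {
  local node="$1" config="$2"
  local kubectl="${KUBECTL:-kubectl}"
  # kubectl get node exits non-zero when the node does not exist
  # (or when the API server cannot be reached).
  if "$kubectl" get node "$node" >/dev/null 2>&1; then
    echo "${node} is still registered in the cluster" >&2
    return 1
  fi
  # Host entries look like "- {name: node8, address: ...}".
  if grep -Eq "\{name: ${node}," "$config"; then
    echo "${node} is still listed in ${config}" >&2
    return 1
  fi
  echo "${node} removed"
}
```

Usage: `verify_node_removed node8 config-sample.yaml`.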

`config-sample.yaml` after the deletion:

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha1
kind: Cluster
metadata:
  name: pansoft-pre
spec:
  hosts:
  - {name: master1, address: 192.168.131.60, internalAddress: 192.168.131.60, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: master2, address: 192.168.131.61, internalAddress: 192.168.131.61, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: master3, address: 192.168.131.62, internalAddress: 192.168.131.62, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node1, address: 192.168.131.63, internalAddress: 192.168.131.63, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node2, address: 192.168.131.64, internalAddress: 192.168.131.64, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node3, address: 192.168.131.65, internalAddress: 192.168.131.65, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node4, address: 192.168.131.66, internalAddress: 192.168.131.66, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node5, address: 192.168.131.67, internalAddress: 192.168.131.67, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node6, address: 192.168.131.68, internalAddress: 192.168.131.68, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node7, address: 192.168.131.69, internalAddress: 192.168.131.69, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node9, address: 192.168.131.91, internalAddress: 192.168.131.91, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node10, address: 192.168.131.92, internalAddress: 192.168.131.92, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node11, address: 192.168.131.93, internalAddress: 192.168.131.93, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node12, address: 192.168.131.94, internalAddress: 192.168.131.94, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node13, address: 192.168.131.95, internalAddress: 192.168.131.95, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node14, address: 192.168.131.96, internalAddress: 192.168.131.96, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node15, address: 192.168.131.97, internalAddress: 192.168.131.97, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node16, address: 192.168.131.98, internalAddress: 192.168.131.98, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node17, address: 192.168.131.99, internalAddress: 192.168.131.99, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node18, address: 192.168.131.100, internalAddress: 192.168.131.100, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node19, address: 192.168.131.101, internalAddress: 192.168.131.101, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node20, address: 192.168.131.102, internalAddress: 192.168.131.102, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node21, address: 192.168.131.103, internalAddress: 192.168.131.103, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node22, address: 192.168.131.104, internalAddress: 192.168.131.104, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node23, address: 192.168.131.105, internalAddress: 192.168.131.105, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node24, address: 192.168.131.106, internalAddress: 192.168.131.106, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node25, address: 192.168.131.107, internalAddress: 192.168.131.107, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node26, address: 192.168.131.108, internalAddress: 192.168.131.108, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node27, address: 192.168.131.109, internalAddress: 192.168.131.109, privateKeyPath: "~/.ssh/id_rsa"}
  - {name: node28, address: 192.168.131.110, internalAddress: 192.168.131.110, privateKeyPath: "~/.ssh/id_rsa"}
  roleGroups:
    etcd:
    - master1
    - master2
    - master3
    master:
    - master1
    - master2
    - master3
    worker:
    - node[9:28]
    - node[1:7]
  controlPlaneEndpoint:
    domain: lb.kubesphere.local
    # vip
    address: "192.168.131.60"
    port: "16443"
  kubernetes:
    version: v1.18.6
    imageRepo: kubesphere
    clusterName: cluster.local
    masqueradeAll: false # masqueradeAll tells kube-proxy to SNAT everything if using the pure iptables proxy mode. [Default: false]
    maxPods: 110 # maxPods is the number of pods that can run on this Kubelet. [Default: 110]
    nodeCidrMaskSize: 24 # internal network node size allocation. This is the size allocated to each node on your network. [Default: 24]
    proxyMode: ipvs # mode specifies which proxy mode to use. [Default: ipvs]
  network:
    plugin: calico
    calico:
      ipipMode: Always # IPIP Mode to use for the IPv4 POOL created at start up. If set to a value other than Never, vxlanMode should be set to "Never". [Always | CrossSubnet | Never] [Default: Always]
      vxlanMode: Never # VXLAN Mode to use for the IPv4 POOL created at start up. If set to a value other than Never, ipipMode should be set to "Never". [Always | CrossSubnet | Never] [Default: Never]
      vethMTU: 1440 # The maximum transmission unit (MTU) setting determines the largest packet size that can be transmitted through your network. [Default: 1440]
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
  registry:
    registryMirrors: []
    insecureRegistries: []
    privateRegistry: ""
  storage:
    defaultStorageClass: localVolume
    localVolume:
      storageClassName: local

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.0.0
spec:
  local_registry: ""
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  etcd:
    monitoring: true # Whether to install etcd monitoring dashboard
    endpointIps: 192.168.131.60,192.168.131.61,192.168.131.62 # etcd cluster endpointIps
    port: 2379 # etcd port
    tlsEnable: true
  common:
    mysqlVolumeSize: 20Gi # MySQL PVC size
    minioVolumeSize: 20Gi # Minio PVC size
    etcdVolumeSize: 20Gi # etcd PVC size
    openldapVolumeSize: 2Gi # openldap PVC size
    redisVolumSize: 2Gi # Redis PVC size
    es: # Storage backend for logging, tracing, events and auditing.
      elasticsearchMasterReplicas: 1 # total number of master nodes, it's not allowed to use even number
      elasticsearchDataReplicas: 1 # total number of data nodes
      elasticsearchMasterVolumeSize: 4Gi # Volume size of Elasticsearch master nodes
      elasticsearchDataVolumeSize: 20Gi # Volume size of Elasticsearch data nodes
      logMaxAge: 7 # Log retention time in built-in Elasticsearch, it is 7 days by default.
      elkPrefix: logstash # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log
      # externalElasticsearchUrl:
      # externalElasticsearchPort:
  console:
    enableMultiLogin: true # enable/disable multiple sign-on; it allows an account to be used by different users at the same time.
    port: 30880
  alerting: # Whether to install KubeSphere alerting system. It enables users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.
    enabled: true
  auditing: # Whether to install KubeSphere audit log system. It provides a security-relevant chronological set of records, recording the sequence of activities that happened in the platform, initiated by different tenants.
    enabled: true
  devops: # Whether to install KubeSphere DevOps System. It provides out-of-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image
    enabled: true
    jenkinsMemoryLim: 2Gi # Jenkins memory limit
    jenkinsMemoryReq: 1500Mi # Jenkins memory request
    jenkinsVolumeSize: 8Gi # Jenkins volume size
    jenkinsJavaOpts_Xms: 512m # The following three fields are JVM parameters
    jenkinsJavaOpts_Xmx: 512m
    jenkinsJavaOpts_MaxRAM: 2g
  events: # Whether to install KubeSphere events system. It provides a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.
    enabled: true
  logging: # Whether to install KubeSphere logging system. Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.
    enabled: true
    logsidecarReplicas: 2
  metrics_server: # Whether to install metrics-server. It enables HPA (Horizontal Pod Autoscaler).
    enabled: true
  monitoring:
    prometheusReplicas: 1 # Prometheus replicas are responsible for monitoring different segments of data source and provide high availability as well.
    prometheusMemoryRequest: 400Mi # Prometheus request memory
    prometheusVolumeSize: 20Gi # Prometheus PVC size
    alertmanagerReplicas: 1 # AlertManager Replicas
  multicluster:
    clusterRole: host # host | member | none # You can install a solo cluster, or specify it as the role of host or member cluster
  networkpolicy: # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).
    enabled: true
  notification: # It supports notification management in multi-tenant Kubernetes clusters. It allows you to set AlertManager as its sender, and receivers include Email, Wechat Work, and Slack.
    enabled: true
  openpitrix: # Whether to install KubeSphere Application Store. It provides an application store for Helm-based applications, and offers application lifecycle management
    enabled: true
  servicemesh: # Whether to install KubeSphere Service Mesh (Istio-based). It provides fine-grained traffic management, observability and tracing, and offers visualization for traffic topology
    enabled: true
```
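Note that the worker group is written in KubeKey's range shorthand: `node[9:28]` and `node[1:7]` together cover node1 to node7 and node9 to node28, i.e. every worker host except node8. The sketch below shows how such a pattern expands, assuming both bounds are inclusive (so `node[9:28]` means node9 through node28). `expand_host_range` is a hypothetical helper for illustration, not kk's own parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: expand a "prefix[start:end]" host pattern into one
# name per line, treating both bounds as inclusive. Other names pass through.
expand_host_range() {
  local pattern="$1"
  local re='^(.*)\[([0-9]+):([0-9]+)]$'
  if [[ $pattern =~ $re ]]; then
    local prefix="${BASH_REMATCH[1]}"
    local start="${BASH_REMATCH[2]}" end="${BASH_REMATCH[3]}"
    local i
    # 10# forces base 10 so bounds such as "08" are not read as octal.
    for ((i = 10#$start; i <= 10#$end; i++)); do
      printf '%s%d\n' "$prefix" "$i"
    done
  else
    printf '%s\n' "$pattern"
  fi
}
```

Usage: `for p in 'node[9:28]' 'node[1:7]'; do expand_host_range "$p"; done` lists the 27 worker names, none of which is node8.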