作业一:

代码展示:

import requests

from bs4 import BeautifulSoup

import re,os

import threading

import pymysql

import urllib

class MySpider:

def startUp(self,url):

headers = {

'Cookie': 'll="118200"; bid=6RFUdwTYOEU; _vwo_uuid_v2=D7971B6FDCF69217A8423EFCC2A21955D|41eb25e765bdf98853fd557b53016cd5; __gads=ID=9a583143d12c55e0-22dbef27e3c400c8:T=1606284964:RT=1606284964:S=ALNI_MYBPSHfsIfrvOZ_oltRmjCgkRpjRg; __utmc=30149280; ap_v=0,6.0; dbcl2="227293793:AVawqnPg0jI"; ck=SAKz; push_noty_num=0; push_doumail_num=0; __utma=30149280.2093786334.1603594037.1606300411.1606306536.8; __utmz=30149280.1606306536.8.5.utmcsr=accounts.douban.com|utmccn=(referral)|utmcmd=referral|utmcct=/; __utmt=1; __utmv=30149280.22729; __utmb=30149280.2.10.1606306536',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36'

}

self.open = False

try:

self.con = pymysql.connect(host='localhost',port=3306,user='root',passwd='hadoop',database='mydb',charset='utf8')

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.open = True

try:

self.cursor.execute("drop table if exists movies")

except Exception as err:

# print(err)

pass

try:

sql = """create table movies(

ranka varchar(32),

movie_name varchar(32),

direct varchar(64),

main_act varchar(128),

show_time varchar(64),

country varchar(128),

movie_type varchar(64),

score varchar(32),

count varchar(32),

quote varchar(128),

path varchar(64)

)character set =utf8

"""

self.cursor.execute(sql)

print("表格创建成功")

except Exception as err:

print(err)

print("表格创建失败")

except Exception as err:

print(err)

self.no = 0

# self.page = 0

self.Threads = []

# page_text = requests.get(url=url,headers=headers).text

# soup = BeautifulSoup(page_text,'lxml')

# print(soup)

# li_list = soup.select("ol[class='grid_view'] li"c)

urls = []

for i in range(10):

url = 'https://movie.douban.com/top250?start=' + str(i*25) + '&filter='

print(url)

page_text = requests.get(url=url,headers=headers).text

soup = BeautifulSoup(page_text,'lxml')

# print(soup)

li_list = soup.select("ol[class='grid_view'] li")

print(len(li_list))

for li in li_list:

ranka = li.select("div[class='item'] div em")[0].text

movie_name = li.select("div[class='info'] div a span[class='title']")[0].text

print(movie_name)

dir_act = li.select("div[class='info'] div[class='bd'] p")[0].text

dir_act = ' '.join(dir_act.split())

try:

direct = re.search(':.*:',dir_act).group()[1:-3]

except:

direct = "奥利维·那卡什 Olivier Nakache / 艾力克·托兰达 Eric Toledano "

# print(direct)

# print(dir_act)

s = dir_act.split(':')

# print(s)

try:

main_act = re.search(r'(D)*',s[2]).group()

except:

main_act = "..."

# print(main_act)

pattern = re.compile('d+',re.S)

show_time = pattern.search(dir_act).group()

# print(show_time)

countryAndmovie_type = dir_act.split('/')

country = countryAndmovie_type[-2]

movie_type = countryAndmovie_type[-1]

score = li.select("div[class='info'] div[class='star'] span")[1].text

# print(score)

count = re.match(r'd+',li.select("div[class='info'] div[class='star'] span")[3].text).group()

# print(score,count,quote)

img_name = li.select("div[class='item'] div a img")[0]["alt"]

try:

quote = li.select("div[class='info'] p[class='quote'] span")[0].text

except:

quote = ""

# print(img_name)

img_src = li.select("div[class='item'] div a img[src]")[0]["src"]

path = 'movie_img\' + img_name + '.jpg'

# print(img_name,img_src,path)

print(ranka, '2', movie_name, '3', direct, '4', main_act, '5', show_time, '6', country, '7', movie_type, '8', score, '9', count, '10', quote, '11', path)

try:

self.insertdb(ranka,movie_name,direct,main_act,show_time,country,movie_type,score,count,quote,path)

self.no += 1

except Exception as err:

# print(err)

print("数据插入失败")

if url not in urls:

T = threading.Thread(target=self.download,args=(img_name,img_src))

T.setDaemon(False)

T.start()

self.Threads.append(T)

# print(len(li_list))

def download(self,img_name,img_src):

dir_path = 'movie_img'

if not os.path.exists(dir_path):

os.mkdir(dir_path)

# for img in os.listdir(movie_img):

# os.remove(os.path.join(movie_img,img))

file_path = dir_path + '/' + img_name + '.jpg'

with open(file_path,'wb') as fp:

data = urllib.request.urlopen(img_src)

data = data.read()

# print("正在下载:" + img_name)

fp.write(data)

# print(img_name+ "下载完成")

fp.close()

def insertdb(self,rank,movie_name,direct,main_act,show_time,country,movie_type,score,count,quote,path):

if self.open:

self.cursor.execute("insert into movies(ranka,movie_name,direct,main_act,show_time,country,movie_type,score,count,quote,path)values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",

(rank,movie_name,direct,main_act,show_time,country,movie_type,score,count,quote,path))

else:

print("数据库未连接")

def closeUp(self):

if self.open:

self.con.commit()

self.con.close()

self.open = False

print("一共爬取了" ,self.no,"条数据")

url = 'https://movie.douban.com/top250'

myspider = MySpider()

myspider.startUp(url)

myspider.closeUp()

for t in myspider.Threads:

t.join()

print("End")

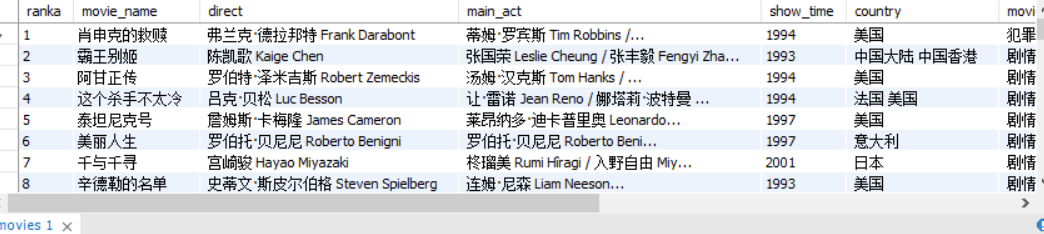

结果保存:

心得体会:

这一次的代码有的忘记了,还是看了很久才想起来要怎么操作。

作业二:

代码展示:

university:

import requests

from bs4 import BeautifulSoup

import re,os

import threading

import pymysql

import urllib

class MySpider:

def startUp(self,url):

headers = {

'Cookie': 'll="118200"; bid=6RFUdwTYOEU; _vwo_uuid_v2=D7971B6FDCF69217A8423EFCC2A21955D|41eb25e765bdf98853fd557b53016cd5; __gads=ID=9a583143d12c55e0-22dbef27e3c400c8:T=1606284964:RT=1606284964:S=ALNI_MYBPSHfsIfrvOZ_oltRmjCgkRpjRg; __utmc=30149280; ap_v=0,6.0; dbcl2="227293793:AVawqnPg0jI"; ck=SAKz; push_noty_num=0; push_doumail_num=0; __utma=30149280.2093786334.1603594037.1606300411.1606306536.8; __utmz=30149280.1606306536.8.5.utmcsr=accounts.douban.com|utmccn=(referral)|utmcmd=referral|utmcct=/; __utmt=1; __utmv=30149280.22729; __utmb=30149280.2.10.1606306536',

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36'

}

self.open = False

try:

self.con = pymysql.connect(host='localhost',port=3306,user='root',passwd='hadoop',database='mydb',charset='utf8')

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

self.open = True

try:

self.cursor.execute("drop table if exists movies")

except Exception as err:

# print(err)

pass

try:

sql = """create table movies(

ranka varchar(32),

movie_name varchar(32),

direct varchar(64),

main_act varchar(128),

show_time varchar(64),

country varchar(128),

movie_type varchar(64),

score varchar(32),

count varchar(32),

quote varchar(128),

path varchar(64)

)character set =utf8

"""

self.cursor.execute(sql)

print("表格创建成功")

except Exception as err:

print(err)

print("表格创建失败")

except Exception as err:

print(err)

self.no = 0

# self.page = 0

self.Threads = []

# page_text = requests.get(url=url,headers=headers).text

# soup = BeautifulSoup(page_text,'lxml')

# print(soup)

# li_list = soup.select("ol[class='grid_view'] li"c)

urls = []

for i in range(10):

url = 'https://movie.douban.com/top250?start=' + str(i*25) + '&filter='

print(url)

page_text = requests.get(url=url,headers=headers).text

soup = BeautifulSoup(page_text,'lxml')

# print(soup)

li_list = soup.select("ol[class='grid_view'] li")

print(len(li_list))

for li in li_list:

ranka = li.select("div[class='item'] div em")[0].text

movie_name = li.select("div[class='info'] div a span[class='title']")[0].text

print(movie_name)

dir_act = li.select("div[class='info'] div[class='bd'] p")[0].text

dir_act = ' '.join(dir_act.split())

try:

direct = re.search(':.*:',dir_act).group()[1:-3]

except:

direct = "奥利维·那卡什 Olivier Nakache / 艾力克·托兰达 Eric Toledano "

# print(direct)

# print(dir_act)

s = dir_act.split(':')

# print(s)

try:

main_act = re.search(r'(D)*',s[2]).group()

except:

main_act = "..."

# print(main_act)

pattern = re.compile('d+',re.S)

show_time = pattern.search(dir_act).group()

# print(show_time)

countryAndmovie_type = dir_act.split('/')

country = countryAndmovie_type[-2]

movie_type = countryAndmovie_type[-1]

score = li.select("div[class='info'] div[class='star'] span")[1].text

# print(score)

count = re.match(r'd+',li.select("div[class='info'] div[class='star'] span")[3].text).group()

# print(score,count,quote)

img_name = li.select("div[class='item'] div a img")[0]["alt"]

try:

quote = li.select("div[class='info'] p[class='quote'] span")[0].text

except:

quote = ""

# print(img_name)

img_src = li.select("div[class='item'] div a img[src]")[0]["src"]

path = 'movie_img\' + img_name + '.jpg'

# print(img_name,img_src,path)

print(ranka, '2', movie_name, '3', direct, '4', main_act, '5', show_time, '6', country, '7', movie_type, '8', score, '9', count, '10', quote, '11', path)

try:

self.insertdb(ranka,movie_name,direct,main_act,show_time,country,movie_type,score,count,quote,path)

self.no += 1

except Exception as err:

# print(err)

print("数据插入失败")

if url not in urls:

T = threading.Thread(target=self.download,args=(img_name,img_src))

T.setDaemon(False)

T.start()

self.Threads.append(T)

# print(len(li_list))

def download(self,img_name,img_src):

dir_path = 'movie_img'

if not os.path.exists(dir_path):

os.mkdir(dir_path)

# for img in os.listdir(movie_img):

# os.remove(os.path.join(movie_img,img))

file_path = dir_path + '/' + img_name + '.jpg'

with open(file_path,'wb') as fp:

data = urllib.request.urlopen(img_src)

data = data.read()

# print("正在下载:" + img_name)

fp.write(data)

# print(img_name+ "下载完成")

fp.close()

def insertdb(self,rank,movie_name,direct,main_act,show_time,country,movie_type,score,count,quote,path):

if self.open:

self.cursor.execute("insert into movies(ranka,movie_name,direct,main_act,show_time,country,movie_type,score,count,quote,path)values (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)",

(rank,movie_name,direct,main_act,show_time,country,movie_type,score,count,quote,path))

else:

print("数据库未连接")

def closeUp(self):

if self.open:

self.con.commit()

self.con.close()

self.open = False

print("一共爬取了" ,self.no,"条数据")

url = 'https://movie.douban.com/top250'

myspider = MySpider()

myspider.startUp(url)

myspider.closeUp()

for t in myspider.Threads:

t.join()

print("End")

item:

import scrapy

class UniversityProItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

sNo = scrapy.Field()

schoolName = scrapy.Field()

city = scrapy.Field()

officalUrl = scrapy.Field()

info = scrapy.Field()

mFile = scrapy.Field()

pass

pipeline:

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

# useful for handling different item types with a single interface

from itemadapter import ItemAdapter

from itemadapter import ItemAdapter

import pymysql

class UniversityPipeline:

def open_spider(self, spider):

print("opened")

try:

self.con = pymysql.connect(host="localhost", port=3306, user="root", passwd='hadoop', db='mydb',

charset='utf8')

self.cursor = self.con.cursor(pymysql.cursors.DictCursor)

try:

self.cursor.execute("drop table if exists university")

sql = """create table university(

sNo int primary key,

schoolName varchar(32),

city varchar(32),

officalUrl varchar(64),

info text,

mFile varchar(32)

)character set = utf8

"""

self.cursor.execute(sql)

except Exception as err:

print(err)

print("university表格创建失败")

self.open = True

self.count = 1

# self.cursor.execute("delete from university")

except Exception as err:

print(err)

self.open = False

print("数据库连接失败")

def process_item(self, item, spider):

# print(item['sNo'])

# print(item['schoolName'])

# print(item['city'])

# print(item['officalUrl'])

# print(item['info'])

# print(item['mFile'])

print(self.count, item['schoolName'], item['city'], item['officalUrl'], item['info'], item['mFile'])

if self.open:

try:

self.cursor.execute(

"insert into university (sNo,schoolName,city,officalUrl,info,mFile) values(%s,%s,%s,%s,%s,%s)",

(self.count, item['schoolName'], item['city'], item['officalUrl'], item['info'], item['mFile']))

self.count += 1

except:

print("数据插入失败")

else:

print("数据库未连接")

return item

def close_spider(self, spider):

if self.open:

self.con.commit()

self.con.close()

self.open = False

print('closed')

print("一共爬取了", self.count, "个院校的排名以及院校信息")

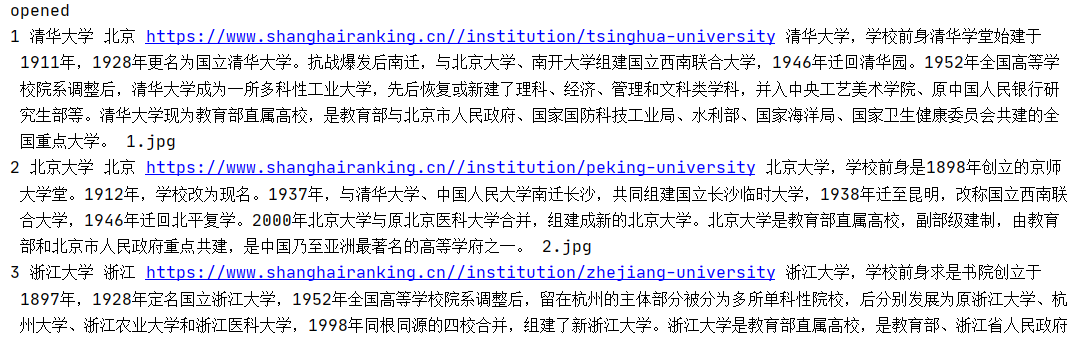

结果保存:

心得体会:

在原来的基础上操作了很久,才有的结果。虽然过程很艰难,但是做出来了还是很开心的事情。

作业三:

代码展示:

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

import time

import pymysql

import re

class MySpider:

def startUp(self, url):

print('begin')

self.driver = webdriver.Chrome(executable_path='D:ChromeCorechromedriver.exe')

self.driver.get(url)

self.count = 0

self.open = False

try:

self.db = pymysql.connect(host='127.0.0.1', port=3306, user='root', passwd='hadoop', database='mydb',

charset='utf8')

self.cursor = self.db.cursor()

self.cursor.execute("drop table if exists myCourse")

sql = """create table myCourse (

Id int,

cCourse char(128),

cCollege char(128),

cTeacher char(128),

cTeam char(128),

cCount char(128),

cProcess char(128),

cBrief text )"""

self.cursor.execute(sql)

self.open = True

except:

self.db.close()

self.open = False

print("数据库连接或者表格创建失败")

print(self.open)

self.driver.get(url)

"""

1. 全局性设定

2. 每个半秒查询一次元素,直到超出最大时间

3. 后面所有选择元素的代码不需要单独指定周期定等待了

"""

self.driver.implicitly_wait(10) # 隐式等待

time.sleep(1)

enter_first = self.driver.find_element_by_xpath(

"//div[@id='g-container']//div[@class='web-nav-right-part']//a[@class='f-f0 navLoginBtn']")

enter_first.click()

# time.sleep(1)

other_enter = self.driver.find_element_by_xpath("//span[@class='ux-login-set-scan-code_ft_back']")

other_enter.click()

# time.sleep(1)

phone_enter = self.driver.find_element_by_xpath("//ul[@class='ux-tabs-underline_hd']/li[2]")

phone_enter.click()

time.sleep(1)

iframe = self.driver.find_element_by_xpath("//div[@class='ux-login-set-container']//iframe")

self.driver.switch_to.frame(iframe)

phone_number = self.driver.find_element_by_xpath("//div[@class='u-input box']//input[@id='phoneipt']")

# phone_number = self.driver.find_element_by_id('phoneipt')

phone_number.send_keys('15879295053')

time.sleep(1)

phone_passwd = self.driver.find_element_by_xpath("//div[@class='u-input box']/input[2]")

phone_passwd.send_keys('*******')

time.sleep(1)

self.driver.find_element_by_xpath("//div[@class='f-cb loginbox']/a").click()

time.sleep(3)

self.driver.find_element_by_xpath("//div[@class='ga-click u-navLogin-myCourse']//span").click()

div_list = self.driver.find_elements_by_xpath("//div[@class='course-panel-wrapper']/div/div")

time.sleep(2)

for div in div_list:

div.click()

time.sleep(3)

all_handles = self.driver.window_handles

self.driver.switch_to_window(all_handles[-1])

time.sleep(2)

center = self.driver.find_element_by_xpath("//h4[@class='f-fc3 courseTxt']")

center.click()

all_handles = self.driver.window_handles

self.driver.switch_to_window(all_handles[-1])

self.count += 1

id = self.count

try:

course = self.driver.find_element_by_xpath("//span[@class='course-title f-ib f-vam']").text

college = self.driver.find_element_by_xpath("//a[@data-cate='课程介绍页']").get_attribute("data-label")

teachers = self.driver.find_elements_by_xpath("//div[@class='um-list-slider_con_item']//h3")

teacher = self.driver.find_element_by_xpath("//div[@class='um-list-slider_con_item']//h3").text

team = ""

for one in teachers:

team = team + " " + one.text

contain_count = self.driver.find_element_by_xpath(

"//div[@class='course-enroll-info_course-enroll_price-enroll']/span").text

count = re.findall("d+", contain_count)[0]

process = self.driver.find_element_by_xpath(

"//div[@class='course-enroll-info_course-info_term-info_term-time']//span[position()=2]").text

brief = self.driver.find_element_by_xpath("//div[@class='course-heading-intro_intro']").text

print(id, course, college, teacher, team, count, process, brief)

# print(type(id),type(course),type(college),type(teacher),type(team),type(count),type(process),type(brief))

except Exception as err:

print(err)

course = ""

college = ""

teacher = ""

team = ""

count = ""

process = ""

brief = ""

self.insertDB(id, course, college, teacher, team, count, process, brief)

# self.driver.close()#增加这条语句,爬取第四条语句的时候就报错了

self.driver.switch_to_window(all_handles[0])

def insertDB(self, id, course, college, teacher, team, count, process, brief):

try:

self.cursor.execute(

"insert into myCourse( Id, cCourse, cCollege, cTeacher, cTeam, cCount, cProcess, cBrief) values(%s,%s,%s,%s,%s,%s,%s,%s)",

(id, course, college, teacher, team, count, process, brief))

self.db.commit()

except Exception as err:

print("数据插入失败")

print(err)

def closeUp(self):

print(self.open)

if self.open:

self.db.commit()

self.open = False

self.driver.close()

print('一共爬取了', self.count, '条数据')

mooc_url = 'https://www.icourse163.org/'

spider = MySpider()

spider.startUp(mooc_url)

spider.closeUp()

结果保存:

心得体会:Selenium还是不是很会用,在智鑫的帮助下才理解了具体的用法。不过能够成功处理自动登录,感觉已经进步很多了。