PodCIDRs issue

Problem encountered when installing Kubernetes v1.18.5 with Cilium 1.9.4

- Error message

    E0827 21:08:22.925379 1 controller_utils.go:245] Error while processing Node Add: failed to allocate cidr from cluster cidr at idx:0: CIDR allocation failed; there are no remaining CIDRs left to allocate in the accepted range
    I0827 21:08:22.925407 1 event.go:278] Event(v1.ObjectReference{Kind:"Node", Namespace:"", Name:"prod-be-k8s-wn6", UID:"8a72a498-c29a-4fb9-a798-7773f5a4f538", APIVersion:"v1", ResourceVersion:"", FieldPath:""}): type: 'Normal' reason: 'CIDRNotAvailable' Node prod-be-k8s-wn6 status is now: CIDRNotAvailable
    I0827 21:08:22.925420 1 shared_informer.go:230] Caches are synced for service account
    W0827 21:08:22.925499 1 actual_state_of_world.go:506] Failed to update statusUpdateNeeded field in actual state of world: Failed to set statusUpdateNeeded to needed true, because nodeName="prod-fe-k8s-wn1" does not exist

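Suspected cause (unconfirmed): the Pod address range that kube-controller-manager reserves with --allocate-node-cidrs overlaps with the pool that cilium-agent manages when Cilium is installed with --set ipam.mode=cluster-pool.

- Fix: change cilium-agent's PodCIDR IPAM mode by installing Cilium with --set ipam.mode=kubernetes. Pod IPs then come from the PodCIDR that Kubernetes assigns to each Node.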
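The error message says the controller ran out of per-node CIDRs in the cluster range. Below is a minimal Python sketch of the arithmetic behind that message and of the overlap check suspected above. It is a simplification, not the controller's actual allocator. It assumes the cluster range is split evenly into per-node subnets, as configured with kube-controller-manager's `--cluster-cidr` and `--node-cidr-mask-size`. The ranges `10.244.0.0/20`, `/24` and `10.244.8.0/21` are hypothetical values, not this cluster's configuration.

```python
import ipaddress
from typing import Dict, List, Optional

def node_cidr_capacity(cluster_cidr: str, node_mask: int) -> int:
    """How many per-node /node_mask subnets fit into cluster_cidr."""
    net = ipaddress.ip_network(cluster_cidr)
    if node_mask < net.prefixlen:
        raise ValueError("node mask must not be shorter than the cluster prefix")
    return 2 ** (node_mask - net.prefixlen)

def allocate_node_cidrs(cluster_cidr: str, node_mask: int,
                        nodes: List[str]) -> Dict[str, Optional[ipaddress.IPv4Network]]:
    """Give each node the next free subnet in order (IPv4 example);
    None once the cluster range is used up."""
    free = ipaddress.ip_network(cluster_cidr).subnets(new_prefix=node_mask)
    return {node: next(free, None) for node in nodes}

def ranges_overlap(a: str, b: str) -> bool:
    """True if two CIDR ranges share at least one address."""
    return ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))

if __name__ == "__main__":
    # Hypothetical values, not taken from the cluster in this note.
    print(node_cidr_capacity("10.244.0.0/20", 24))           # 16
    alloc = allocate_node_cidrs("10.244.0.0/20", 24, [f"node{i}" for i in range(17)])
    print(alloc["node16"])                                   # None: the 17th node gets nothing
    print(ranges_overlap("10.244.0.0/20", "10.244.8.0/21"))  # True
```

A `/20` split into `/24`s leaves room for only 16 nodes. Any further node ends up in the `CIDRNotAvailable` state seen in the log.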

Kubernetes svc externalIP

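Background: the worker nodes (ECS instances) have both a public and a private address.

Case

A Service was created with an ECS public address in externalIPs, and communication failed. For example, opening Prometheus at that address and port did not load the page, even though the port itself was reachable.

That public address, however, belongs to a control-plane (CP) node, and the Service used the default service.spec.externalTrafficPolicy of Cluster. Cluster load-balances traffic across endpoints on all nodes. It does not preserve the client's source IP, and a request may take extra hops inside the cluster.

service.spec.externalTrafficPolicy: Local // preserves the client's source IP, but risks an uneven distribution of traffic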
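To make the trade-off concrete, here is a toy Python model of where a request ends up under each policy. It is a simplification, not kube-proxy's or Cilium's actual logic. The node names, the IPs and the `pick` parameter (a stand-in for the load-balancing choice) are hypothetical. In Cluster mode the model always assumes the source is rewritten to the receiving node's IP.

```python
from dataclasses import dataclass
from typing import Dict, Optional

@dataclass(frozen=True)
class Delivery:
    backend_node: str  # node whose Pod receives the request
    source_ip: str     # source address as seen by that Pod

def deliver(policy: str, ingress_node: str, ingress_node_ip: str,
            client_ip: str, endpoints: Dict[str, int], pick: int = 0) -> Optional[Delivery]:
    """Toy model of externalTrafficPolicy for a request arriving at ingress_node.

    endpoints maps node name -> number of ready endpoints on that node.
    pick is a hypothetical stand-in for the load-balancing choice.
    """
    if policy == "Local":
        # Only endpoints on the receiving node are eligible; the client IP is kept.
        if endpoints.get(ingress_node, 0) == 0:
            return None  # no local endpoint: the request is not served
        return Delivery(ingress_node, client_ip)
    if policy == "Cluster":
        # Endpoints on any node are eligible, which may add a hop between nodes.
        candidates = sorted(n for n, count in endpoints.items() if count > 0)
        if not candidates:
            return None
        target = candidates[pick % len(candidates)]
        # Simplification: treat the source as rewritten to the receiving node's IP.
        return Delivery(target, ingress_node_ip)
    raise ValueError(f"unknown externalTrafficPolicy: {policy!r}")

if __name__ == "__main__":
    eps = {"cp1": 0, "wn1": 1, "wn2": 1}  # hypothetical: no endpoint on the CP node
    print(deliver("Cluster", "cp1", "192.0.2.10", "203.0.113.5", eps))
    print(deliver("Local", "cp1", "192.0.2.10", "203.0.113.5", eps))
```

In this model, a CP node without local endpoints serves nothing under Local. Under Cluster it forwards to a worker, but the Pod sees the CP node's address instead of the client's.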

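Solution

Setting the Service type to NodePort is enough. The Alibaba Cloud public address was not among the Kubernetes Node's addresses when the cluster was initialized. As a result, it is registered as neither InternalIP nor ExternalIP.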
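One way to check the claim above is to look up the public address in the Node object's `status.addresses`, for example from `kubectl get node NAME -o json`. The sketch below is minimal and assumes that JSON has already been captured. The sample node excerpt and the public address 203.0.113.20 are hypothetical placeholders.

```python
import json
from typing import Optional

def node_address_type(node: dict, ip: str) -> Optional[str]:
    """Return the type (InternalIP, ExternalIP, Hostname, ...) under which `ip`
    appears in node["status"]["addresses"], or None if it is not listed."""
    for entry in node.get("status", {}).get("addresses", []):
        if entry.get("address") == ip:
            return entry.get("type")
    return None

if __name__ == "__main__":
    # Hypothetical excerpt of `kubectl get node NAME -o json` output.
    raw = '''{"status": {"addresses": [
        {"type": "InternalIP", "address": "10.1.17.237"},
        {"type": "Hostname", "address": "prod-be-k8s-wn7"}]}}'''
    node = json.loads(raw)
    print(node_address_type(node, "10.1.17.237"))   # InternalIP
    print(node_address_type(node, "203.0.113.20"))  # None: the public IP is not a Node address
```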

Cilium masquerading

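Background: after the cluster was deployed and routing was configured, hosts outside the Kubernetes cluster could not connect to service ports of Pods inside the cluster. ICMP requests did get replies.

The first suspect was Cilium network policy. Both CiliumNetworkPolicy and CiliumClusterwideNetworkPolicy were modified, for ingress and egress alike, but the connection still failed.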

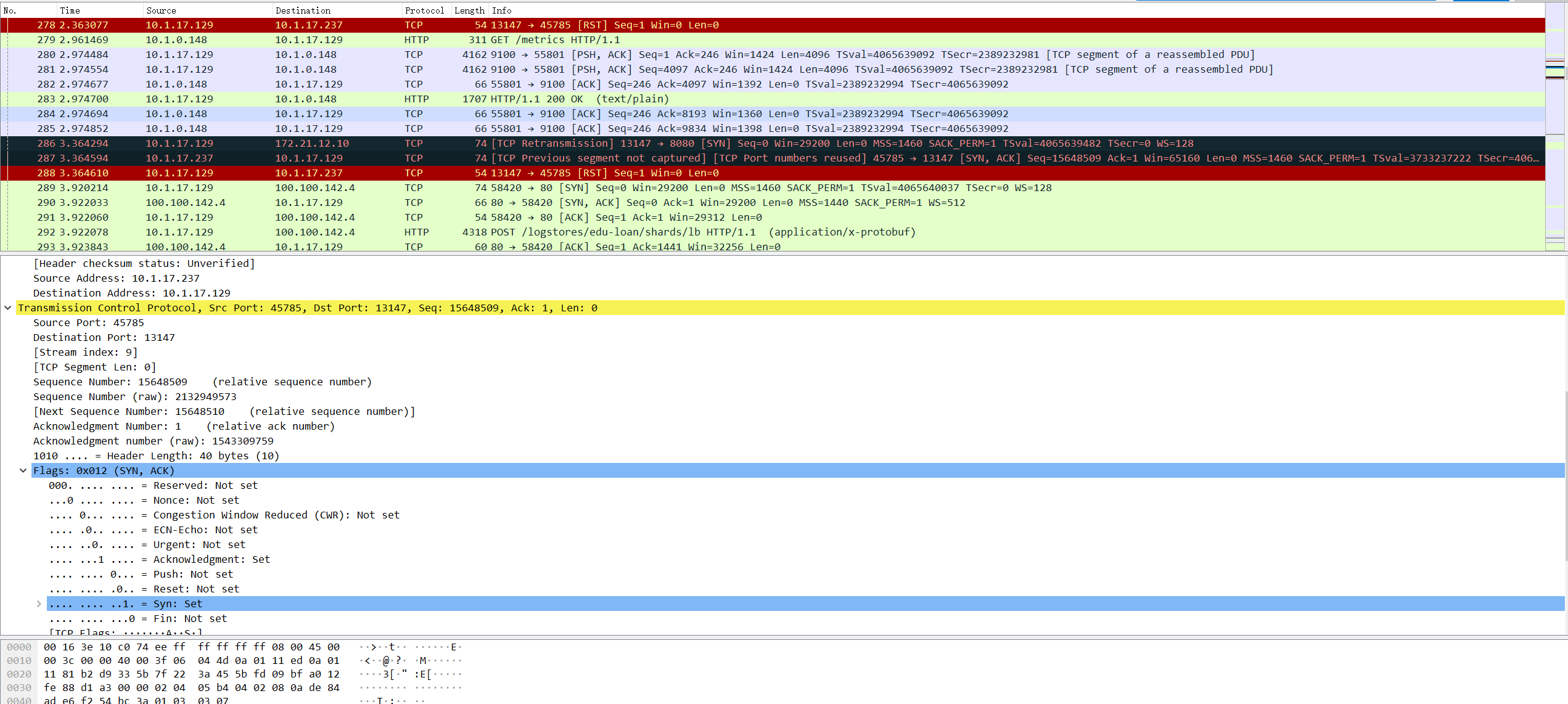

Packet captures revealed the following.

Cloud route mode

- Cilium network policy left at its defaults

    root@PROD-BE-K8S-WN7:/home/cilium# cilium endpoint list
    ENDPOINT   POLICY (ingress)   POLICY (egress)   IDENTITY   LABELS (source:key[=value])                       IPv6   IPv4           STATUS
               ENFORCEMENT        ENFORCEMENT
    1384       Disabled           Disabled          1          k8s:node-role.kubernetes.io/worker=worker                               ready
                                                               reserved:host
    3402       Disabled           Disabled          1571       k8s:app=tomcat                                           172.21.12.32   ready
                                                               k8s:io.cilium.k8s.policy.cluster=default
                                                               k8s:io.cilium.k8s.policy.serviceaccount=default
                                                               k8s:io.kubernetes.pod.namespace=default
    3569       Disabled           Disabled          4          reserved:health                                          172.21.12.20   ready
    root@PROD-BE-K8S-WN7:/home/cilium# cilium bpf policy get --all
    /sys/fs/bpf/tc/globals/cilium_policy_01384:
    POLICY   DIRECTION   LABELS (source:key[=value])   PORT/PROTO   PROXY PORT   BYTES      PACKETS
    Allow    Ingress     reserved:unknown              ANY          NONE         0          0
    Allow    Egress      reserved:unknown              ANY          NONE         0          0
    /sys/fs/bpf/tc/globals/cilium_policy_03402:
    POLICY   DIRECTION   LABELS (source:key[=value])   PORT/PROTO   PROXY PORT   BYTES      PACKETS
    Allow    Ingress     reserved:unknown              ANY          NONE         7596       118
    Allow    Ingress     reserved:host                 ANY          NONE         0          0
    Allow    Egress      reserved:unknown              ANY          NONE         4444       60
    /sys/fs/bpf/tc/globals/cilium_policy_03569:
    POLICY   DIRECTION   LABELS (source:key[=value])   PORT/PROTO   PROXY PORT   BYTES      PACKETS
    Allow    Ingress     reserved:unknown              ANY          NONE         12049100   153645
    Allow    Ingress     reserved:host                 ANY          NONE         906423     10407
    Allow    Egress      reserved:unknown              ANY          NONE         10679305   139853

- cilium bpf nat list | grep "10.1.16.186"

    root@PROD-BE-K8S-WN7:/home/cilium# cilium bpf nat list | grep "10.1.16.186"
    Unable to open /sys/fs/bpf/tc/globals/cilium_snat_v6_external: Unable to get object /sys/fs/bpf/tc/globals/cilium_snat_v6_external: no such file or directory. Skipping.
    TCP IN 10.1.16.186:20940 -> 10.1.17.237:49667 XLATE_DST 172.21.12.32:8080 Created=69667sec HostLocal=0
    TCP OUT 172.21.12.32:8080 -> 10.1.16.186:20940 XLATE_SRC 10.1.17.237:49667 Created=69667sec HostLocal=0
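Read side by side, the two entries show the reply path being translated. The OUT entry rewrites the Pod's source 172.21.12.32:8080 to 10.1.17.237:49667, which appears to be the node's own address. The IN entry maps that address back to the Pod. A small Python parser for lines in this format can help when scanning a long NAT table. The field layout is inferred from the two lines above, not from a documented Cilium output format.

```python
import re
from typing import NamedTuple, Optional

class NatEntry(NamedTuple):
    proto: str       # e.g. TCP
    direction: str   # IN or OUT
    src: str         # ip:port before translation
    dst: str
    xlate: str       # XLATE_SRC or XLATE_DST
    xlate_addr: str  # ip:port after translation

# Layout inferred from the sample lines above (an assumption, not a spec).
NAT_LINE = re.compile(
    r"^(?P<proto>\w+) (?P<direction>IN|OUT) (?P<src>\S+) -> (?P<dst>\S+) "
    r"(?P<xlate>XLATE_SRC|XLATE_DST) (?P<xlate_addr>\S+)"
)

def parse_nat_line(line: str) -> Optional[NatEntry]:
    """Parse one NAT entry; returns None for warnings and other non-entry lines."""
    m = NAT_LINE.match(line.strip())
    return NatEntry(**m.groupdict()) if m else None

def snat_rewrites(lines):
    """Yield (original source, translated source) for every OUT/XLATE_SRC entry."""
    for line in lines:
        entry = parse_nat_line(line)
        if entry and entry.direction == "OUT" and entry.xlate == "XLATE_SRC":
            yield entry.src, entry.xlate_addr

if __name__ == "__main__":
    sample = [
        "TCP IN 10.1.16.186:20940 -> 10.1.17.237:49667 XLATE_DST 172.21.12.32:8080 Created=69667sec HostLocal=0",
        "TCP OUT 172.21.12.32:8080 -> 10.1.16.186:20940 XLATE_SRC 10.1.17.237:49667 Created=69667sec HostLocal=0",
    ]
    for before, after in snat_rewrites(sample):
        print(f"{before} is rewritten to {after}")  # 172.21.12.32:8080 is rewritten to 10.1.17.237:49667
```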

- cilium monitor | grep "10.1.16.186"

    root@PROD-BE-K8S-WN7:/home/cilium# cilium monitor | grep "10.1.16.186"
    level=info msg="Initializing dissection cache..." subsys=monitor
    -> endpoint 3402 flow 0x0 identity 2->1571 state new ifindex lxc22edfa994e70 orig-ip 10.1.16.186: 10.1.16.186:20982 -> 172.21.12.32:8080 tcp SYN
    -> endpoint 3402 flow 0x0 identity 2->1571 state established ifindex lxc22edfa994e70 orig-ip 10.1.16.186: 10.1.16.186:20982 -> 172.21.12.32:8080 tcp RST
    -> endpoint 3402 flow 0x0 identity 2->1571 state established ifindex lxc22edfa994e70 orig-ip 10.1.16.186: 10.1.16.186:20982 -> 172.21.12.32:8080 tcp RST
    -> endpoint 3402 flow 0x0 identity 2->1571 state established ifindex lxc22edfa994e70 orig-ip 10.1.16.186: 10.1.16.186:20982 -> 172.21.12.32:8080 tcp RST

// The output above shows the request being reset.

- tcpdump capture
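Here is one plausible reading of the NAT entries and the RST events together. The client connects to the Pod IP 172.21.12.32:8080. The Pod's reply, however, leaves the node masqueraded as 10.1.17.237:49667 (the OUT/XLATE_SRC entry). The client's TCP stack has no connection to that address, so it answers with RST. The IN/XLATE_DST entry would translate that RST back towards the Pod, which is consistent with the RSTs in the monitor output. The Python sketch below models only that matching rule and is a deliberate simplification of TCP demultiplexing. The `Addr`, `reply_source` and `client_reaction` names are hypothetical.

```python
from typing import NamedTuple

class Addr(NamedTuple):
    ip: str
    port: int

def reply_source(pod: Addr, node: Addr, snat: bool) -> Addr:
    """Source address the Pod's reply carries after leaving the node."""
    return node if snat else pod

def client_reaction(connected_to: Addr, syn_ack_from: Addr) -> str:
    """Simplified client TCP stack: a SYN-ACK is only accepted from the exact
    peer the SYN was sent to; one from any other address matches no
    connection and is answered with RST."""
    return "ACK" if syn_ack_from == connected_to else "RST"

if __name__ == "__main__":
    pod = Addr("172.21.12.32", 8080)
    node = Addr("10.1.17.237", 49667)  # translated address from the NAT table above
    print(client_reaction(pod, reply_source(pod, node, snat=True)))   # RST
    print(client_reaction(pod, reply_source(pod, node, snat=False)))  # ACK
```

Under this reading, the fix is to stop SNAT for replies towards such clients, which is what the next setup does.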

cloud route - ipMasqAgent.enabled=true

- Cilium network policy left at its defaults

- With ipMasqAgent.enabled=true, traffic from cluster Pods to addresses in nonMasqueradeCIDRs is not masqueraded, meaning no SNAT is applied.

    root@HK-K8S-WN2:/home/cilium# cilium status --verbose
    KVStore:                Ok   Disabled
    Kubernetes:             Ok   1.18 (v1.18.5) [linux/amd64]
    Kubernetes APIs:        ["cilium/v2::CiliumClusterwideNetworkPolicy", "cilium/v2::CiliumEndpoint", "cilium/v2::CiliumNetworkPolicy", "cilium/v2::CiliumNode", "core/v1::Namespace", "core/v1::Node", "core/v1::Pods", "core/v1::Service", "discovery/v1beta1::EndpointSlice", "networking.k8s.io/v1::NetworkPolicy"]
    KubeProxyReplacement:   Strict   [eth0 (Direct Routing)]
    Cilium:                 Ok   1.9.10 (v1.9.10-4e26039)
    NodeMonitor:            Listening for events on 2 CPUs with 64x4096 of shared memory
    Cilium health daemon:   Ok
    IPAM:                   IPv4: 8/255 allocated from 172.20.2.0/24,
    Allocated addresses:
      172.20.2.130 (default/prometheus-alertmanager-58dc496b97-b82ml [restored])
      172.20.2.167 (default/rabbitmq-0 [restored])
      172.20.2.207 (default/nginx-55d4fb7c6f-n9rxb [restored])
      172.20.2.215 (router)
      172.20.2.222 (default/tomcat-74b4555889-z5pxt [restored])
      172.20.2.253 (default/zk-0 [restored])
      172.20.2.27 (default/redis-56bdbddbbb-r9fsx [restored])
      172.20.2.86 (health)
    BandwidthManager:       Disabled
    Host Routing:           BPF
    Masquerading:           BPF (ip-masq-agent)   [eth0]   172.20.0.0/20

- Check the nonMasqueradeCIDRs address ranges

    root@HK-K8S-WN2:/home/cilium# cilium bpf ipmasq list
    IP PREFIX/ADDRESS
    100.64.0.0/10
    169.254.0.0/16
    172.16.0.0/12
    192.0.2.0/24
    192.168.0.0/16
    198.18.0.0/15
    10.0.0.0/8
    192.0.0.0/24
    192.88.99.0/24
    198.51.100.0/24
    203.0.113.0/24
    240.0.0.0/4
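The rule described above reduces to a prefix-membership test against this list. The Python sketch below models only that nonMasqueradeCIDRs check and ignores any other masquerading conditions Cilium may apply. The CIDRs are copied from the output above. Both external clients in this note, 10.1.16.186 and 172.19.0.195, fall inside listed ranges, while 8.8.8.8 stands in for an arbitrary public destination.

```python
import ipaddress

# nonMasqueradeCIDRs as listed by `cilium bpf ipmasq list` above.
NON_MASQ_CIDRS = [ipaddress.ip_network(c) for c in (
    "100.64.0.0/10", "169.254.0.0/16", "172.16.0.0/12", "192.0.2.0/24",
    "192.168.0.0/16", "198.18.0.0/15", "10.0.0.0/8", "192.0.0.0/24",
    "192.88.99.0/24", "198.51.100.0/24", "203.0.113.0/24", "240.0.0.0/4",
)]

def should_masquerade(dst_ip: str, non_masq=NON_MASQ_CIDRS) -> bool:
    """Only the nonMasqueradeCIDRs check: SNAT Pod egress traffic unless the
    destination lies inside one of the listed ranges."""
    dst = ipaddress.ip_address(dst_ip)
    return not any(dst in net for net in non_masq)

if __name__ == "__main__":
    for ip in ("172.19.0.195", "10.1.16.186", "8.8.8.8"):
        print(ip, "masquerade" if should_masquerade(ip) else "no SNAT")
```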

- Test: request a Pod port from an address outside the cluster

    <root@proxy ~># curl -i 172.20.2.222:8080
    HTTP/1.1 200
    Content-Type: text/html;charset=UTF-8
    Transfer-Encoding: chunked
    Date: Mon, 13 Sep 2021 11:27:07 GMT

    # The cilium-agent bpf nat list on the Pod's node shows no NAT entry for this client, confirming that no address translation (DNAT) is needed
    root@HK-K8S-WN2:/home/cilium# cilium bpf nat list | grep "172.19.0.195"
    Unable to open /sys/fs/bpf/tc/globals/cilium_snat_v6_external: Unable to get object /sys/fs/bpf/tc/globals/cilium_snat_v6_external: no such file or directory. Skipping.

- Check the cilium monitor events

    root@HK-K8S-WN2:/home/cilium# cilium monitor | grep "172.19.0.195"
    level=info msg="Initializing dissection cache..." subsys=monitor
    -> endpoint 777 flow 0x0 identity 2->16701 state new ifindex lxce0b12341417a orig-ip 172.19.0.195: 172.19.0.195:56966 -> 172.20.2.222:8080 tcp SYN
    -> endpoint 777 flow 0x0 identity 2->16701 state established ifindex lxce0b12341417a orig-ip 172.19.0.195: 172.19.0.195:56966 -> 172.20.2.222:8080 tcp ACK
    -> endpoint 777 flow 0x0 identity 2->16701 state established ifindex lxce0b12341417a orig-ip 172.19.0.195: 172.19.0.195:56966 -> 172.20.2.222:8080 tcp ACK
    -> endpoint 777 flow 0x0 identity 2->16701 state established ifindex lxce0b12341417a orig-ip 172.19.0.195: 172.19.0.195:56966 -> 172.20.2.222:8080 tcp ACK, FIN
    -> endpoint 777 flow 0x0 identity 2->16701 state established ifindex lxce0b12341417a orig-ip 172.19.0.195: 172.19.0.195:56966 -> 172.20.2.222:8080 tcp ACK
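Compared with the earlier capture, the client now follows its SYN with ACKs and a clean FIN instead of RSTs. Below is a rough Python sketch for making that comparison on longer `cilium monitor` output. It assumes each trace line ends with a flag field such as `tcp SYN` or `tcp ACK, FIN`, as in the samples in this note. The verdict names are hypothetical labels.

```python
import re

# The flag field at the end of each trace line, e.g. "tcp SYN" or "tcp ACK, FIN".
FLAGS = re.compile(r"tcp ([A-Z]+(?:, [A-Z]+)*)\s*$")

def tcp_flags(lines):
    """Return the set of TCP flags for each `cilium monitor` trace line."""
    result = []
    for line in lines:
        m = FLAGS.search(line)
        if m:
            result.append(set(m.group(1).split(", ")))
    return result

def classify(flag_sets):
    """Rough verdict for one client -> Pod flow seen at the endpoint."""
    if any("RST" in flags for flags in flag_sets):
        return "reset"
    if {"SYN"} in flag_sets and any("ACK" in flags for flags in flag_sets):
        return "established"
    return "incomplete"

if __name__ == "__main__":
    healthy = [
        "-> endpoint 777 ... 172.19.0.195:56966 -> 172.20.2.222:8080 tcp SYN",
        "-> endpoint 777 ... 172.19.0.195:56966 -> 172.20.2.222:8080 tcp ACK",
        "-> endpoint 777 ... 172.19.0.195:56966 -> 172.20.2.222:8080 tcp ACK, FIN",
    ]
    print(classify(tcp_flags(healthy)))  # established
```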