1.本文要点说明

1.OpenCV双目相机

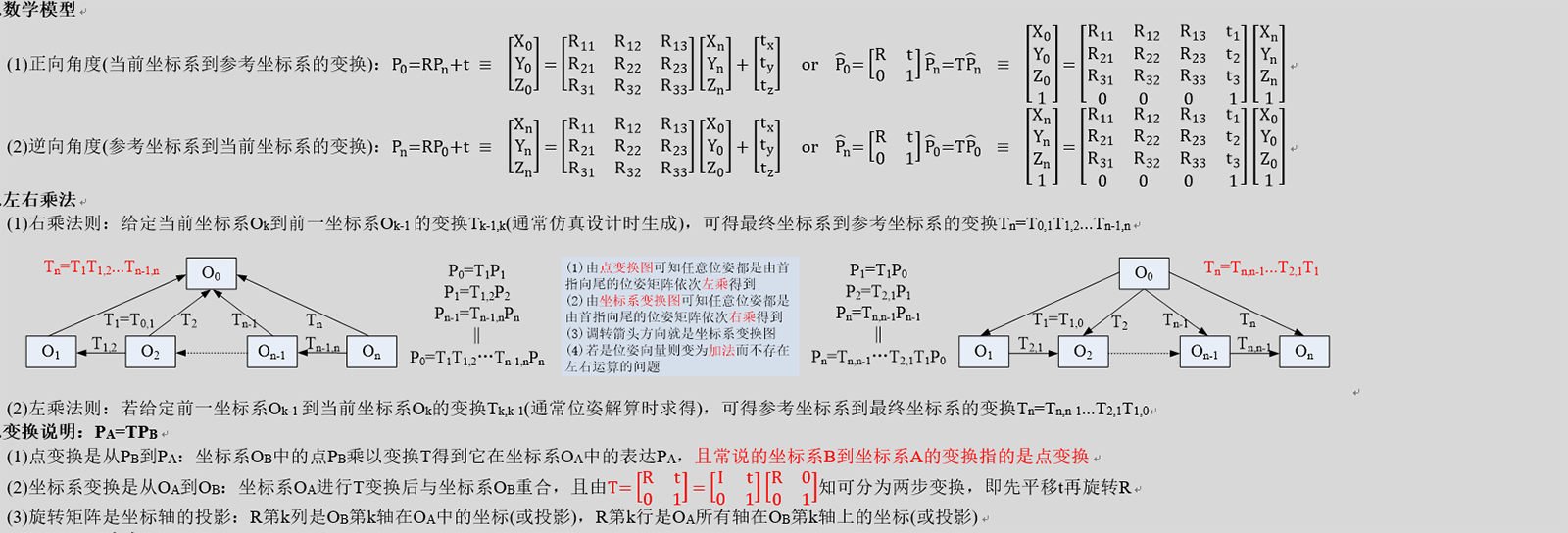

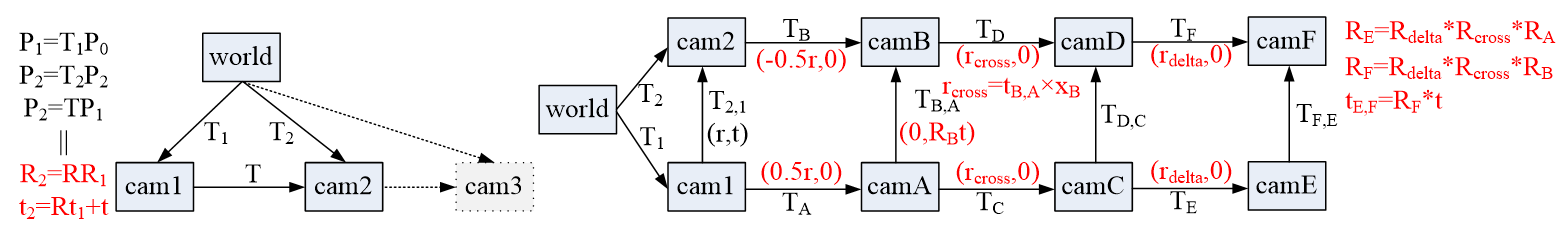

(1)左右位姿变换关系:所得变换是左相机到右相机的变换,可将左相机看作第一次观察而右相机看第二次观察,若是多目相机则继续第三次第四次等观察

(2)左右位姿公式关系:

(3)左右位姿矢量关系:左相机指定右相机=世界指向右相机*左相机指定世界

(4)生成立体相机:首先使左右像平面平行,然后使左右像平面的X轴重合(针对水平极线约束),最后还可以添加一个自定义的旋转以在期望的角度成像,注意只能旋转而不能平移

1)左右像平面平行要求rB,A=0:由矢量关系图可知这就要求rA+rB=0,为保证左右共视区域最大,显然最佳解是rA=0.5r和rB=-0.5r,此时可解得tB,A=RBt

2)左右像平面X轴重合要求tV,UY=0且tV,UZ=0:可将坐标系OA和OB进行某个旋转让其X轴为tB,A,显然此旋转为X轴叉乘tB,A。由于X轴叉乘tB,A是坐标系OA和OB到坐标系OU和OV的坐标系变换,所以坐标系OA和OB到坐标系OU和OV的点变换为其逆,即tB,A叉乘X轴。于是由叉乘得旋转轴,夹角公式得旋转角,设复合结果为rcross

3)为实现在期望的角度重成像,最后还可以添加一个自定义旋转rdelta,于是最终结果为RE=Rdelta*Rcross*RA,RF=Rdelta*Rcross*RB,tE,F=RF*t

注意:以上即是OpenCV中Radtan针孔成像模型和KB针孔成像模型的实现,只是没有自定义旋转这一步,OpenCV额外模块中的MeiUCM模型还提供了另外一种实现

1)为使左右像平面平行,给的解是rA=0和rB=-r,这相对于rA=0.5r和rB=-0.5r的解减少左右的共视区域

2)为左右像平面X轴重合,直接让tB,A是新坐标系的X轴,然后让(-tB,AY,tB,AX,0)是Y轴,最后X轴叉乘Y轴得Z轴。

2.实验测试代码

依赖于OpenCV、Ceres和Spdlog。

1 #include <opencv2/opencv.hpp> 2 #include <ceres/ceres.h> 3 #include <ceres/rotation.h> 4 #include <spdlog/spdlog.h> 5 #include <opencv2/ccalib/omnidir.hpp> 6 using namespace std; 7 using namespace cv; 8 9 class StereoRect 10 { 11 public: 12 static void TestMe(int argc = 0, char** argv = 0) 13 { 14 for (int k = 0; k < 999; ++k) 15 { 16 //0.GenerateData 17 Mat_<double> R(3, 3); ceres::EulerAnglesToRotationMatrix(Matx31d::randu(-15, 15).val, 0, R.ptr<double>()); 18 Mat_<double> t({ 3, 1 }, { -Matx21d::randu(10, 20)(0), Matx21d::randu(-5, 5)(0), Matx21d::randu(-5, 5)(0) });//left camera x must be negative in right camera system which echoes opencv 19 Matx31d P = P.randu(-999, 999); if ((P(2) < 0)) P(2) = -P(2);//P from phyCam1 to virCam1 == P from phyCam1 to phyCam2 to virCam2. virCam1 == virCam2 when no translation 20 21 //1.CalcByOpenCVPin 22 Matx33d R11, R12; 23 cv::stereoRectify(Matx33d::eye(), Mat(), Matx33d::eye(), Mat(), Size(480, 640), R, t, R11, R12, Matx34d(), Matx34d(), noArray()); 24 double infP11P12 = cv::norm(R11 * P, R12 * R * P, NORM_INF); 25 26 //2.CalcByOpenCVKB 27 Matx33d R21, R22; 28 fisheye::stereoRectify(Matx33d::eye(), Matx41d::zeros(), Matx33d::eye(), Matx41d::zeros(), Size(480, 640), R, t, R21, R22, Matx34d(), Matx34d(), noArray(), 0); 29 double infP21P22 = cv::norm(R21 * P, R22 * R * P, NORM_INF); 30 31 //3.CalcByDIYPin 32 Matx33d R31, R32; 33 Matx31d t3 = runRect(R31, R32, R, t); 34 double infP31P32 = cv::norm(R31 * P, R32 * R * P, NORM_INF); 35 36 //4.CalcByDIYMUCM 37 Matx33d R41, R42; 38 runRectTmp(R, t, R41, R42); 39 double infP41P42 = cv::norm(R41 * P, R42 * R * P, NORM_INF); 40 41 //5.CalcByOpenCVMUCM 42 Matx33d R51, R52; 43 omni_stereoRectifyModified(R, t, R51, R52); 44 double infP51P52 = cv::norm(R51 * P, R52 * R * P, NORM_INF); 45 46 //6.AnalyzeError 47 double infR11R21 = norm(R11, R21, NORM_INF); 48 double infR11R31 = norm(R11, R31, NORM_INF); 49 double infR41R51 = norm(R41, R51, NORM_INF); 50 double infR12R22 = norm(R12, R22, NORM_INF); 51 double infR12R32 = norm(R12, R32, NORM_INF); 52 double infR42R52 = norm(R42, R52, NORM_INF); 53 54 //7.PrintError 55 cout << endl << "LoopCount: " << k << endl; 56 if (infP11P12 > 1E-12 || infP21P22 > 1E-12 || infP31P32 > 1E-12 || infP41P42 > 1E-12 || infP51P52 > 1E-12 || 57 infR11R21 > 1E-12 || infR11R31 > 1E-12 || infR41R51 > 1E-12 || 58 infR12R22 > 1E-12 || infR12R32 > 1E-12 || infR42R52 > 1E-12) 59 { 60 cout << endl << "infP11P12: " << infP11P12 << endl; 61 cout << endl << "infP21P22: " << infP21P22 << endl; 62 cout << endl << "infP31P32: " << infP31P32 << endl; 63 cout << endl << "infP41P42: " << infP41P42 << endl; 64 cout << endl << "infP51P52: " << infP51P52 << endl; 65 cout << endl << "infR11R21: " << infR11R21 << endl; 66 cout << endl << "infR11R31: " << infR11R31 << endl; 67 cout << endl << "infR11R51: " << infR41R51 << endl; 68 cout << endl << "infR12R22: " << infR12R22 << endl; 69 cout << endl << "infR12R32: " << infR12R32 << endl; 70 cout << endl << "infR12R52: " << infR42R52 << endl; 71 if (1) 72 { 73 cout << endl << "R: " << R << endl << endl; 74 cout << endl << "t: " << t << endl << endl; 75 cout << endl << "P: " << P << endl << endl; 76 //cout << endl << "R11: " << endl << R11 << endl; 77 //cout << endl << "R12: " << endl << R12 << endl; 78 //cout << endl << "R21: " << endl << R21 << endl; 79 //cout << endl << "R22: " << endl << R22 << endl; 80 //cout << endl << "R31: " << endl << R31 << endl; 81 //cout << endl << "R32: " << endl << R32 << endl; 82 //cout << endl << "R41: " << endl << R41 << endl; 83 //cout << endl << "R42: " << endl << R42 << endl; 84 //cout << endl << "R51: " << endl << R51 << endl; 85 //cout << endl << "R52: " << endl << R52 << endl; 86 } 87 cout << endl << "Press any key to continue" << endl; std::getchar(); 88 } 89 90 91 } 92 } 93 94 static void TestVis(int argc = 0, char** argv = 0) 95 { 96 #if 0 97 //0.GenerateData 98 auto randomv = [](int min, int max)->int {return rand() % ((max)-(min)+1) + (min); }; 99 Mat_<double> r({ 3, 1 }, { 1, 1, 1 }); r = r / cv::norm(r, NORM_L2) * 3.14 / 180 * randomv(15, 45); 100 Mat_<double> t({ 3, 1 }, { -double(randomv(10, 20)), double(randomv(2, 5)) , double(randomv(6, 9)) });//left camera x must be negative in right camera system which echoes opencv 101 Mat_<double> R(3, 3); cv::Rodrigues(r, R); 102 Matx31d P = P.randu(99, 999); 103 104 //3.CalcByDIYPinKB 105 Matx33d R31, R32; 106 Matx31d t3 = runRect(R31, R32, R, t); 107 double infP31P32 = cv::norm(R31 * P, R32 * R * P, NORM_INF); 108 109 //4.CalcByDIYUCM 110 111 viz::WCoordinateSystem worldCSys(1); 112 Mat_<cv::Affine3d> camPoses0, camPoses1, camPoses2, camPoses3; 113 camPoses0.push_back(cv::Affine3d(Matx33d::eye()));//the first or left camera 114 camPoses0.push_back(cv::Affine3d(R.t()));//the second or right camera 115 camPoses1.push_back(cv::Affine3d((R11).t(), Vec3d(1, 1, 1))); 116 camPoses1.push_back(cv::Affine3d((R12 * R).t(), Vec3d(1, 1, 1))); 117 camPoses2.push_back(cv::Affine3d((R21).t(), Vec3d(2, 2, 2))); 118 camPoses2.push_back(cv::Affine3d((R22 * R).t(), Vec3d(2, 2, 2))); 119 camPoses3.push_back(cv::Affine3d((R31).t(), Vec3d(3, 3, 3))); 120 camPoses3.push_back(cv::Affine3d((R32 * R).t(), Vec3d(3, 3, 3))); 121 122 viz::WTrajectoryFrustums camTraj0(camPoses0, Matx33d(1, 0, 1, 0, 1, 1, 0, 0, 1), 1, viz::Color::white()); 123 viz::WTrajectoryFrustums camTraj1(camPoses1, Matx33d(1, 0, 1, 0, 1, 1, 0, 0, 1), 1, viz::Color::green()); 124 viz::WTrajectoryFrustums camTraj2(camPoses2, Matx33d(1, 0, 1, 0, 1, 1, 0, 0, 1), 1, viz::Color::blue()); 125 viz::WTrajectoryFrustums camTraj3(camPoses3, Matx33d(1, 0, 1, 0, 1, 1, 0, 0, 1), 1, viz::Color::red()); 126 127 static viz::Viz3d viz3d(__FUNCTION__); 128 viz3d.showWidget("worldCSys", worldCSys); 129 viz3d.showWidget("camTraj", camTraj); 130 viz3d.spin(); 131 #endif 132 } 133 134 public: 135 static Matx31d runRect(Matx33d& R1, Matx33d& R2, Matx33d R, Matx31d t, Vec3d delta = Vec3d(0, 0, 0), double ratio1 = 0.5) 136 { 137 //1.Let left and right image plane be coplanar 138 Matx31d r; cv::Rodrigues(R, r); 139 Matx33d RCop1; cv::Rodrigues(ratio1 * r, RCop1); 140 Matx33d RCop2; cv::Rodrigues(ratio1 * r - r, RCop2); 141 Matx31d tCop = RCop2 * t; 142 143 //2.Let left and right image plane are epipolar 144 Matx31d xAxis(tCop(0) > 0 ? 1 : -1, 0, 0); 145 Matx31d rEpi = Vec3d(tCop.val).cross(Vec3d(xAxis.val)); 146 if (norm(rEpi, NORM_L2) > 0.) rEpi *= (acos(fabs(tCop(0)) / norm(tCop, NORM_L2)) / norm(rEpi, NORM_L2)); 147 Matx33d REpi; cv::Rodrigues(rEpi, REpi); 148 149 //3.Additional rotation for reprojection at expectation angle 150 Matx33d RDelta; cv::Rodrigues(delta, RDelta); 151 152 //4.Final transformation 153 R1 = RDelta * REpi * RCop1; 154 R2 = RDelta * REpi * RCop2; 155 Matx31d tFinal = R2 * t; 156 return tFinal; 157 } 158 159 static Matx31d runRectTmp(Matx33d R, Matx31d t, Matx33d& R1, Matx33d& R2, Vec3d delta = Vec3d(0, 0, 0), double ratio1 = 0) 160 { 161 #if 1 162 //1.Let left and right image plane be coplanar 163 Matx31d r; cv::Rodrigues(R, r); 164 Matx33d RCop1; cv::Rodrigues(r * ratio1, RCop1); 165 Matx33d RCop2; cv::Rodrigues(r * (1 - ratio1), RCop2); 166 Matx31d tCop = -RCop2.t() * t; 167 168 //2.Let left and right image plane are epipolar 169 Vec3d e1(tCop.val); 170 e1 = e1 / cv::norm(e1); 171 Vec3d e2(-e1(1), e1(0), 0); 172 e2 = e2 / cv::norm(e2); 173 Vec3d e3 = e1.cross(e2); 174 e3 = e3 / cv::norm(e3); 175 Matx33d REpi(e1(0), e1(1), e1(2), e2(0), e2(1), e2(2), e3(0), e3(1), e3(2)); 176 177 //3.Additional rotation for reprojection at expectation angle 178 Matx33d RDelta; cv::Rodrigues(delta, RDelta); 179 180 //4.Final transformation 181 R1 = RDelta * REpi * RCop1; 182 R2 = RDelta * REpi * RCop2.t(); 183 Matx31d tFinal = R2 * t; 184 return tFinal; 185 #else 186 //1.Let left and right image plane be coplanar 187 Matx33d RCop = R.t(); 188 Matx31d tCop = -R.t() * t;//here should not has minus sign and use tCop(0) sign later like cv::stereoRectify and cv::fisheye::stereoRectify 189 190 //2.Let left and right image plane are epipolar 191 Vec3d e1(tCop.val); 192 e1 = e1 / cv::norm(e1); 193 Vec3d e2(-e1(1), e1(0), 0); 194 e2 = e2 / cv::norm(e2); 195 Vec3d e3 = e1.cross(e2); 196 e3 = e3 / cv::norm(e3); 197 Matx33d REpi(e1(0), e1(1), e1(2), e2(0), e2(1), e2(2), e3(0), e3(1), e3(2)); 198 199 //3.Additional rotation for reprojection at expectation angle 200 Matx33d RDelta; cv::Rodrigues(delta, RDelta); 201 202 //4.Final transformation 203 R1 = RDelta * REpi; 204 R2 = RDelta * REpi * RCop; 205 Matx31d tFinal = R2 * t; 206 return tFinal; 207 #endif 208 } 209 210 static void omni_stereoRectifyModified(InputArray R, InputArray T, OutputArray R1, OutputArray R2) 211 { 212 CV_Assert((R.size() == Size(3, 3) || R.total() == 3) && (R.depth() == CV_32F || R.depth() == CV_64F)); 213 CV_Assert(T.total() == 3 && (T.depth() == CV_32F || T.depth() == CV_64F)); 214 215 Mat _R, _T; 216 if (R.size() == Size(3, 3)) 217 { 218 R.getMat().convertTo(_R, CV_64F); 219 } 220 else if (R.total() == 3) 221 { 222 Rodrigues(R.getMat(), _R); 223 _R.convertTo(_R, CV_64F); 224 } 225 226 T.getMat().reshape(1, 3).convertTo(_T, CV_64F); 227 228 R1.create(3, 3, CV_64F); 229 Mat _R1 = R1.getMat(); 230 R2.create(3, 3, CV_64F); 231 Mat _R2 = R2.getMat(); 232 233 Mat R21 = _R.t(); 234 Mat T21 = -_R.t() * _T; 235 236 Mat e1, e2, e3; 237 e1 = T21.t() / norm(T21); 238 e2 = Mat(Matx13d(T21.at<double>(1) * -1, T21.at<double>(0), 0.0)); 239 e2 = e2 / norm(e2); 240 e3 = e1.cross(e2); 241 e3 = e3 / norm(e3); 242 e1.copyTo(_R1.row(0)); 243 e2.copyTo(_R1.row(1)); 244 e3.copyTo(_R1.row(2)); 245 _R2 = _R1 * R21; 246 } 247 }; 248 249 int main(int argc, char** argv) { StereoRect::TestMe(argc, argv); return 0; } 250 int main1(int argc, char** argv) { StereoRect::TestMe(argc, argv); return 0; } 251 int main2(int argc, char** argv) { StereoRect::TestVis(argc, argv); return 0; }