Environment: Windows 10, IntelliJ IDEA 2016.3, Maven 3.3.9, Git, Scala 2.11.8, Java 1.8.0_101, sbt 0.13.12

Download:

# Run in Git Bash:

git clone https://github.com/apache/spark.git

cd spark

git tag

git checkout v2.1.0-rc5

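git checkout -b v2.1.0-rc5

Import into IDEA and start debugging:

File -> Open -> select the pom.xml in the root directory, then choose Open as Project

Build:

Wait for IDEA to finish indexing the files.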

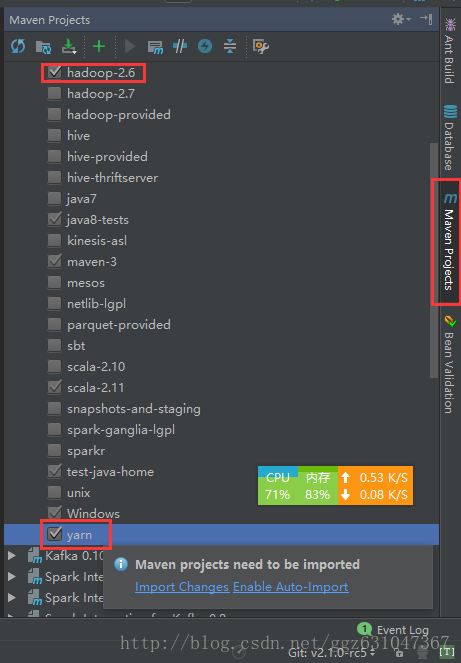

Open Maven Projects -> Profiles and check: hadoop-2.6, yarn

Click Import Changes, then wait again for IDEA to finish indexing.

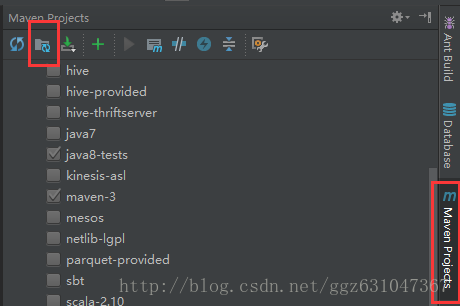

Maven Projects -> Generate Sources and Update Folders For All Projects, then build from the command line:

mvn -T 6 -Pyarn -Phadoop-2.6 -DskipTests clean package
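The Maven command above combines three kinds of flags: -T sets the number of build threads, each -P activates a profile (the same ones checked in the IDEA Profiles panel), and -DskipTests skips running the tests. As a minimal sketch, the helper below assembles the same command line from a thread count and a list of profiles. The name build_mvn_cmd is hypothetical and not part of Spark.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark): print the Maven command for a
# given thread count and list of profiles.
build_mvn_cmd() {
  local threads="$1"
  shift
  local cmd="mvn -T ${threads}"
  local profile
  for profile in "$@"; do
    cmd="${cmd} -P${profile}"   # one -P flag per profile
  done
  echo "${cmd} -DskipTests clean package"
}

# Prints the command used in this post.
build_mvn_cmd 6 yarn hadoop-2.6
```

Keeping the profile list in one place makes it easier to match the profiles checked in IDEA.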

Running bin/spark-shell fails with this error:

Exception in thread "main" java.lang.ExceptionInInitializerError

at org.apache.spark.package$.<init>(package.scala:91)

at org.apache.spark.package$.<clinit>(package.scala)

at org.apache.spark.repl.SparkILoop.printWelcome(SparkILoop.scala:81)

at scala.tools.nsc.interpreter.ILoop$$anonfun$process$1.apply$mcZ$sp(ILoop.scala:921)

at scala.tools.nsc.interpreter.ILoop$$anonfun$process$1.apply(ILoop.scala:909)

at scala.tools.nsc.interpreter.ILoop$$anonfun$process$1.apply(ILoop.scala:909)

at scala.reflect.internal.util.ScalaClassLoader$.savingContextLoader(ScalaClassLoader.scala:97)

at scala.tools.nsc.interpreter.ILoop.process(ILoop.scala:909)

at org.apache.spark.repl.Main$.doMain(Main.scala:68)

at org.apache.spark.repl.Main$.main(Main.scala:51)

at org.apache.spark.repl.Main.main(Main.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:738)

at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:187)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:212)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:126)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Caused by: org.apache.spark.SparkException: Error while locating file spark-version-info.properties

at org.apache.spark.package$SparkBuildInfo$.liftedTree1$1(package.scala:75)

at org.apache.spark.package$SparkBuildInfo$.<init>(package.scala:61)

at org.apache.spark.package$SparkBuildInfo$.<clinit>(package.scala)

... 20 more

Caused by: java.lang.NullPointerException

at java.util.Properties$LineReader.readLine(Properties.java:434)

at java.util.Properties.load0(Properties.java:353)

at java.util.Properties.load(Properties.java:341)

at org.apache.spark.package$SparkBuildInfo$.liftedTree1$1(package.scala:64)

Solution:

Look at the pom.xml of spark-core and you will find:

<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-antrun-plugin</artifactId>
  <executions>
    <execution>
      <phase>generate-resources</phase>
      <configuration>
        <!-- Execute the shell script to generate the spark build information. -->
        <target>
          <exec executable="bash">
            <arg value="${project.basedir}/../build/spark-build-info"/>
            <arg value="${project.build.directory}/extra-resources"/>
            <arg value="${project.version}"/>
          </exec>
        </target>
      </configuration>
      <goals>
        <goal>run</goal>
      </goals>
    </execution>
  </executions>

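</plugin>

Because of a file path problem, this script fails to run here, so spark-version-info.properties is never generated. Run the script manually instead (the spark-tags_2.11 argument is what I worked out myself from the variables). Since no Linux emulation environment is installed, run the command in Git Bash:

build/spark-build-info /f/spark2/spark/core/target/extra-resources spark-tags_2.11

In spark\assembly\target\scala-2.11\jars, locate spark-core_2.11-2.2.0-SNAPSHOT.jar.

Check whether spark-version-info.properties exists in the root of that jar. If it does not, add it, then try bin/spark-shell again.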
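If the script cannot be run at all, the properties file can also be written by hand. The sketch below is an assumption based on the keys build/spark-build-info appears to emit (version, user, revision, branch, date, url); check the key names against the script in your own checkout. The function name write_version_info and the fallback value "unknown" are illustrative choices, not part of Spark.

```shell
#!/usr/bin/env bash
# Hypothetical fallback: write spark-version-info.properties by hand.
# Usage: write_version_info <resource-dir> <version>
write_version_info() {
  local dir="$1" version="$2"
  mkdir -p "$dir"
  {
    echo "version=${version}"
    echo "user=${USER:-unknown}"
    # git may fail outside a repository; fall back to "unknown"
    echo "revision=$(git rev-parse HEAD 2>/dev/null || echo unknown)"
    echo "branch=$(git rev-parse --abbrev-ref HEAD 2>/dev/null || echo unknown)"
    echo "date=$(date -u +%Y-%m-%dT%H:%M:%SZ)"
    echo "url=$(git config --get remote.origin.url 2>/dev/null || echo unknown)"
  } > "${dir}/spark-version-info.properties"
}

# Example (placeholder arguments):
#   write_version_info core/target/extra-resources <version>
```

The generated file still has to end up in the root of the spark-core jar, as described above.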

Set a breakpoint and run org.apache.spark.examples.SparkPi, adding -Dspark.master=local[*] to the VM options. Click Run and wait for the build to finish.

Error:

Exception in thread "main" java.lang.NoClassDefFoundError: scala/collection/Seq

at org.apache.spark.examples.SparkPi.main(SparkPi.scala)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at com.intellij.rt.execution.application.AppMain.main(AppMain.java:147)

Caused by: java.lang.ClassNotFoundException: scala.collection.Seq

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

... 6 more

Process finished with exit code 1

Solution:

Menu -> File -> Project Structure -> Modules -> spark-examples_2.11 -> Dependencies -> add the jars directory as a dependency: {spark dir}/spark/assembly/target/scala-2.11/jars/
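scala.collection.Seq belongs to scala-library, so the NoClassDefFoundError above means that jar is missing from the run configuration's classpath. Before adding the jars directory as a dependency, it can help to confirm that it actually contains a scala-library jar. This is a minimal sketch: the function name has_scala_library is hypothetical, and the path in the usage comment is a placeholder.

```shell
#!/usr/bin/env bash
# Hypothetical check: succeed if the directory contains a scala-library jar.
has_scala_library() {
  local dir="$1" jar
  for jar in "$dir"/scala-library-*.jar; do
    # If the glob matches nothing it stays literal, so test for a real file.
    [ -f "$jar" ] && return 0
  done
  return 1
}

# Usage (placeholder path):
#   has_scala_library "{spark dir}/spark/assembly/target/scala-2.11/jars" \
#     && echo "scala-library found" || echo "scala-library missing"
```

If the check reports the jar as missing, the Maven package step most likely did not complete.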

Error:

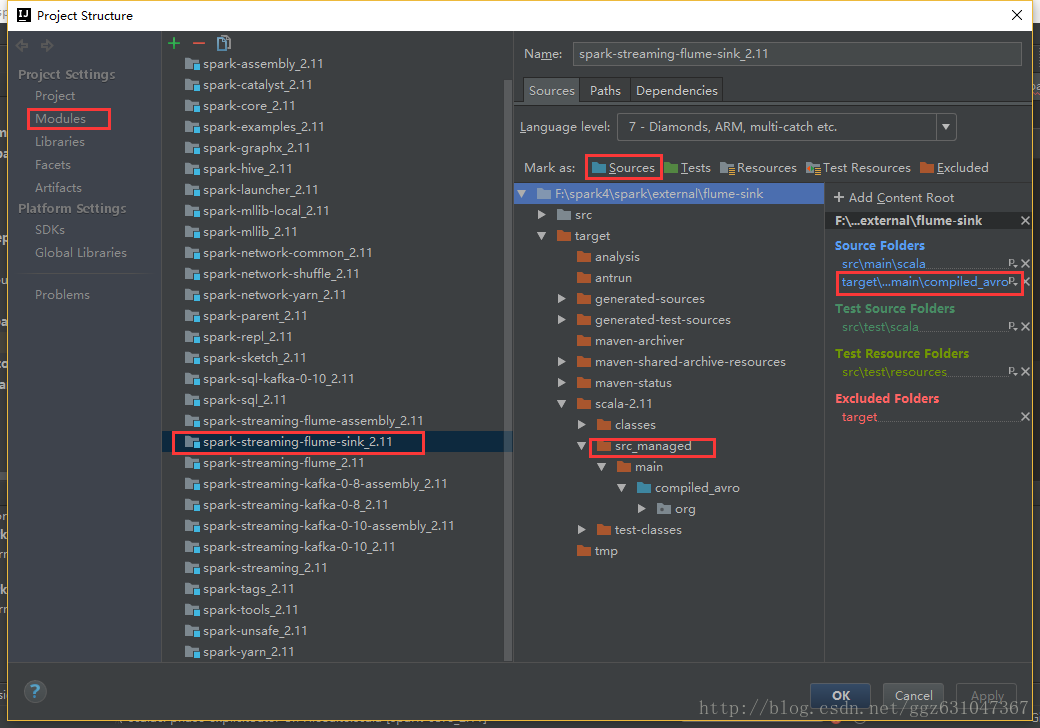

Error:(45, 66) not found: type SparkFlumeProtocol

Solution:

Mark the compiled_avro directory of the spark-streaming-flume-sink_2.11 subproject as a source directory as well, then wait for the build to finish.
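To locate the directory to mark, a search from the project root is enough. The sketch below assumes the generated Avro sources live in a directory literally named compiled_avro somewhere under the checkout; find_compiled_avro is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list every directory named compiled_avro under a root.
find_compiled_avro() {
  local root="${1:-.}"
  find "$root" -type d -name compiled_avro
}

# Usage: run from the Spark checkout, then mark each printed directory
# as a source root in IDEA.
#   find_compiled_avro .
```

If nothing is printed, the sources have probably not been generated yet, so rerun Generate Sources and Update Folders first.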