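Installing a Kubernetes cluster with kubeadm

Virtual machine environment

- Linux version: Debian 10 (buster)

- Virtualization software: VirtualBox

- Kubernetes version: 1.19.7

TIP:

- Configure the network so that the host and the VMs can reach each other and the Internet.

- Change the hostname; every machine must have a unique hostname.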

Cluster initialization

Environment preparation

# Install base packages

apt-get update && apt-get install -y make gcc g++ lsof curl wget apt-transport-https ca-certificates gnupg2 software-properties-common

# Install IPVS tools

apt-get install -y ipvsadm ipset conntrack

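# Disable swap (by default the kubelet refuses to start while swap is enabled)

# Disable it for the running system

swapoff -a

# Disable it permanently: back up /etc/fstab, then comment out every active swap entry

test -f /etc/fstab.bak || cp /etc/fstab /etc/fstab.bak

sed -i '/^[^#].*\sswap\s/s/^/#/' /etc/fstab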
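To double-check the result, the sketch below tests both places swap can come from: the running system and fstab. `swap_is_disabled` is a hypothetical helper; it assumes the usual `/proc/swaps` layout (one header line) and that the third fstab field is the filesystem type.

```shell
# Hypothetical helper: succeed only if no swap is active and no
# uncommented swap entry remains in fstab. Both paths can be overridden.
swap_is_disabled() {
  local swaps="${1:-/proc/swaps}" fstab="${2:-/etc/fstab}"

  # /proc/swaps always starts with a header line; more lines mean active swap
  if [ "$(wc -l < "$swaps")" -gt 1 ]; then
    echo "swap is still active" >&2
    return 1
  fi

  # an uncommented entry whose type (3rd field) is swap would re-enable it at boot
  if awk '$1 !~ /^#/ && $3 == "swap" { found = 1 } END { exit !found }' "$fstab"; then
    echo "swap entry still present in $fstab" >&2
    return 1
  fi

  echo "swap disabled"
}
```

Called without arguments it checks `/proc/swaps` and `/etc/fstab` directly.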

# Kernel configuration: load the modules required by bridge netfilter and IPVS

echo "Loading kernel modules"

# Debian 10 ships kernel 4.19, where nf_conntrack_ipv4 was merged into nf_conntrack
for mod in br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack ipt_set xt_ipvs xt_u32; do
  modprobe -- "$mod"
done
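`modprobe` only lasts until the next reboot. On systemd-based Debian 10, one option is to list the modules in a file under `/etc/modules-load.d/`, which `systemd-modules-load` reads at boot. In this sketch the helper names and the file name `k8s.conf` are hypothetical, and the check assumes a loaded module shows up as a directory `/sys/module/<name>`.

```shell
# Hypothetical helper: write one module name per line so that
# systemd-modules-load loads them again at boot.
persist_modules() {
  local conf="$1"
  shift
  printf '%s\n' "$@" > "$conf"
}

# Hypothetical helper: print each listed module that is not loaded and
# return 1 if any is missing. SYS_MODULE_DIR is an assumed override for testing.
missing_modules() {
  local dir="${SYS_MODULE_DIR:-/sys/module}" m rc=0
  for m in "$@"; do
    if [ ! -d "$dir/$m" ]; then
      echo "$m"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `persist_modules /etc/modules-load.d/k8s.conf br_netfilter ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`, and afterwards `missing_modules br_netfilter ip_vs nf_conntrack` prints nothing when everything is loaded.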

# Kernel forwarding settings

cat > /etc/sysctl.d/k8s.conf <<EOF
net.ipv4.ip_forward = 1
net.ipv4.ip_nonlocal_bind = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv6.conf.all.disable_ipv6 = 1
net.ipv6.conf.default.disable_ipv6 = 1
EOF

sysctl -p /etc/sysctl.d/k8s.conf
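To re-check these settings later (for instance after a reboot, since the `net.bridge.*` keys only exist once `br_netfilter` is loaded), the hypothetical helper below compares each `key = value` line of the file with the live value under `/proc/sys`. It is a sketch that assumes single-value keys; `PROC_SYS_ROOT` is an assumed override for testing.

```shell
# Hypothetical helper: compare every "key = value" line of a sysctl
# config file with the live value under /proc/sys.
check_sysctl_conf() {
  local conf="$1" root="${PROC_SYS_ROOT:-/proc/sys}" key value actual rc=0
  while IFS='=' read -r key value; do
    # drop all whitespace from key and value
    key="${key//[[:space:]]/}"
    value="${value//[[:space:]]/}"
    # skip blank lines and comments
    case "$key" in ''|'#'*|';'*) continue ;; esac
    # net.ipv4.ip_forward -> <root>/net/ipv4/ip_forward
    actual="$(cat "$root/${key//.//}" 2>/dev/null)" || actual="<missing>"
    if [ "$actual" != "$value" ]; then
      echo "$key: expected $value, got $actual"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}
```

Usage: `check_sysctl_conf /etc/sysctl.d/k8s.conf && echo "all settings applied"`.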

Install Docker, kubeadm, kubelet and kubectl

K8S_VERSION=1.19.7

MIRROR_SOURCE="http://mirrors.aliyun.com"

sourceListFile="/etc/apt/sources.list"

wget ${MIRROR_SOURCE}/docker-ce/linux/debian/gpg -O /tmp/docker.key && apt-key add /tmp/docker.key

wget ${MIRROR_SOURCE}/kubernetes/apt/doc/apt-key.gpg -O /tmp/k8s.key && apt-key add /tmp/k8s.key

apt-add-repository "deb ${MIRROR_SOURCE}/docker-ce/linux/debian $(lsb_release -sc | tr -d '\n') stable"

apt-add-repository "deb ${MIRROR_SOURCE}/kubernetes/apt kubernetes-xenial main"

apt-get update

apt-get install -y docker-ce kubeadm=${K8S_VERSION}-00 kubelet=${K8S_VERSION}-00 kubectl=${K8S_VERSION}-00

systemctl enable kubelet

systemctl enable docker

Configure Docker

# registry-mirrors can be replaced with your own Aliyun accelerator address

cat > /etc/docker/daemon.json <<EOF

{

"data-root": "/raid/docker",

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

],

"registry-mirrors": ["http://hub-mirror.c.163.com"]

}

EOF

systemctl daemon-reload && systemctl restart docker

# Replace ashin with your own account

echo "Adding the user account to the docker group"

usermod -a -G docker ashin

su ashin -c "id"
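kubeadm's preflight checks warn when Docker uses the `cgroupfs` driver and recommend `systemd`, which `daemon.json` above sets through `exec-opts`. A minimal check after the restart, built on `docker info --format '{{.CgroupDriver}}'`; the function name is hypothetical:

```shell
# Hypothetical helper: verify that Docker runs with the expected cgroup driver
# (systemd by default, matching "exec-opts" in daemon.json).
check_docker_cgroup_driver() {
  local want="${1:-systemd}" got
  got="$(docker info --format '{{.CgroupDriver}}')" || return 1
  if [ "$got" != "$want" ]; then
    echo "docker cgroup driver is '$got', expected '$want'" >&2
    return 1
  fi
  echo "docker cgroup driver: $got"
}
```

If it reports `cgroupfs`, `daemon.json` was most likely not picked up and Docker needs another restart.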

[Optional] Install Ceph

# Install Ceph

echo "Installing Ceph"

sourceStr="deb ${MIRROR_SOURCE}/ceph/debian-octopus $(lsb_release -sc | tr -d '\n') main"

# Back up sources.list with a timestamp suffix

cp "${sourceListFile}" "${sourceListFile}.$(date +%Y%m%d_%H%M%S)"

apt-add-repository "${sourceStr}"

# Import the Ceph release key before running apt-get update, otherwise the new repository fails the signature check

wget -q -O- ${MIRROR_SOURCE}/ceph/keys/release.asc | apt-key add -

env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q update

env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q --no-install-recommends install ca-certificates apt-transport-https

env DEBIAN_FRONTEND=noninteractive DEBIAN_PRIORITY=critical apt-get --assume-yes -q --no-install-recommends install -o Dpkg::Options::=--force-confnew ceph-common ceph-fuse

ceph --version

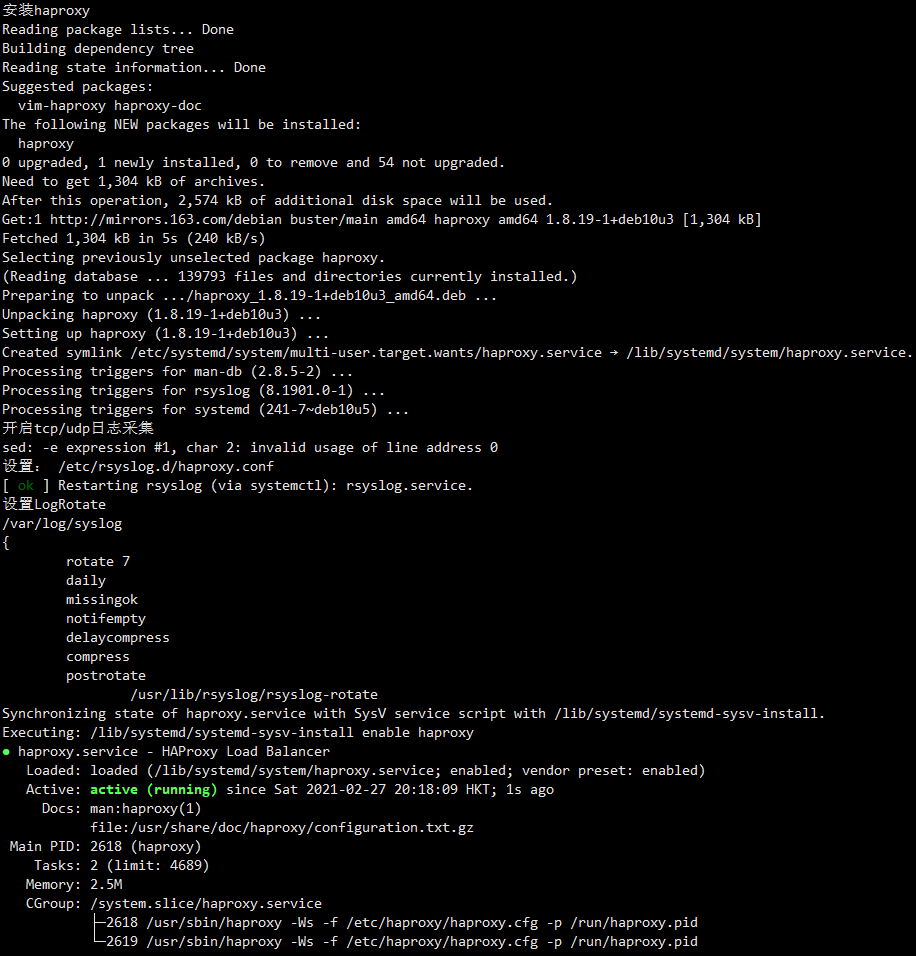

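Install haproxy and keepalived

Haproxy

Change the backend address to the real master address. Production environments usually run several masters to keep the cluster highly available.

This is a local environment, so there is only one master for now.

echo "Installing haproxy"

apt-get install -y haproxy

mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.bak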

cat > /etc/haproxy/haproxy.cfg << EOF

global

log 127.0.0.1 local0

log 127.0.0.1 local1 notice

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

daemon

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

timeout connect 10s

timeout client 1m

timeout server 1m

#---------------------------------------------------------------------

# kubernetes apiserver frontend which proxies to the backends

#---------------------------------------------------------------------

frontend kubernetes-apiserver

mode tcp

bind :8443

option tcplog

default_backend kubernetes-apiserver

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend kubernetes-apiserver

mode tcp

balance roundrobin

server master-api 192.168.1.51:6443 check

# additional master addresses

# server master2-api 192.168.1.52:6443 check

#---------------------------------------------------------------------

# collect haproxy statistics

#---------------------------------------------------------------------

listen stats

bind :9090

mode http

balance

stats uri /admin?stats

stats auth admin:admin123

stats admin if TRUE

EOF

echo "Enabling TCP/UDP log reception in rsyslog"

rsyslogConf="/etc/rsyslog.conf"

# Single quotes keep the double quotes of the rsyslog directives intact; on Debian the input lines also carry a port="514" argument, so only their prefix is matched

sed -i 's/^#module(load="imudp")/module(load="imudp")/' ${rsyslogConf}

sed -i 's/^#input(type="imudp"/input(type="imudp"/' ${rsyslogConf}

sed -i 's/^#module(load="imtcp")/module(load="imtcp")/' ${rsyslogConf}

sed -i 's/^#input(type="imtcp"/input(type="imtcp"/' ${rsyslogConf}

# Print lines 7-22 to check the result

sed -n '7,22p' ${rsyslogConf}

echo "Configuring /etc/rsyslog.d/haproxy.conf"

echo "local0.* /var/log/haproxy/haproxy.log" > /etc/rsyslog.d/haproxy.conf

echo "local1.* /var/log/haproxy/haproxy.log" >> /etc/rsyslog.d/haproxy.conf

/etc/init.d/rsyslog restart

echo "Configuring logrotate"

rsyslogRotate=/etc/logrotate.d/rsyslog

# Insert haproxy.log before the /var/log/syslog line so it is rotated together with it

sed -i '\|^/var/log/syslog$|i /var/log/haproxy/haproxy.log' ${rsyslogRotate}

iptables -A INPUT -i lo -j ACCEPT

systemctl enable haproxy.service

systemctl start haproxy.service

systemctl status haproxy.service

ss -lnt | grep -E "8443|9090"

Screenshot:

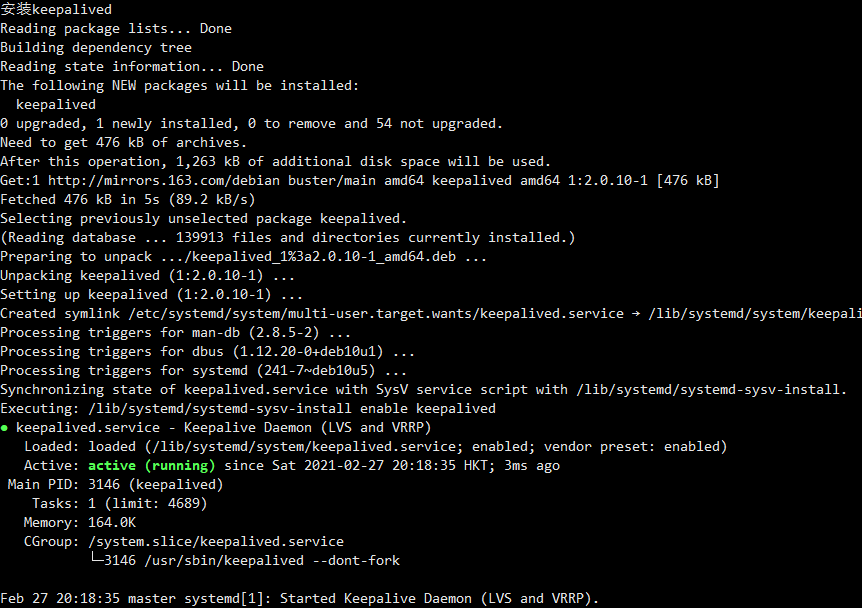
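With several masters, the `backend kubernetes-apiserver` section needs one `server` line per control-plane node. The hypothetical helper below prints those lines from a list of IPs; the `masterN-api` names and the default port 6443 are assumptions chosen to match the config above.

```shell
# Hypothetical helper: print one haproxy "server" line per control-plane IP,
# for the "backend kubernetes-apiserver" section. Port defaults to 6443.
haproxy_backend_servers() {
  local port="${APISERVER_PORT:-6443}" i=1 ip
  for ip in "$@"; do
    printf 'server master%d-api %s:%s check\n' "$i" "$ip" "$port"
    i=$((i + 1))
  done
}
```

For example, `haproxy_backend_servers 192.168.1.51 192.168.1.52`; after pasting the lines into the backend section, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the file for errors before `systemctl restart haproxy`.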

Keepalived

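TIP:

- Set interface in the configuration to this machine's network interface (for example enp0s3); use ip addr to list the interfaces.

- Choose the virtual IP (virtual_ipaddress) to match your own network; the cluster will be accessed through this VIP later.

echo "Installing keepalived"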

apt-get install -y keepalived

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

default_interface enp0s3

script_user root

enable_script_security

}

vrrp_script check_haproxy {

script "/usr/bin/killall -0 haproxy"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface enp0s3

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass d0cker

}

virtual_ipaddress {

192.168.1.71

}

track_script {

check_haproxy

}

}

EOF

systemctl enable keepalived.service

systemctl start keepalived.service

systemctl status keepalived.service

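How `weight -2` plays out: when `check_haproxy` fails (after `fall 10` consecutive failures at `interval 3`, so roughly 30 seconds), keepalived lowers this node's priority by 2, from 100 to 98. A BACKUP node would therefore need a priority between 98 and 100 (for example 99) to take over the VIP. The function below is only a hypothetical illustration of that arithmetic, not part of keepalived.

```shell
# Hypothetical illustration of keepalived's vrrp_script weight rule:
#   weight < 0: priority drops by |weight| while the script is failing
#   weight > 0: priority rises by weight while the script succeeds
# Arguments: base priority, weight, and "yes"/"no" for "script succeeding".
keepalived_effective_priority() {
  local base="$1" weight="$2" script_ok="$3"
  if [ "$weight" -lt 0 ] && [ "$script_ok" != yes ]; then
    echo $((base + weight))
  elif [ "$weight" -gt 0 ] && [ "$script_ok" = yes ]; then
    echo $((base + weight))
  else
    echo "$base"
  fi
}
```

`keepalived_effective_priority 100 -2 no` prints 98, which a healthy BACKUP with priority 99 outranks.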

Configure the firewall

TIP: in a local environment you can also simply turn the firewall off.

iptables -A INPUT -p tcp --dport 6443 -j ACCEPT

iptables -A INPUT -p tcp --dport 2379:2380 -j ACCEPT

iptables -A INPUT -p tcp --dport 10250 -j ACCEPT

iptables -A INPUT -p tcp --dport 10251 -j ACCEPT

iptables -A INPUT -p tcp --dport 10252 -j ACCEPT

iptables -A INPUT -p tcp --dport 30000:32767 -j ACCEPT

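# Save the current rules. They are not reloaded automatically at boot; one option is the iptables-persistent package, which restores /etc/iptables/rules.v4.

iptables-save > /etc/iptables.rules

# Load the saved rules again (for example after a reboot)

iptables-restore < /etc/iptables.rules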
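The rules above cover the Kubernetes ports only. On the masters in this setup, haproxy (8443, plus 9090 for the stats page) and keepalived (VRRP, IP protocol 112) also need inbound traffic. The hypothetical helper below appends all of them; with `DRY_RUN=1` it only prints the commands so they can be reviewed first.

```shell
# Hypothetical helper: open the Kubernetes control-plane ports plus the
# haproxy (8443, 9090) and keepalived (VRRP) traffic used in this setup.
# With DRY_RUN=1 the iptables commands are printed instead of executed.
open_master_ports() {
  local run=() port
  if [ "${DRY_RUN:-0}" = 1 ]; then
    run=(echo)
  fi
  for port in 6443 2379:2380 10250 10251 10252 8443 9090 30000:32767; do
    "${run[@]}" iptables -A INPUT -p tcp --dport "$port" -j ACCEPT
  done
  # VRRP is IP protocol 112; keepalived sends its advertisements with it
  "${run[@]}" iptables -A INPUT -p 112 -j ACCEPT
}
```

Run `DRY_RUN=1 open_master_ports` to review, then `open_master_ports` as root to apply.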

Initialize the cluster

kubeadm configuration

TIP: save the following as kubeadm-config.yaml and set controlPlaneEndpoint in ClusterConfiguration to your VIP address (with the haproxy port 8443).

apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
nodeRegistration:
  name: master
  ignorePreflightErrors:
  - DirAvailable--etc-kubernetes-manifests
  # taints:
  # - effect: NoSchedule
  #   key: node-role.kubernetes.io/master
localAPIEndpoint:
  advertiseAddress: 0.0.0.0
  bindPort: 6443
---
# kubeadm-config.yaml
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.19.7
imageRepository: registry.aliyuncs.com/google_containers
controlPlaneEndpoint: "192.168.1.71:8443"
etcd:
  local:
    dataDir: "/raid/etcd"
    extraArgs:
      listen-metrics-urls: http://0.0.0.0:2381
networking:
  serviceSubnet: "10.254.0.0/16"
  # Pod IP range; it should not overlap with the host network
  # (note that 172.10.0.0/16 lies outside the RFC 1918 private range 172.16.0.0/12)
  podSubnet: "172.10.0.0/16"
  dnsDomain: "cluster.local"
apiServer:
  timeoutForControlPlane: 4m0s
  extraArgs:
    # etcd-servers: "https://192.168.1.51:2379"
    service-node-port-range: "30000-32767"
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
resolvConf: /etc/resolv.conf
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"

```shell

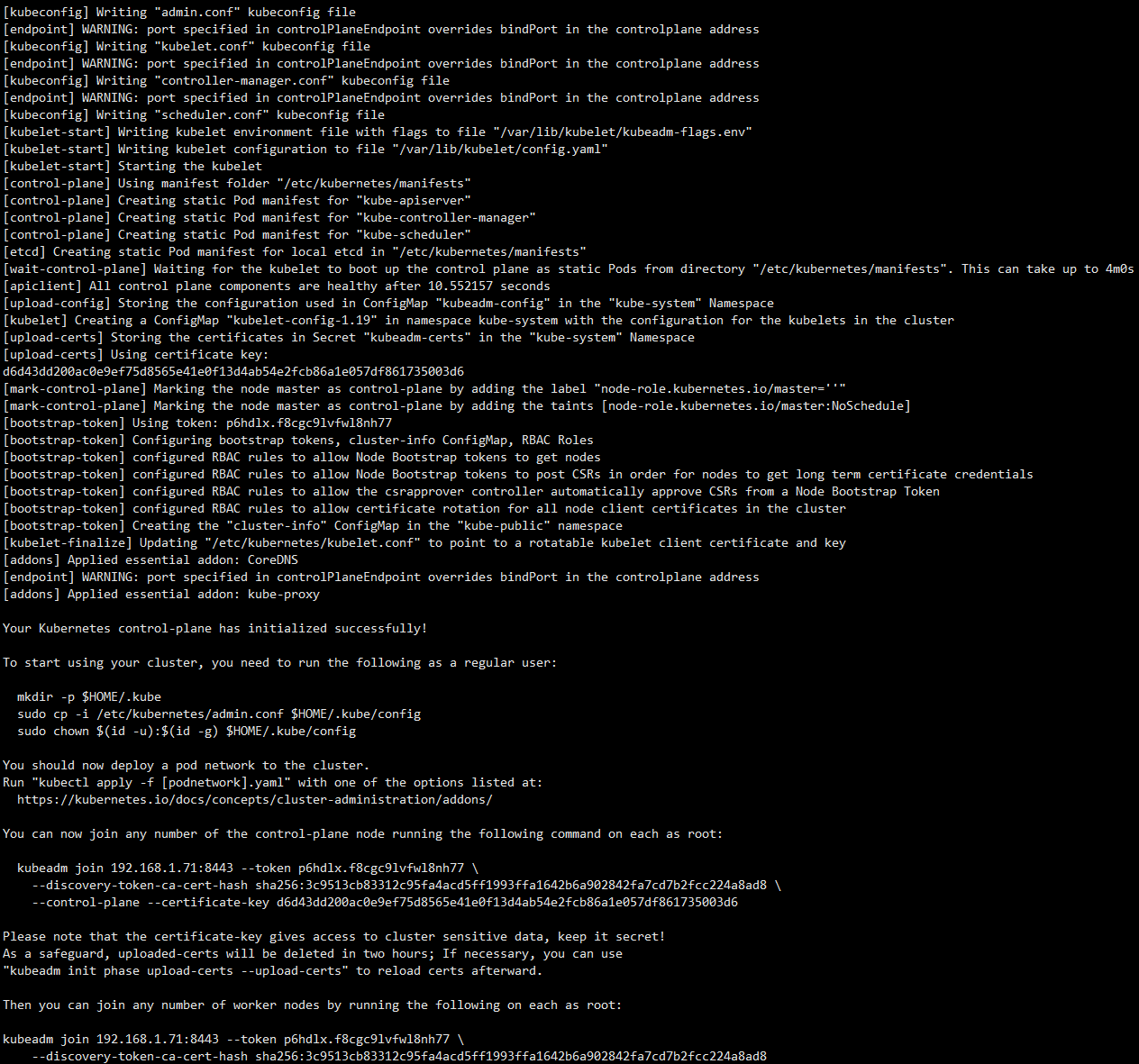

### 初始化master节点

```shell

kubeadm init --config=kubeadm-config.yaml --upload-certs

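TIP:

- Initialization takes a while.

- If it fails, the most likely cause is that the images could not be pulled; try kubeadm reset and then run the initialization again.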
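Since most init failures come from image pulls, one option is to pull the images before `kubeadm init` with `kubeadm config images pull --config kubeadm-config.yaml`, retrying a few times on timeouts. The generic `retry` helper below is a hypothetical sketch; `RETRY_DELAY` (seconds between attempts) is an assumed knob.

```shell
# Hypothetical helper: run a command up to N times, sleeping RETRY_DELAY
# seconds (default 5) between failed attempts.
retry() {
  local attempts="$1" delay="${RETRY_DELAY:-5}" n=1
  shift
  until "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      echo "failed after $n attempts: $*" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Usage: pre-pull the control-plane images listed by the kubeadm config
# retry 3 kubeadm config images pull --config kubeadm-config.yaml
```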

At this point kubectl get node shows the node as NotReady, because no network plugin has been installed yet.

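Install the network plugin

Download Calico

curl -O https://docs.projectcalico.org/v3.17/manifests/calico.yaml

In the calico-node container of the DaemonSet, set the environment variable CALICO_IPV4POOL_CIDR to the same value as podSubnet above; here that is 172.10.0.0/16.

env:
  # Pod CIDR; must match podSubnet in the kubeadm config
  - name: CALICO_IPV4POOL_CIDR
    value: "172.10.0.0/16"
  # With several NICs, pin the interface; otherwise this can be omitted
  # (Calico's default method, first-found, picks the first valid interface)
  - name: IP_AUTODETECTION_METHOD
    value: "interface=enp0s3"
  - name: IP6_AUTODETECTION_METHOD
    value: "interface=enp0s3"
  # Hosts at another site need the API server address set explicitly;
  # otherwise the three settings below can be omitted
  - name: KUBERNETES_SERVICE_HOST
    value: "192.168.1.71"
  - name: KUBERNETES_SERVICE_PORT
    value: "6443"
  - name: KUBERNETES_SERVICE_PORT_HTTPS
    value: "6443"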
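The v3.17 calico.yaml ships CALICO_IPV4POOL_CIDR commented out. Assuming it appears exactly as `# - name: CALICO_IPV4POOL_CIDR` followed by `#   value: "192.168.0.0/16"` (check your copy first), the hypothetical helper below uncomments it and sets the CIDR with GNU sed instead of editing by hand.

```shell
# Hypothetical helper (GNU sed): uncomment CALICO_IPV4POOL_CIDR in calico.yaml
# and set its value. Assumes the manifest ships the two lines as
#   # - name: CALICO_IPV4POOL_CIDR
#   #   value: "192.168.0.0/16"
set_calico_pool_cidr() {
  local manifest="$1" cidr="$2"
  sed -i \
    -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
    -e "/- name: CALICO_IPV4POOL_CIDR/{n;s|# \\( *value:\\).*|\\1 \"${cidr}\"|}" \
    "$manifest"
}
```

Usage: `set_calico_pool_cidr calico.yaml 172.10.0.0/16`, then `grep -A1 CALICO_IPV4POOL_CIDR calico.yaml` to confirm. The interface settings still have to be added by hand.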

Deploy Calico

kubectl apply -f calico.yaml

TIP:

Image pulls may time out during installation:

Get https://registry-1.docker.io/v2/calico/node/manifests/sha256:xxx net/http: TLS handshake timeout

Possible fixes:

- Manually pull the images referenced in calico.yaml.

- Try docker login or docker logout.

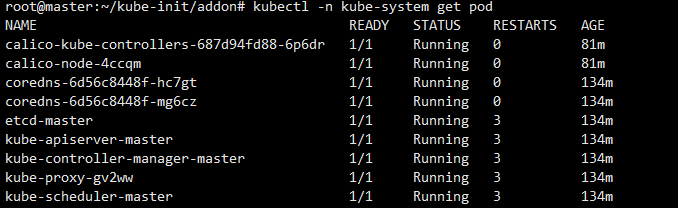

Finally, check that the pods in kube-system are running normally:

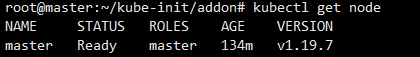

After a successful installation the master turns Ready after a while, which means the setup worked:

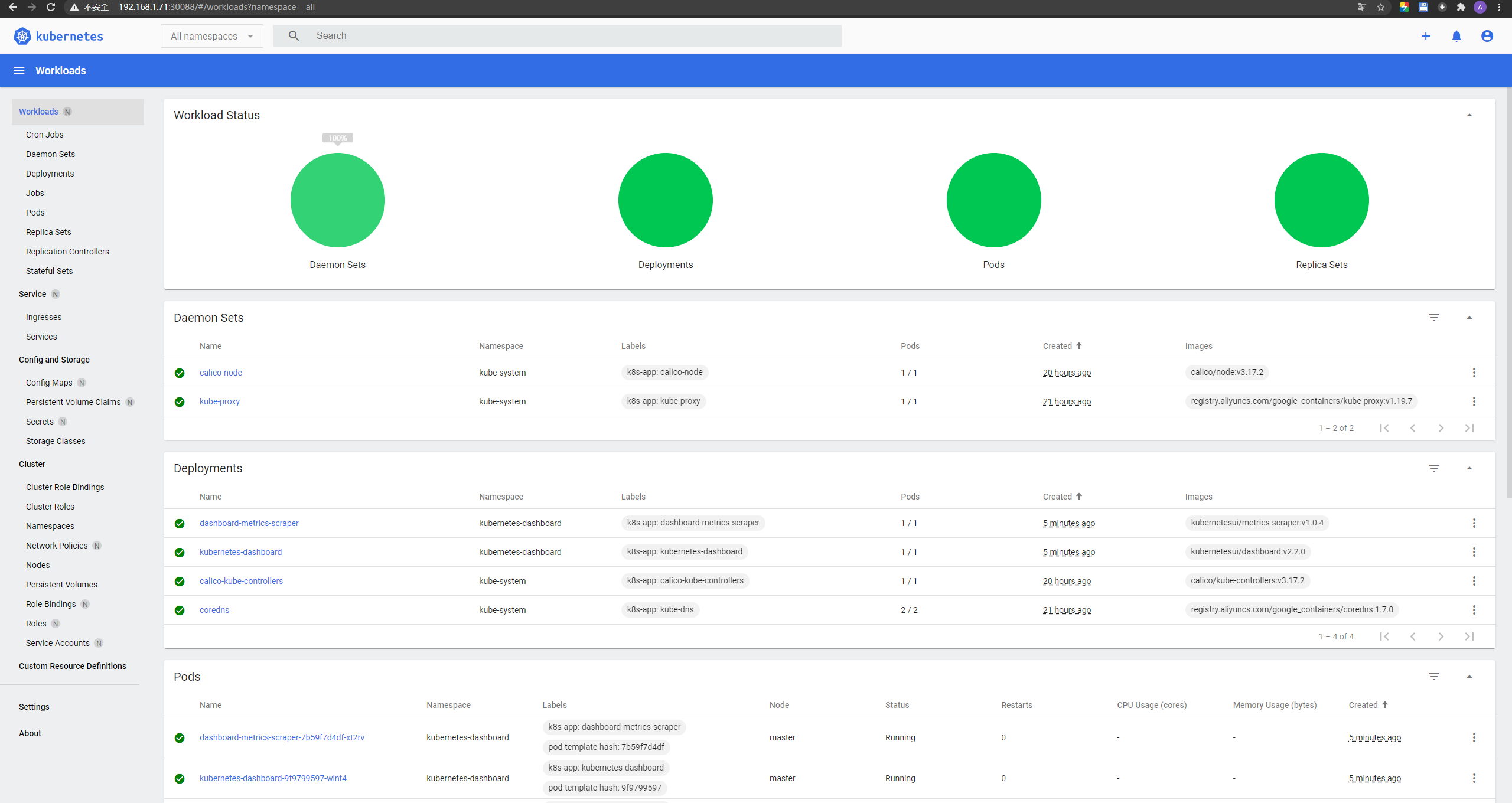

Install the Dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0/aio/deploy/recommended.yaml

Change the kubernetes-dashboard Service to type NodePort so that it can be reached from the host.

...

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30088
  selector:
    k8s-app: kubernetes-dashboard

...

Then run kubectl apply -f recommended.yaml.

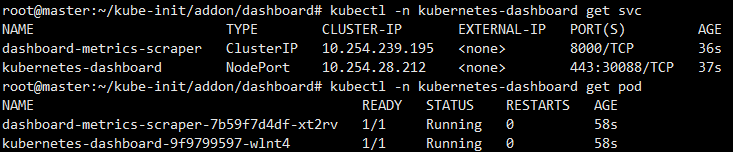

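Check the resources:

From the host, open the Dashboard through the cluster VIP and the NodePort over HTTPS (here https://192.168.1.71:30088):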

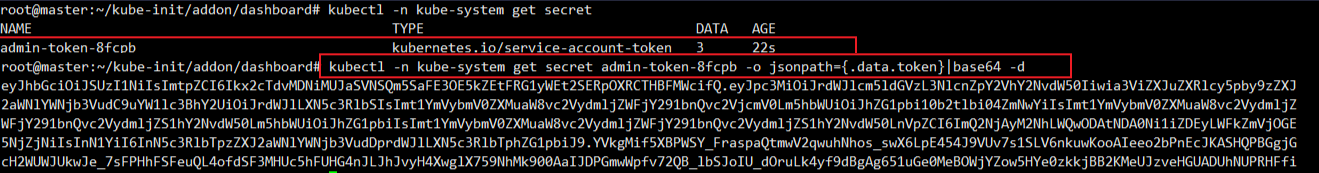

Next, create a login token:

# admin-token.yaml

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

kubectl apply -f admin-token.yaml

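Get the token and base64-decode it. The suffix of the secret name (n99ll here) is generated randomly; find yours with kubectl -n kube-system get secret | grep admin-token:

kubectl -n kube-system get secret admin-token-n99ll -o jsonpath='{.data.token}' | base64 -d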

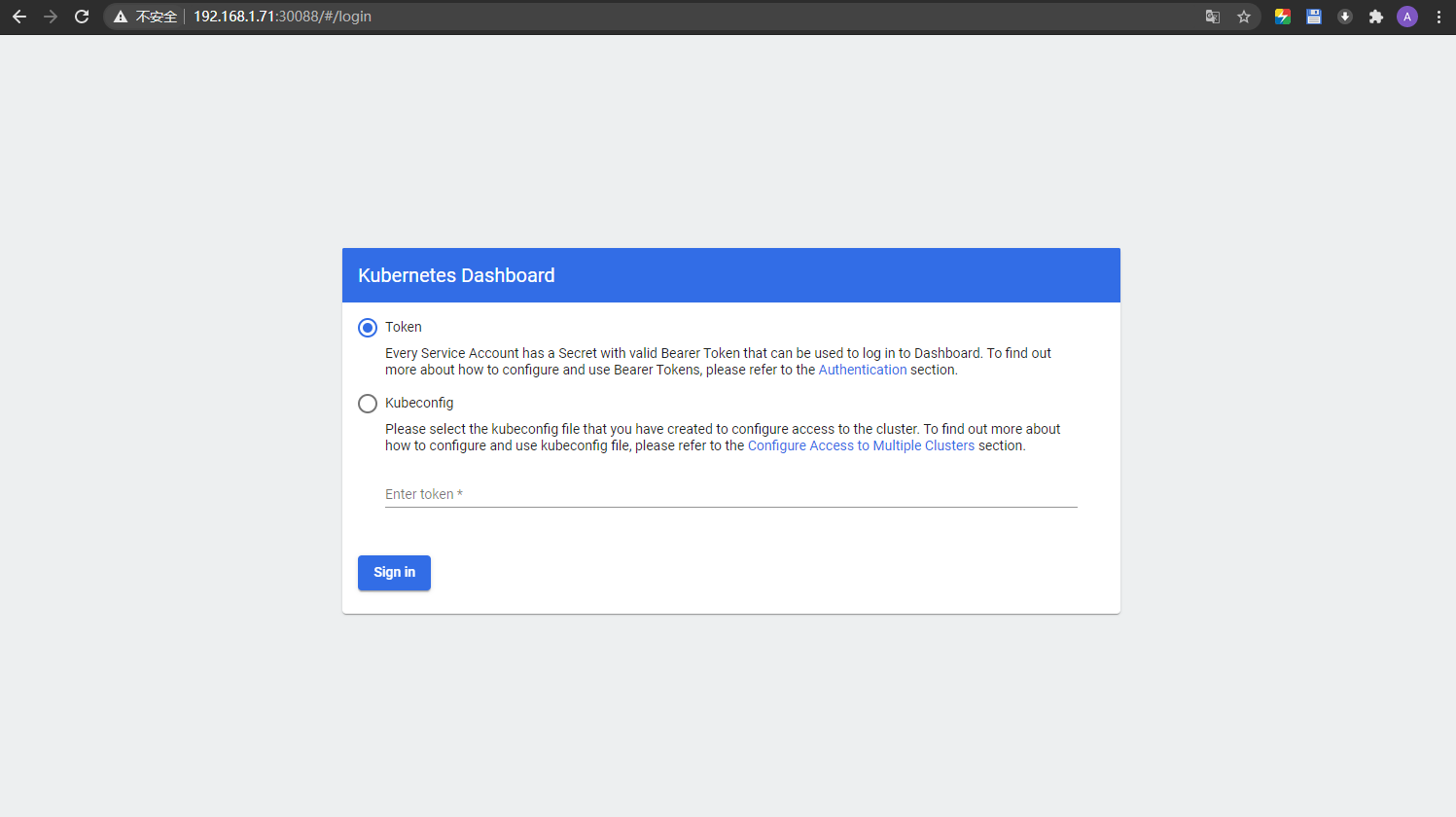

Paste the token into the Dashboard login page:
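Because the secret name suffix is random, the token can also be looked up through the ServiceAccount. On 1.19 the auto-created token secret is referenced from the ServiceAccount's `secrets` field; the helper name below is hypothetical.

```shell
# Hypothetical helper: print the decoded token of a ServiceAccount.
# Relies on the ServiceAccount's .secrets list (auto-created token secrets, as on 1.19).
get_sa_token() {
  local ns="$1" sa="$2" secret
  secret="$(kubectl -n "$ns" get serviceaccount "$sa" -o jsonpath='{.secrets[0].name}')" || return 1
  if [ -z "$secret" ]; then
    echo "no token secret found for $ns/$sa" >&2
    return 1
  fi
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}
```

Usage: `get_sa_token kube-system admin`.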

Join worker nodes

Prepare the environment exactly as on the control-plane node (see above).

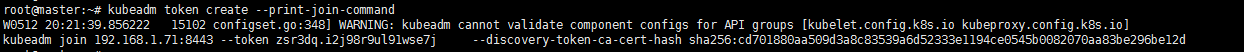

Then get a join command on the master:

kubeadm token create --print-join-command

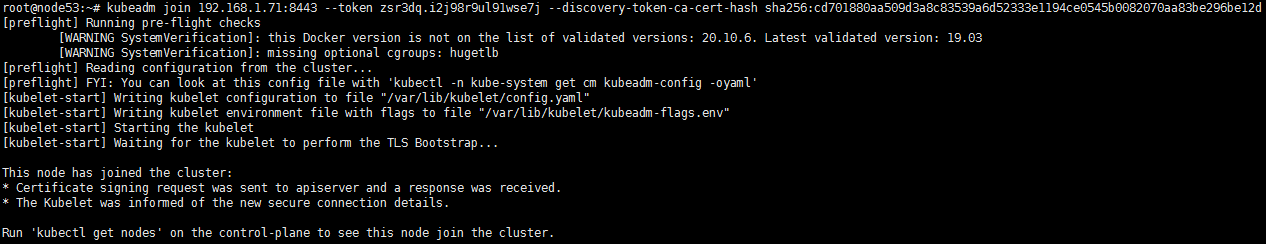

Then join the new node by running the generated command on the worker node:

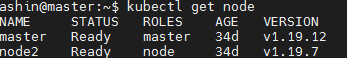

The node has joined the cluster successfully.

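Join control-plane nodes

Prerequisite: the new control-plane node is prepared the same way as the master.

1. On the master, upload the control-plane certificates and get the certificate key for joining (the uploaded certificates are deleted after two hours).

kubeadm init phase upload-certs --upload-certs

2. On the new node, run kubeadm join.

kubeadm join xxx --token xxxxxxxxxx --discovery-token-ca-cert-hash sha256:xxxxxxxxx --control-plane --certificate-key xxxxxxxxx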
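`kubeadm token create --print-join-command` already includes the CA hash. If it has to be computed by hand, the Kubernetes documentation derives it as the SHA-256 of the CA's DER-encoded public key; the helper below wraps that openssl pipeline (the function name is hypothetical).

```shell
# Hypothetical helper: compute the value for --discovery-token-ca-cert-hash
# (without the "sha256:" prefix) from the cluster CA certificate.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}
```

On the master, `echo "sha256:$(ca_cert_hash)"` prints the value to pass to `--discovery-token-ca-cert-hash`.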

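Safely remove a node

1. Mark the node as unschedulable.

kubectl cordon nodeName

2. Evict the pods on the node gracefully to other nodes.

kubectl drain nodeName --delete-local-data --force --ignore-daemonsets

3. Remove the node.

kubectl delete node nodeName

4. Clean up etcd (needed when removing a control-plane node, because each one runs a stacked etcd member). Run etcdctl in the etcd pod of a remaining control-plane node (etcd-master here), list the members, then remove the member of the deleted node by its ID.

kubectl -n kube-system exec etcd-master -- etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key member list

kubectl -n kube-system exec etcd-master -- etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/server.crt --key=/etc/kubernetes/pki/etcd/server.key member remove memberID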
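`etcdctl member list` prints one comma-separated line per member (ID, status, name, peer URLs, client URLs, learner flag). A small hypothetical helper to pick the ID by member name, which under kubeadm is the node name:

```shell
# Hypothetical helper: read `etcdctl member list` output on stdin and print
# the ID of the member with the given name; returns 1 if no member matches.
etcd_member_id() {
  awk -F', ' -v name="$1" '$3 == name { print $1; found = 1 } END { exit !found }'
}
```

Pipe the `member list` command above into it, e.g. `... member list | etcd_member_id master2`, and pass the result to `member remove`.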

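Cluster certificate expiry

Error:

Unable to connect to the server: x509: certificate has expired or is not yet valid

1. Check whether the certificates have expired.

openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text

kubeadm alpha certs check-expiration

2. [Optional] Back up the cluster configuration.

kubeadm config view > /root/kubeadm.yaml

3. Renew the cluster certificates; if needed, point --config at the kubeadm configuration file.

kubeadm alpha certs renew all

4. Restart the kubelet. The control-plane static pods (kube-apiserver, kube-controller-manager, kube-scheduler, etcd) also have to be restarted to load the renewed certificates.

systemctl restart kubelet

5. Sync the kubeconfig and clear the cache under .kube.

cp /etc/kubernetes/admin.conf /root/.kube/config

rm -rf /root/.kube/cache

6. Repeat these steps on every control-plane node.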
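To see at a glance which certificates are close to expiry, `openssl x509 -checkend <seconds>` can be run on each file; it exits 0 while the certificate is still valid that far into the future. A sketch with a hypothetical helper name:

```shell
# Hypothetical helper: succeed if the certificate expires within N days.
# openssl x509 -checkend exits 0 while the cert is still valid N seconds from now;
# an unreadable file also makes it fail, so it is reported as expiring.
cert_expires_within() {
  local cert="$1" days="$2"
  if openssl x509 -in "$cert" -noout -checkend $((days * 86400)) >/dev/null; then
    return 1
  fi
  return 0
}

# Usage: list control-plane certificates that expire within 30 days
# for crt in /etc/kubernetes/pki/*.crt /etc/kubernetes/pki/etcd/*.crt; do
#   cert_expires_within "$crt" 30 && echo "expires soon: $crt"
# done
```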

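Cluster upgrade

Upgrade the master nodes first, then the worker nodes.

Master node upgrade

1. Check /etc/apt/sources.list.

# List the versions available for installation

apt-get update

apt-cache madison kubeadm | head -n 10

2. Upgrade kubeadm to the target version.

UPVERSION=1.xx.x

apt-get install -y kubeadm=${UPVERSION}-00 && kubeadm version

3. [Optional] Check and confirm the upgrade plan.

kubeadm upgrade plan

Output: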

ashin@master:~$ sudo kubeadm upgrade plan

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade] Fetching available versions to upgrade to

[upgrade/versions] Cluster version: v1.19.7

[upgrade/versions] kubeadm version: v1.19.12

I0626 14:37:02.893417 21588 version.go:255] remote version is much newer: v1.21.2; falling back to: stable-1.19

[upgrade/versions] Latest stable version: v1.19.12

[upgrade/versions] Latest stable version: v1.19.12

[upgrade/versions] Latest version in the v1.19 series: v1.19.12

[upgrade/versions] Latest version in the v1.19 series: v1.19.12

Components that must be upgraded manually after you have upgraded the control plane with 'kubeadm upgrade apply':

COMPONENT CURRENT AVAILABLE

kubelet 2 x v1.19.7 v1.19.12

Upgrade to the latest version in the v1.19 series:

COMPONENT CURRENT AVAILABLE

kube-apiserver v1.19.7 v1.19.12

kube-controller-manager v1.19.7 v1.19.12

kube-scheduler v1.19.7 v1.19.12

kube-proxy v1.19.7 v1.19.12

CoreDNS 1.7.0 1.7.0

etcd 3.4.13-0 3.4.13-0

You can now apply the upgrade by executing the following command:

kubeadm upgrade apply v1.19.12

_____________________________________________________________________

The table below shows the current state of component configs as understood by this version of kubeadm.

Configs that have a "yes" mark in the "MANUAL UPGRADE REQUIRED" column require manual config upgrade or

resetting to kubeadm defaults before a successful upgrade can be performed. The version to manually

upgrade to is denoted in the "PREFERRED VERSION" column.

API GROUP CURRENT VERSION PREFERRED VERSION MANUAL UPGRADE REQUIRED

kubeproxy.config.k8s.io v1alpha1 v1alpha1 no

kubelet.config.k8s.io v1beta1 v1beta1 no

_____________________________________________________________________

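4. Run the upgrade.

Tip: only the first control-plane node to be upgraded goes through upgrade plan -> upgrade apply; all remaining nodes are upgraded with kubeadm upgrade node.

# --certificate-renewal=false: do not renew the cluster certificates (they are renewed automatically by default)

# --dry-run: do not apply any changes, only print what would be done

kubeadm upgrade apply v${UPVERSION} --certificate-renewal=false

Output: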

ashin@master:~$ sudo kubeadm upgrade apply v${UPVERSION} --certificate-renewal=false

[upgrade/config] Making sure the configuration is correct:

[upgrade/config] Reading configuration from the cluster...

[upgrade/config] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[preflight] Running pre-flight checks.

[upgrade] Running cluster health checks

[upgrade/version] You have chosen to change the cluster version to "v1.19.12"

[upgrade/versions] Cluster version: v1.19.7

[upgrade/versions] kubeadm version: v1.19.12

[upgrade/confirm] Are you sure you want to proceed with the upgrade? [y/N]: y

[upgrade/prepull] Pulling images required for setting up a Kubernetes cluster

[upgrade/prepull] This might take a minute or two, depending on the speed of your internet connection

[upgrade/prepull] You can also perform this action in beforehand using 'kubeadm config images pull'

[upgrade/apply] Upgrading your Static Pod-hosted control plane to version "v1.19.12"...

Static pod: kube-apiserver-master hash: 9d002bf9c7374d8dc4c04c4ddbc764dd

Static pod: kube-controller-manager-master hash: d2563b392c9c83de256e527e3d5b159a

Static pod: kube-scheduler-master hash: 8b9d324b0a3824b6f74dcac03a5ac11c

[upgrade/etcd] Upgrading to TLS for etcd

[upgrade/etcd] Non fatal issue encountered during upgrade: the desired etcd version "3.4.13-0" is not newer than the currently installed "3.4.13-0". Skipping etcd upgrade

[upgrade/staticpods] Writing new Static Pod manifests to "/etc/kubernetes/tmp/kubeadm-upgraded-manifests069730205"

[upgrade/staticpods] Preparing for "kube-apiserver" upgrade

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-apiserver.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-06-26-16-01-35/kube-apiserver.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-apiserver-master hash: 9d002bf9c7374d8dc4c04c4ddbc764dd

Static pod: kube-apiserver-master hash: bb25bc799af813b7b05b21afd6f730b7

[apiclient] Found 1 Pods for label selector component=kube-apiserver

[upgrade/staticpods] Component "kube-apiserver" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-controller-manager" upgrade

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-controller-manager.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-06-26-16-01-35/kube-controller-manager.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-controller-manager-master hash: d2563b392c9c83de256e527e3d5b159a

[apiclient] Found 1 Pods for label selector component=kube-controller-manager

[upgrade/staticpods] Component "kube-controller-manager" upgraded successfully!

[upgrade/staticpods] Preparing for "kube-scheduler" upgrade

[upgrade/staticpods] Moved new manifest to "/etc/kubernetes/manifests/kube-scheduler.yaml" and backed up old manifest to "/etc/kubernetes/tmp/kubeadm-backup-manifests-2021-06-26-16-01-35/kube-scheduler.yaml"

[upgrade/staticpods] Waiting for the kubelet to restart the component

[upgrade/staticpods] This might take a minute or longer depending on the component/version gap (timeout 5m0s)

Static pod: kube-scheduler-master hash: 8b9d324b0a3824b6f74dcac03a5ac11c

[apiclient] Found 1 Pods for label selector component=kube-scheduler

[upgrade/staticpods] Component "kube-scheduler" upgraded successfully!

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.19" in namespace kube-system with the configuration for the kubelets in the cluster

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[addons] Applied essential addon: CoreDNS

[endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address

[addons] Applied essential addon: kube-proxy

[upgrade/successful] SUCCESS! Your cluster was upgraded to "v1.19.12". Enjoy!

[upgrade/kubelet] Now that your control plane is upgraded, please proceed with upgrading your kubelets if you haven't already done so.

5. Upgrade kubelet and kubectl.

apt-get install -y kubelet=${UPVERSION}-00 kubectl=${UPVERSION}-00

6. Restart the kubelet and upgrade the node.

systemctl daemon-reload && systemctl restart kubelet

kubeadm upgrade node

Check the result: the master has been upgraded successfully.
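kubeadm supports upgrading only one minor version at a time (for example 1.19.x to 1.20.x), so `UPVERSION` is usually the newest patch release of the current or the next minor. The hypothetical helper below picks it from `apt-cache madison kubeadm` output, assuming apt's `package | version | source` layout and the `-00` package revision used above.

```shell
# Hypothetical helper: read `apt-cache madison kubeadm` output on stdin and
# print the newest version of the given minor release without the "-00" suffix.
latest_patch() {
  awk -F'|' -v prefix="$1." '{
    v = $2
    gsub(/ /, "", v)            # " 1.19.12-00 " -> "1.19.12-00"
    sub(/-[0-9]+$/, "", v)      # "1.19.12-00"   -> "1.19.12"
    if (index(v, prefix) == 1) print v
  }' | sort -V | tail -n 1
}

# Usage: UPVERSION=$(apt-cache madison kubeadm | latest_patch 1.19)
```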

Worker node upgrade

1. Check /etc/apt/sources.list.

# List the versions available for installation

apt-get update

apt-cache madison kubeadm | head -n 10

2. Upgrade kubeadm, kubelet and kubectl.

UPVERSION=1.xx.x

apt-get install -y kubeadm=${UPVERSION}-00 kubelet=${UPVERSION}-00 kubectl=${UPVERSION}-00

3. Restart the kubelet.

systemctl daemon-reload && systemctl restart kubelet

4. Upgrade the node.

kubeadm upgrade node

Check the result: the worker node has been upgraded successfully as well.

Cluster cleanup

# kubeadm reset

kubeadm reset -f

# Clean up Docker: stop and remove all containers (-r skips the command when the list is empty)

docker ps -q | xargs -r docker stop

docker ps -aq | xargs -r docker rm -f

docker ps

# Clean up files and installed packages

rm -rf /raid/etcd/

rm -rf /etc/kubernetes/

rm -rf /etc/cni/net.d

rm -rf /var/lib/calico/

rm -f /root/.kube/config

systemctl daemon-reload

apt-get autoremove -y --purge kubeadm kubelet docker-ce containerd.io ceph-common ceph-fuse

# Clean up iptables and IPVS rules (kubeadm reset does not remove them)

iptables --flush

iptables -t nat -F

ipvsadm --clear

常见问题

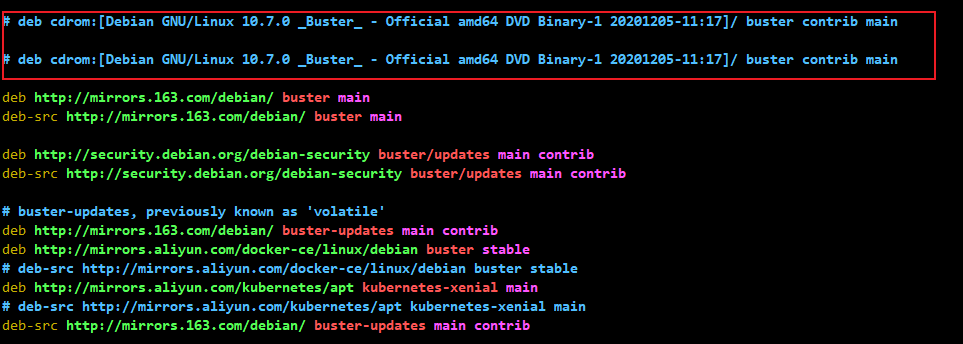

安装软件时报错:Media change : please insert the disk labeled

这时可以打开文件/etc/apt/sources.list文件,注释掉cdrom那一行。
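The same edit as a command, as a sketch: the hypothetical helper below backs up the file and comments out every line that starts with `deb cdrom:`.

```shell
# Hypothetical helper: back up sources.list and comment out its cdrom entries.
disable_cdrom_source() {
  local list="${1:-/etc/apt/sources.list}"
  cp "$list" "$list.bak"
  # "&" re-inserts the matched "deb cdrom:" after the comment marker
  sed -i 's/^deb cdrom:/# &/' "$list"
}
```

Run `disable_cdrom_source` as root, then `apt-get update` again.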