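A file was accidentally deleted from a CephFS directory. Because the deletion had only just happened, the object's metadata and the disk regions holding its data had most likely not been overwritten yet. The idea is to extract the object's metadata from the DB partition of the OSD that held it and import it back into RocksDB, which makes the object's data reachable again. The data can then be re-imported into the cluster so that the object is accessible through the rados interface, and the file can finally be reassembled from its objects.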

[root@node101 cephfs]# pwd

/vcluster/cephfs

[root@node101 cephfs]# ll

total 1

drwxr-xr-x 1 root root 1048600 Dec 3 12:18 testdir1

-rw-r--r-- 1 root root 15 Dec 6 10:13 testfile1

-rw-r--r-- 1 root root 9 Dec 6 11:36 testfile2

[root@node101 cephfs]# md5sum testfile2

2360dcd27c52f97b6cf9676403f82ce3 testfile2

[root@node101 cephfs]# cat testfile2

dfdfdfdf

[root@node101 cephfs]# rm -f testfile2

I. Find the object behind the file

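First we need the name of the object that backs the file. Right after the deletion the MDS cache has not been updated yet, so the omap metadata of the parent directory still lists the file, and the object name can be derived from that entry. The detailed method is described in another post on my blog.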

[root@node101 cephfs]# rados -p metadata listomapvals 1.00000000

testdir1_head

value (490 bytes) :

00000000 02 00 00 00 00 00 00 00 49 10 06 bf 01 00 00 00 |........I.......|

00000010 00 00 00 00 01 00 00 00 00 00 00 ad 9a a9 61 70 |..............ap|

00000020 42 61 00 ed 41 00 00 00 00 00 00 00 00 00 00 01 |Ba..A...........|

00000030 00 00 00 00 02 00 00 00 00 00 00 00 02 02 18 00 |................|

00000040 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ff ff |................|

00000050 ff ff ff ff ff ff 00 00 00 00 00 00 00 00 00 00 |................|

00000060 00 00 01 00 00 00 ff ff ff ff ff ff ff ff 00 00 |................|

00000070 00 00 00 00 00 00 00 00 00 00 ad 9a a9 61 70 42 |.............apB|

00000080 61 00 f6 7e a8 61 c8 90 1e 29 00 00 00 00 00 00 |a..~.a...)......|

00000090 00 00 03 02 28 00 00 00 00 00 00 00 00 00 00 00 |....(...........|

000000a0 ad 9a a9 61 70 42 61 00 04 00 00 00 00 00 00 00 |...apBa.........|

000000b0 00 00 00 00 00 00 00 00 04 00 00 00 00 00 00 00 |................|

000000c0 03 02 38 00 00 00 00 00 00 00 00 00 00 00 18 00 |..8.............|

000000d0 10 00 00 00 00 00 04 00 00 00 00 00 00 00 01 00 |................|

000000e0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

000000f0 00 00 00 00 00 00 ad 9a a9 61 e8 2b f3 07 03 02 |.........a.+....|

00000100 38 00 00 00 00 00 00 00 00 00 00 00 18 00 10 00 |8...............|

00000110 00 00 00 00 04 00 00 00 00 00 00 00 01 00 00 00 |................|

00000120 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000130 00 00 00 00 ad 9a a9 61 e8 2b f3 07 12 00 00 00 |.......a.+......|

00000140 00 00 00 00 00 00 00 00 00 00 00 00 01 00 00 00 |................|

00000150 00 00 00 00 02 00 00 00 00 00 00 00 00 00 00 00 |................|

00000160 00 00 00 00 00 00 00 00 ff ff ff ff ff ff ff ff |................|

00000170 00 00 00 00 01 01 10 00 00 00 00 00 00 00 00 00 |................|

00000180 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000190 00 00 00 00 00 00 00 00 00 00 00 00 00 00 f6 7e |...............~|

000001a0 a8 61 c8 90 1e 29 04 00 00 00 00 00 00 00 ff ff |.a...)..........|

000001b0 ff ff 01 01 16 00 00 00 00 00 00 00 00 00 00 00 |................|

000001c0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

000001d0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 fe ff |................|

000001e0 ff ff ff ff ff ff 00 00 00 00 |..........|

000001ea

....

testfile2_head

value (528 bytes) :

00000000 02 00 00 00 00 00 00 00 49 10 06 e5 01 00 00 04 |........I.......|

00000010 00 00 00 00 01 00 00 00 00 00 00 48 85 ad 61 f8 |...........H..a.|

00000020 60 a9 0c a4 81 00 00 00 00 00 00 00 00 00 00 01 |`...............|

00000030 00 00 00 00 00 00 00 00 00 00 00 00 02 02 18 00 |................|

00000040 00 00 00 00 40 00 01 00 00 00 00 00 40 00 01 00 |....@.......@...|

00000050 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000060 00 00 01 00 00 00 ff ff ff ff ff ff ff ff 00 00 |................|

00000070 00 00 00 00 00 00 00 00 00 00 48 85 ad 61 f8 60 |..........H..a.`|

00000080 a9 0c 48 85 ad 61 f8 60 a9 0c 00 00 00 00 01 00 |..H..a.`........|

00000090 00 00 b0 07 37 00 00 00 00 00 02 02 18 00 00 00 |....7...........|

000000a0 00 00 00 00 00 00 00 00 00 00 40 00 00 00 00 00 |..........@.....|

000000b0 01 00 00 00 00 00 00 00 03 02 28 00 00 00 00 00 |..........(.....|

000000c0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

000000e0 00 00 00 00 00 00 03 02 38 00 00 00 00 00 00 00 |........8.......|

000000f0 00 00 00 00 00 00 00 00 00 00 00 00 01 00 00 00 |................|

00000100 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

00000120 00 00 00 00 03 02 38 00 00 00 00 00 00 00 00 00 |......8.........|

00000130 00 00 00 00 00 00 00 00 00 00 01 00 00 00 00 00 |................|

00000140 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

00000160 00 00 1d 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

00000170 00 00 01 00 00 00 00 00 00 00 1d 00 00 00 00 00 |................|

00000180 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ff ff |................|

00000190 ff ff ff ff ff ff 00 00 00 00 01 01 10 00 00 00 |................|

000001a0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

000001c0 00 00 00 00 48 85 ad 61 f8 60 a9 0c 00 00 00 00 |....H..a.`......|

000001d0 00 00 00 00 ff ff ff ff 01 01 16 00 00 00 00 00 |................|

000001e0 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|

*

00000200 00 00 00 00 fe ff ff ff ff ff ff ff 00 00 00 00 |................|

00000210

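The lookup shows that the object name for testfile2 is 10000000004. So far we only know the object name; next we need to work out which PG and which OSDs used to hold it. CRUSH is a pseudo-random but deterministic algorithm: as long as inputs such as the object name and the buckets involved do not change, it always computes the same placement.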
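As a rough illustration of where 10000000004 comes from: in this particular dump, the eight bytes starting at offset 0x0f of the testfile2_head value read `04 00 00 00 00 01 00 00`, which is the inode number in little-endian order. The offset is an observation about this dump only, not a documented constant, and the helper name `le_bytes_to_ino` is made up for this sketch.

```shell
# Convert a little-endian byte string (as printed by hexdump) into a hex inode number.
le_bytes_to_ino() {
    lb_out=""
    for lb_b in $1; do
        lb_out="${lb_b}${lb_out}"   # prepend, so the last byte becomes most significant
    done
    printf '%x\n' "0x${lb_out}"
}

# ASSUMPTION: bytes 0x0f..0x16 of the testfile2_head value hold the inode number.
ino=$(le_bytes_to_ino "04 00 00 00 00 01 00 00")
echo "inode:        ${ino}"

# CephFS names data objects <inode hex>.<8-digit hex object index>.
printf 'first object: %s.%08x\n' "${ino}" 0
```

Applying the same helper to the testdir1_head bytes at that offset (`00 00 00 00 00 01 00 00`) gives 10000000000, which is at least consistent with the reading above.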

II. Find the pool and PG of the object

First check which pool the file's directory maps to. The layout shows that the pool is jspool.

[root@node101 cephfs]# getfattr -n ceph.dir.layout .

# file: .

ceph.dir.layout="stripe_unit=4194304 stripe_count=1 object_size=4194304 pool=jspool"

Given the object name and the name of its pool, ceph osd map shows that the object used to live on the three OSDs with IDs [8,6,7], in PG 1.5.

[root@node101 cephfs]# ceph osd map jspool 10000000004.00000000

osdmap e273 pool 'jspool' (1) object '10000000004.00000000' -> pg 1.17c5e6c5 (1.5) -> up ([8,6,7], p8) acting ([8,6,7], p8)
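The step from the raw hash 17c5e6c5 to PG 1.5 can be sketched with Ceph's stable-mod rule, as far as I understand it. The post does not show pg_num for jspool, so `pg_num=8` below is purely an assumed value for illustration, and `stable_mod` is a local helper, not a Ceph command.

```shell
# Stable modulo: keeps placement stable while pg_num grows between powers of two.
stable_mod() {   # usage: stable_mod HASH PG_NUM PG_MASK
    sm_x=$(( $1 )); sm_b=$(( $2 )); sm_mask=$(( $3 ))
    if [ $(( sm_x & sm_mask )) -lt "$sm_b" ]; then
        echo $(( sm_x & sm_mask ))
    else
        echo $(( sm_x & (sm_mask >> 1) ))
    fi
}

hash=0x17c5e6c5   # from "pg 1.17c5e6c5" in the ceph osd map output
pg_num=8          # ASSUMPTION: not shown in the post
pg_mask=7         # smallest (2^n - 1) that covers pg_num - 1

printf 'pg 1.%x\n' "$(stable_mod "$hash" "$pg_num" "$pg_mask")"
```

With these assumed values the sketch prints `pg 1.5`, matching the tool's output; on a real cluster `ceph osd map` remains the authoritative answer.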

Listing jspool with rados shows no trace of the object, which confirms that it has been removed from the cluster.

[root@node101 cephfs]# rados -p jspool ls |grep 10000000004

[root@node101 cephfs]#

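Stop all the OSDs that held the object, so that new writes to the cluster cannot overwrite its data. On every node we first create a directory named after the object; it will hold the files extracted for that object.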

[root@node101 cephfs]# ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-15 0.05099 root jishan_bucket

-9 0.01700 host node101_js

6 hdd 0.01700 osd.6 up 1.00000 1.00000

-11 0.01700 host node102_js

8 hdd 0.01700 osd.8 up 1.00000 1.00000

-13 0.01700 host node103_js

7 hdd 0.01700 osd.7 up 1.00000 1.00000

-1 0.09897 root default

-3 0.03299 host node101

0 hdd 0.01700 osd.0 up 1.00000 1.00000

3 hdd 0.01700 osd.3 up 1.00000 1.00000

-7 0.03299 host node102

1 hdd 0.01700 osd.1 up 1.00000 1.00000

5 hdd 0.01700 osd.5 up 1.00000 1.00000

-5 0.03299 host node103

2 hdd 0.01700 osd.2 up 1.00000 1.00000

4 hdd 0.01700 osd.4 up 1.00000 1.00000

[root@node101 cephfs]# cd

[root@node101 ~]# mkdir 10000000004.00000000

[root@node101 ~]# cd 10000000004.00000000

[root@node101 10000000004.00000000]# ls

[root@node101 10000000004.00000000]# systemctl stop ceph-osd@6

[root@node102 10000000004.00000000]# systemctl stop ceph-osd@8

[root@node103 10000000004.00000000]# systemctl stop ceph-osd@7

III. Find the object's version before deletion

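Next we need the version the object had just before it was deleted, which can be read from the log of its PG. Note that object 10000000004.00000000 lives in an erasure-coded pool, so the pgid on each OSD carries a shard suffix. The suffix follows the order of the OSDs that hold the object: the pgid on the first OSD is 1.5s0, on the second 1.5s1, and so on. osd.6 is second in [8,6,7], so its pgid is 1.5s1.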
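The shard numbering rule can be written down as a tiny helper. This is only a sketch of the rule described above; `shard_pgids` is a hypothetical name, and the OSD order is taken from the acting set [8,6,7] printed by ceph osd map.

```shell
# Print the per-OSD shard pgid of an erasure-coded PG, given the acting set in order.
shard_pgids() {   # usage: shard_pgids PGID OSD...
    sp_pgid=$1; shift
    sp_i=0
    for sp_osd in "$@"; do
        echo "osd.${sp_osd} ${sp_pgid}s${sp_i}"
        sp_i=$(( sp_i + 1 ))
    done
}

shard_pgids 1.5 8 6 7
```

The output pairs osd.8 with 1.5s0, osd.6 with 1.5s1 and osd.7 with 1.5s2, which are the pgids used with ceph-objectstore-tool in this post.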

[root@node101 10000000004.00000000]# ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-6/ --type bluestore --pgid 1.5s1 --op log

{

"pg_log_t": {

"head": "273'3",

"tail": "0'0",

"log": [

{

"op": "modify",

"object": "1:a367a3e8:::10000000004.00000000:head",

"version": "273'1",

"prior_version": "0'0",

"reqid": "mds.0.10:153",

"extra_reqids": [],

"mtime": "2021-12-06 11:36:46.637859",

"return_code": 0,

"mod_desc": {

"object_mod_desc": {

"can_local_rollback": true,

"rollback_info_completed": true,

"ops": [

{

"code": "CREATE"

}

]

}

}

},

{

"op": "modify",

"object": "1:a367a3e8:::10000000004.00000000:head",

"version": "273'2",

"prior_version": "273'1",

"reqid": "client.3606448.0:5",

"extra_reqids": [],

"mtime": "2021-12-06 11:36:40.223428",

"return_code": 0,

"mod_desc": {

"object_mod_desc": {

"can_local_rollback": true,

"rollback_info_completed": false,

"ops": [

{

"code": "SETATTRS",

"attrs": [

"_",

"hinfo_key",

"snapset"

]

},

{

"code": "APPEND",

"old_size": 0

}

]

}

}

},

{

"op": "delete",

"object": "1:a367a3e8:::10000000004.00000000:head",

"version": "273'3",

"prior_version": "273'2",

"reqid": "mds.0.10:177",

"extra_reqids": [],

"mtime": "2021-12-06 14:31:33.337004",

"return_code": 0,

"mod_desc": {

"object_mod_desc": {

"can_local_rollback": true,

"rollback_info_completed": true,

"ops": [

{

"code": "RMOBJECT",

"old_version": 3

}

]

}

}

}

],

"dups": []

},

"pg_missing_t": {

"missing": [],

"may_include_deletes": true

}

}

[root@node101 10000000004.00000000]#

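The PG log shows that the last version of object 10000000004.00000000 before the delete was 273'2 (the prior_version of the delete entry); note it down. The log also shows the object's full name, "1:a367a3e8:::10000000004.00000000:head", where 1 is the ID of the pool that holds the object and a367a3e8 is the hash value that identifies the object in its onode name.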
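The a367a3e8 field is not arbitrary: it appears to be the object's 32-bit placement hash 0x17c5e6c5 (the value printed by ceph osd map) with its bits reversed. The sketch below checks that relationship; `reverse_bits32` is a helper written for this post, not part of any Ceph tool.

```shell
# Reverse the 32 bits of a value and print it as 8 hex digits.
reverse_bits32() {
    rb_v=$(( $1 )); rb_r=0; rb_i=0
    while [ "$rb_i" -lt 32 ]; do
        rb_r=$(( (rb_r << 1) | (rb_v & 1) ))   # move the lowest bit of v into r
        rb_v=$(( rb_v >> 1 ))
        rb_i=$(( rb_i + 1 ))
    done
    printf '%08x\n' "$rb_r"
}

reverse_bits32 0x17c5e6c5      # hash from "pg 1.17c5e6c5"
printf '%d\n' 0x17c5e6c5       # the same hash in decimal
```

The first line printed is a367a3e8, and the decimal form 398845637 is the "hash" value that ceph-dencoder and ceph-objectstore-tool report for this object elsewhere in the post.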

IV. Export the object's metadata from disk

Next we look for the object's metadata on the DB partition of osd.6 on node101. The listing shows that the DB partition of this OSD is /dev/sdc2.

[root@node101 10000000004.00000000]# df

Filesystem 1K-blocks Used Available Use% Mounted on

devtmpfs 3804100 0 3804100 0% /dev

tmpfs 3821044 0 3821044 0% /dev/shm

tmpfs 3821044 25360 3795684 1% /run

tmpfs 3821044 0 3821044 0% /sys/fs/cgroup

/dev/mapper/centos-root 29128812 5272884 23855928 19% /

/dev/sda1 1038336 190540 847796 19% /boot

tmpfs 3821044 24 3821020 1% /var/lib/ceph/osd/ceph-0

tmpfs 3821044 24 3821020 1% /var/lib/ceph/osd/ceph-6

tmpfs 3821044 24 3821020 1% /var/lib/ceph/osd/ceph-3

192.168.30.101,192.168.30.102,192.168.30.103:/ 31690752 0 31690752 0% /vcluster/cephfs

tmpfs 764212 0 764212 0% /run/user/0

[root@node101 10000000004.00000000]# ll /var/lib/ceph/osd/ceph-6

total 24

lrwxrwxrwx 1 ceph ceph 93 Dec 2 08:55 block -> /dev/ceph-7a844e29-5415-443e-8376-e176c227cfc3/osd-block-5a489267-c3f9-4017-bfb3-29909066abb5

lrwxrwxrwx 1 ceph ceph 9 Dec 2 08:55 block.db -> /dev/sdc2

lrwxrwxrwx 1 ceph ceph 9 Dec 2 08:55 block.wal -> /dev/sdc1

-rw------- 1 ceph ceph 37 Dec 2 08:55 ceph_fsid

-rw------- 1 ceph ceph 37 Dec 2 08:55 fsid

-rw------- 1 ceph ceph 55 Dec 2 08:55 keyring

-rw------- 1 ceph ceph 6 Dec 2 08:55 ready

-rw------- 1 ceph ceph 10 Dec 2 08:55 type

-rw------- 1 ceph ceph 2 Dec 2 08:55 whoami

[root@node101 10000000004.00000000]#

Start searching for the object's metadata.

[root@node101 10000000004.00000000]# hexedit -m --color /dev/sdc1

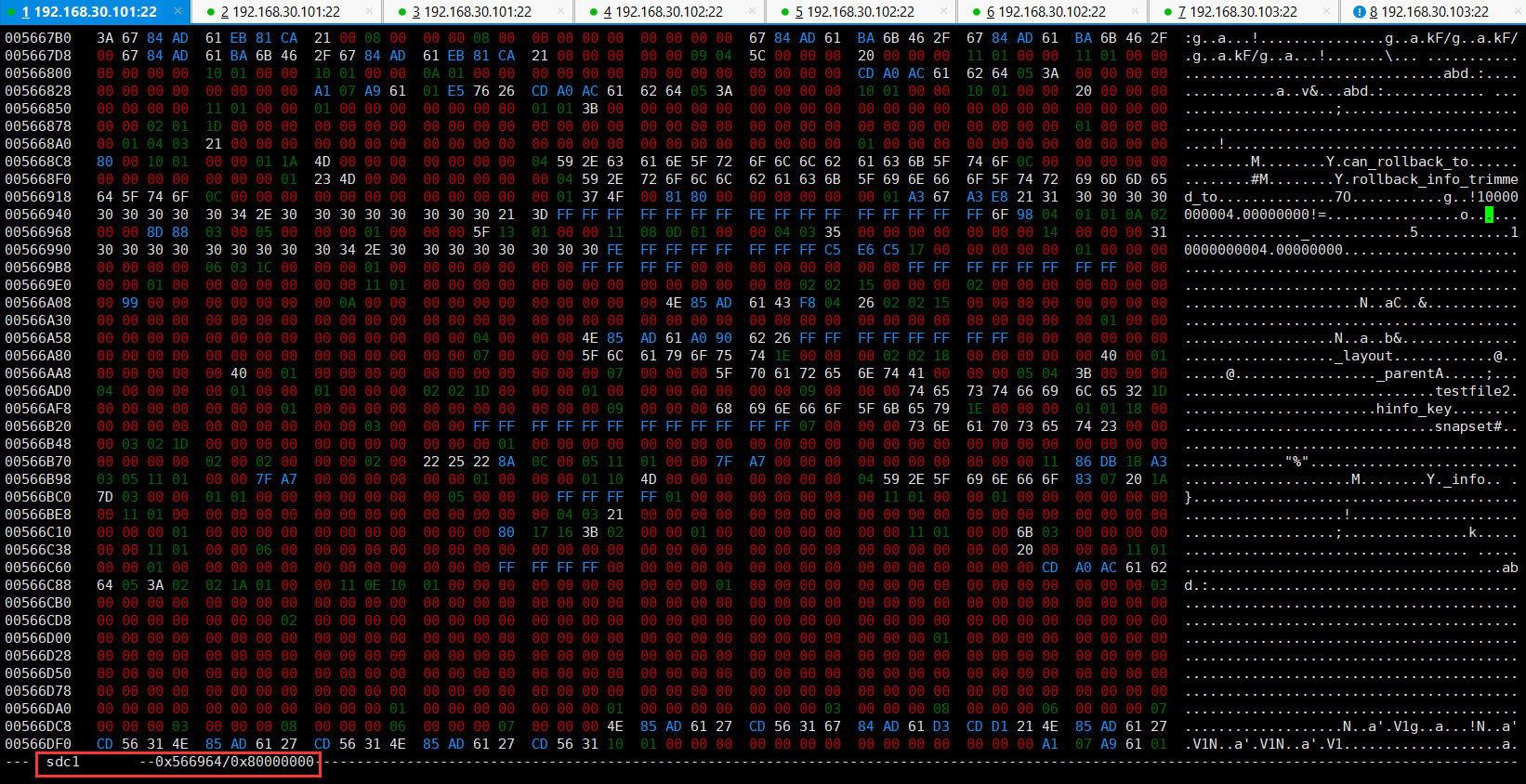

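The screenshot shows sdc1 rather than sdc2 because the onode metadata of this object had not yet been flushed from the WAL partition to the DB partition, so it has to be searched for in the WAL partition. At offset 0x566964 of sdc1 there is an onode record for object 10000000004.00000000.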

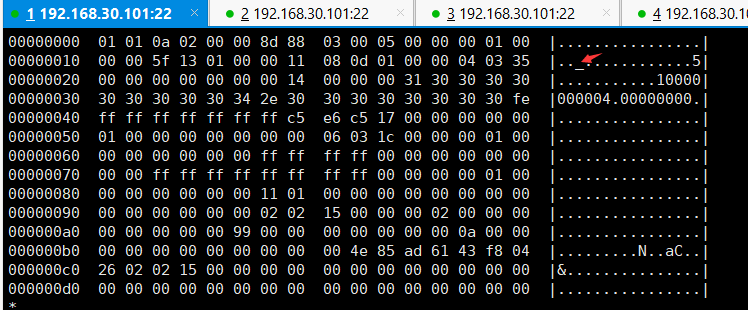
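Scrolling through a partition in hexedit works, but candidate locations can also be listed non-interactively. The sketch below uses GNU grep's byte-offset output on a scratch file; on a real system the file argument would be the WAL device, opened read-only. Note that it reports where the object name occurs, not where the surrounding onode record starts, so the hex view is still needed to find the record boundary. `find_name_offsets` is a hypothetical helper.

```shell
# Print the byte offset of every occurrence of a fixed string in a binary file.
find_name_offsets() {   # usage: find_name_offsets FILE STRING
    # -a: treat binary as text, -b: print byte offset, -o: only the match, -F: fixed string
    LC_ALL=C grep -abo -F -- "$2" "$1" | cut -d: -f1
}

# Self-contained demo: 100 filler bytes, the object name, then more filler.
name='10000000004.00000000'
img=$(mktemp)
head -c 100 /dev/zero > "$img"
printf '%s' "$name" >> "$img"
head -c 50 /dev/zero >> "$img"

find_name_offsets "$img" "$name"
rm -f "$img"
```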

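Export this metadata record from the disk. Its real size is not known yet, so for now we assume the onode is 1024 bytes long and copy that many bytes (offset 0x566964 is 5663076 in decimal).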

[root@node101 10000000004.00000000]# dd if=/dev/sdc1 of=10000000004.00000000_onode bs=1 count=1024 skip=5663076

1024+0 records in

1024+0 records out

1024 bytes (1.0 kB) copied, 0.0101649 s, 101 kB/s
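The skip value passed to dd is simply the hex offset from the hex editor converted to decimal. A small sketch of that conversion, plus a wrapper around the same dd invocation; both function names are made up for illustration.

```shell
# Convert a 0x-prefixed hex offset to the decimal value dd expects.
hex_to_dec() { printf '%d\n' "$1"; }

hex_to_dec 0x566964    # offset of the first onode copy on sdc1
hex_to_dec 0x56A131    # offset of the second copy found in the next section

# Copy COUNT bytes starting at byte OFFSET, exactly like the dd command above.
extract_window() {     # usage: extract_window SRC OFFSET COUNT DEST
    dd if="$1" of="$4" bs=1 skip="$2" count="$3" 2>/dev/null
}
```

For example, `extract_window /dev/sdc1 "$(hex_to_dec 0x566964)" 1024 10000000004.00000000_onode` would reproduce the export shown above. With bs=1 the copy is slow, but for a window of about 1 KiB that does not matter.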

V. Decode the metadata

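Decoding the file with ceph-dencoder reveals the onode metadata. The first attempt fails with "stray data at end of buffer, offset 528", which tells us the onode itself is 528 bytes long, so we trim the file to that length and decode again.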

[root@node101 10000000004.00000000]# ceph-dencoder type bluestore_onode_t import 10000000004.00000000_onode decode dump_json

error: stray data at end of buffer, offset 528

[root@node101 10000000004.00000000]# dd if=10000000004.00000000_onode of=10000000004.00000000_onode_file bs=1 count=528

528+0 records in

528+0 records out

528 bytes (528 B) copied, 0.00501585 s, 105 kB/s

[root@node101 10000000004.00000000]# ceph-dencoder type bluestore_onode_t import 10000000004.00000000_onode_file decode dump_json

{

"nid": 50189,

"size": 0,

"attrs": {

"attr": {

"name": "_",

"len": 275

},

"attr": {

"name": "_layout",

"len": 30

},

"attr": {

"name": "_parent",

"len": 65

},

"attr": {

"name": "hinfo_key",

"len": 30

},

"attr": {

"name": "snapset",

"len": 35

}

},

"flags": "",

"extent_map_shards": [],

"expected_object_size": 0,

"expected_write_size": 0,

"alloc_hint_flags": 0

}

[root@node101 10000000004.00000000]#
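The trimming step above relies on the number at the end of ceph-dencoder's error message. A minimal sketch of pulling that number out of the message text, assuming the message format stays as shown in this post; `stray_offset` is a hypothetical helper.

```shell
# Extract the trailing offset from a "stray data at end of buffer" message.
stray_offset() {
    case "$1" in
        *"stray data at end of buffer, offset "*) echo "${1##*offset }" ;;
        *) return 1 ;;   # not the message we expect
    esac
}

len=$(stray_offset "error: stray data at end of buffer, offset 528")
echo "onode length: ${len} bytes"
```

The resulting value is what goes into `count=` when trimming the 1024-byte export down to the real onode.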

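The decoded output lists five attrs together with the size of each. We now extract the first one, "_". Its value starts 4 bytes after the attr name in the onode file (those 4 bytes hold the length of the value) and is 275 bytes long; the hexdump shows that this corresponds to byte offset 23.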

[root@node101 10000000004.00000000]# hexdump -C 10000000004.00000000_onode |less

[root@node101 10000000004.00000000]# dd if=10000000004.00000000_onode_file of=10000000004.00000000_ bs=1 skip=23 count=275

275+0 records in

275+0 records out

275 bytes (275 B) copied, 0.00345844 s, 79.5 kB/s

[root@node101 10000000004.00000000]# ceph-dencoder import 10000000004.00000000_ type object_info_t decode dump_json

{

"oid": {

"oid": "10000000004.00000000",

"key": "",

"snapid": -2,

"hash": 398845637,

"max": 0,

"pool": 1,

"namespace": ""

},

"version": "273'1",

"prior_version": "0'0",

"last_reqid": "mds.0.10:153",

"user_version": 1,

"size": 0,

"mtime": "2021-12-06 11:36:46.637859",

"local_mtime": "2021-12-06 11:36:46.643993",

"lost": 0,

"flags": [

"dirty"

],

"legacy_snaps": [],

"truncate_seq": 0,

"truncate_size": 0,

"data_digest": "0xffffffff",

"omap_digest": "0xffffffff",

"expected_object_size": 0,

"expected_write_size": 0,

"alloc_hint_flags": 0,

"manifest": {

"type": 0,

"redirect_target": {

"oid": "",

"key": "",

"snapid": 0,

"hash": 0,

"max": 0,

"pool": -9223372036854775808,

"namespace": ""

}

},

"watchers": {}

}

[root@node101 10000000004.00000000]#
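The "count 4 bytes after the attr name" rule used for the extraction can be sanity-checked by reading those 4 bytes as a little-endian integer: for the "_" attr they should decode to 275. The sketch below does this on a scratch buffer that mimics one attr header; the buffer contents and the helper name `read_le32` are assumptions for illustration, not bytes copied from the real onode.

```shell
# Read a 4-byte little-endian unsigned integer at byte offset OFF of FILE.
read_le32() {   # usage: read_le32 FILE OFF
    rl_file=$1; rl_off=$2
    # od prints the four bytes as decimal numbers; word splitting puts them in $1..$4
    set -- $(od -An -tu1 -j "$rl_off" -N 4 "$rl_file")
    echo $(( $1 | ($2 << 8) | ($3 << 16) | ($4 << 24) ))
}

# Scratch buffer: the name "_" followed by the length 0x00000113.
buf=$(mktemp)
printf '_\023\001\000\000' > "$buf"
read_le32 "$buf" 1
rm -f "$buf"
```

If the value read just before the candidate offset equals the "len" reported by ceph-dencoder for that attr, the skip value for dd is very likely correct.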

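Decoding the "_" attr with ceph-dencoder shows that this onode copy corresponds to object version 273'1. The PG log said the last version before deletion was 273'2, so this is not the copy we need, and we keep searching the sdc1 partition.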

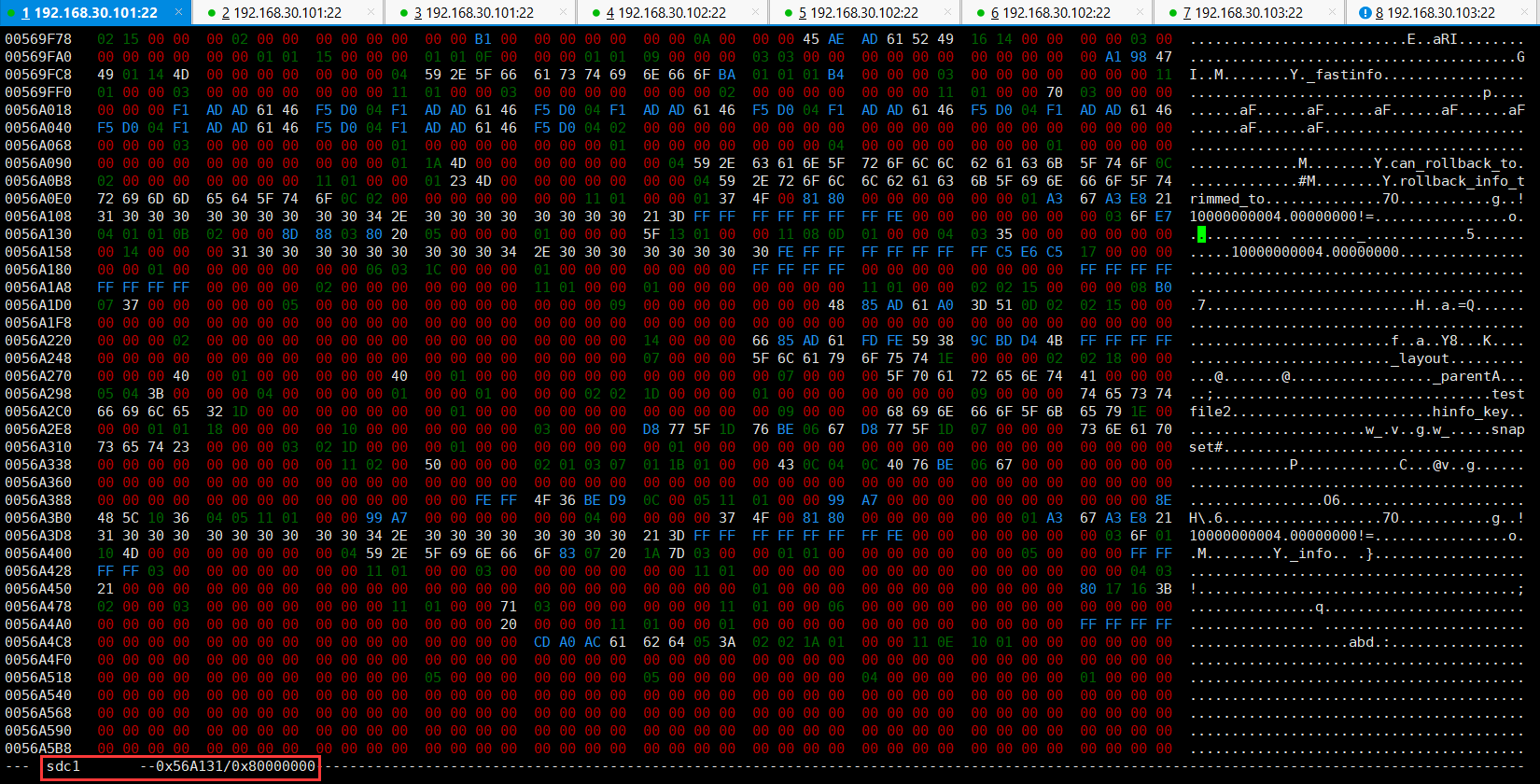

At offset 0x56A131 (5677361 in decimal) there is another copy. Export it from sdc1 in the same way and extract the "_" attr from it.

[root@node101 10000000004.00000000]# dd if=/dev/sdc1 of=10000000004.00000000_onode2 bs=1 count=1024 skip=5677361

1024+0 records in

1024+0 records out

1024 bytes (1.0 kB) copied, 0.0493573 s, 20.7 kB/s

[root@node101 10000000004.00000000]# ceph-dencoder type bluestore_onode_t import 10000000004.00000000_onode2 decode dump_json

error: stray data at end of buffer, offset 529

[root@node101 10000000004.00000000]# hexdump -C 10000000004.00000000_onode2|less

[root@node101 10000000004.00000000]# dd if=10000000004.00000000_onode2 of=10000000004.00000000_2 bs=1 skip=24 count=275

275+0 records in

275+0 records out

275 bytes (275 B) copied, 0.00267783 s, 103 kB/s

[root@node101 10000000004.00000000]# ceph-dencoder import 10000000004.00000000_2 type object_info_t decode dump_json

{

"oid": {

"oid": "10000000004.00000000",

"key": "",

"snapid": -2,

"hash": 398845637,

"max": 0,

"pool": 1,

"namespace": ""

},

"version": "273'2",

"prior_version": "273'1",

"last_reqid": "client.3606448.0:5",

"user_version": 2,

"size": 9,

"mtime": "2021-12-06 11:36:40.223428",

"local_mtime": "2021-12-06 11:37:10.945422",

"lost": 0,

"flags": [

"dirty",

"data_digest"

],

"legacy_snaps": [],

"truncate_seq": 0,

"truncate_size": 0,

"data_digest": "0x4bd4bd9c",

"omap_digest": "0xffffffff",

"expected_object_size": 0,

"expected_write_size": 0,

"alloc_hint_flags": 0,

"manifest": {

"type": 0,

"redirect_target": {

"oid": "",

"key": "",

"snapid": 0,

"hash": 0,

"max": 0,

"pool": -9223372036854775808,

"namespace": ""

}

},

"watchers": {}

}

[root@node101 10000000004.00000000]#

This time the decoded "_" attr shows object version 273'2, so this file is the onode of the object's final version.

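Once this onode file is imported into the key-value database, the object's onode becomes visible again. To do that we first need the key under which the onode is stored in RocksDB; we will come back to that later. First, let us locate the object's onode on node103.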

Check the PG log on node103.

[root@node103 10000000004.00000000]# ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-7/ --type bluestore --pgid 1.5s2 --op log

{

"pg_log_t": {

"head": "273'3",

"tail": "0'0",

"log": [

{

"op": "modify",

"object": "1:a367a3e8:::10000000004.00000000:head",

"version": "273'1",

"prior_version": "0'0",

"reqid": "mds.0.10:153",

"extra_reqids": [],

"mtime": "2021-12-06 11:36:46.637859",

"return_code": 0,

"mod_desc": {

"object_mod_desc": {

"can_local_rollback": true,

"rollback_info_completed": true,

"ops": [

{

"code": "CREATE"

}

]

}

}

},

{

"op": "modify",

"object": "1:a367a3e8:::10000000004.00000000:head",

"version": "273'2",

"prior_version": "273'1",

"reqid": "client.3606448.0:5",

"extra_reqids": [],

"mtime": "2021-12-06 11:36:40.223428",

"return_code": 0,

"mod_desc": {

"object_mod_desc": {

"can_local_rollback": true,

"rollback_info_completed": false,

"ops": [

{

"code": "SETATTRS",

"attrs": [

"_",

"hinfo_key",

"snapset"

]

},

{

"code": "APPEND",

"old_size": 0

}

]

}

}

},

{

"op": "delete",

"object": "1:a367a3e8:::10000000004.00000000:head",

"version": "273'3",

"prior_version": "273'2",

"reqid": "mds.0.10:177",

"extra_reqids": [],

"mtime": "2021-12-06 14:31:33.337004",

"return_code": 0,

"mod_desc": {

"object_mod_desc": {

"can_local_rollback": true,

"rollback_info_completed": true,

"ops": [

{

"code": "RMOBJECT",

"old_version": 3

}

]

}

}

}

],

"dups": []

},

"pg_missing_t": {

"missing": [],

"may_include_deletes": true

}

}

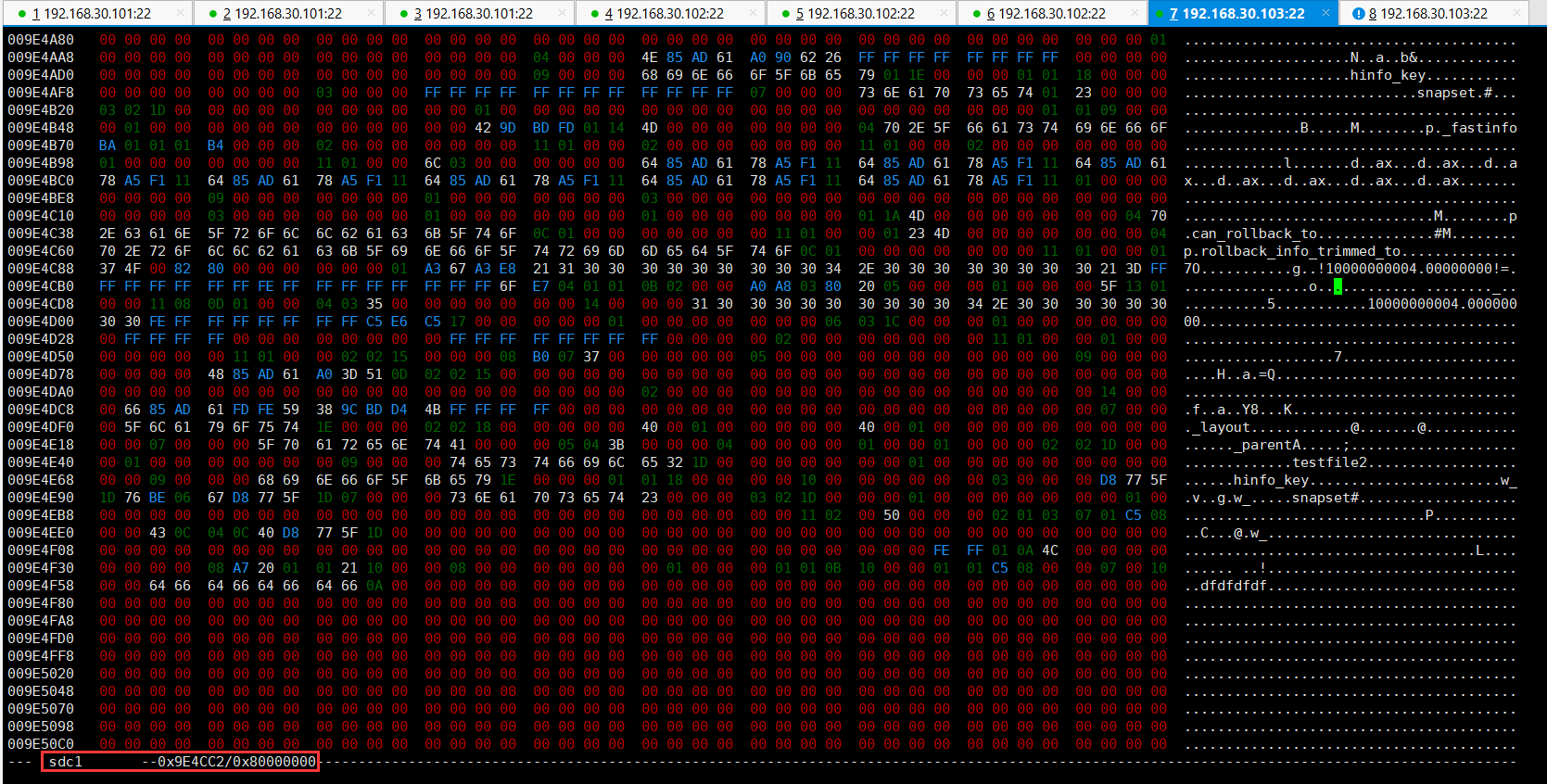

The PG log shows that on node103 the version before deletion is also 273'2. Next we look for the object's metadata on the DB partition of osd.7 on node103.

[root@node103 10000000004.00000000]# df

Filesystem 1K-blocks Used Available Use% Mounted on

devtmpfs 3804104 0 3804104 0% /dev

tmpfs 3821048 0 3821048 0% /dev/shm

tmpfs 3821048 17140 3803908 1% /run

tmpfs 3821048 0 3821048 0% /sys/fs/cgroup

/dev/mapper/centos-root 29128812 5332208 23796604 19% /

/dev/sda1 1038336 190544 847792 19% /boot

tmpfs 3821048 24 3821024 1% /var/lib/ceph/osd/ceph-2

tmpfs 3821048 24 3821024 1% /var/lib/ceph/osd/ceph-4

tmpfs 3821048 24 3821024 1% /var/lib/ceph/osd/ceph-7

192.168.30.101,192.168.30.102,192.168.30.103:/ 31682560 0 31682560 0% /vcluster/cephfs

tmpfs 764212 0 764212 0% /run/user/0

[root@node103 10000000004.00000000]# ll /var/lib/ceph/osd/ceph-7

total 24

lrwxrwxrwx 1 ceph ceph 93 Dec 2 08:55 block -> /dev/ceph-62d00893-de35-4aca-89a8-c7597d39cb2f/osd-block-eeeb3d1a-c77e-4057-8f18-51e2e6b97145

lrwxrwxrwx 1 ceph ceph 9 Dec 2 08:55 block.db -> /dev/sdc2

lrwxrwxrwx 1 ceph ceph 9 Dec 2 08:55 block.wal -> /dev/sdc1

-rw------- 1 ceph ceph 37 Dec 2 08:55 ceph_fsid

-rw------- 1 ceph ceph 37 Dec 2 08:55 fsid

-rw------- 1 ceph ceph 55 Dec 2 08:55 keyring

-rw------- 1 ceph ceph 6 Dec 2 08:55 ready

-rw------- 1 ceph ceph 10 Dec 2 08:55 type

-rw------- 1 ceph ceph 2 Dec 2 08:55 whoami

As before, the object's metadata has not been flushed from the WAL to the DB partition, so we search the WAL partition.

The screenshot above shows an onode record for object 10000000004.00000000 at offset 0x9E4CC2 of sdc1. We export it from the disk with dd, in the same way as on node101.

[root@node103 10000000004.00000000]# ceph-dencoder type bluestore_onode_t import 10000000004.00000000_onode decode dump_json

error: stray data at end of buffer, offset 529

[root@node103 10000000004.00000000]# hexdump -C 10000000004.00000000_onode |less

[root@node103 10000000004.00000000]# dd if=10000000004.00000000_onode of=10000000004.00000000_ bs=1 skip=24 count=275

275+0 records in

275+0 records out

275 bytes (275 B) copied, 0.00210391 s, 131 kB/s

[root@node103 10000000004.00000000]# ceph-dencoder import 10000000004.00000000_ type object_info_t decode dump_json

{

"oid": {

"oid": "10000000004.00000000",

"key": "",

"snapid": -2,

"hash": 398845637,

"max": 0,

"pool": 1,

"namespace": ""

},

"version": "273'2",

"prior_version": "273'1",

"last_reqid": "client.3606448.0:5",

"user_version": 2,

"size": 9,

"mtime": "2021-12-06 11:36:40.223428",

"local_mtime": "2021-12-06 11:37:10.945422",

"lost": 0,

"flags": [

"dirty",

"data_digest"

],

"legacy_snaps": [],

"truncate_seq": 0,

"truncate_size": 0,

"data_digest": "0x4bd4bd9c",

"omap_digest": "0xffffffff",

"expected_object_size": 0,

"expected_write_size": 0,

"alloc_hint_flags": 0,

"manifest": {

"type": 0,

"redirect_target": {

"oid": "",

"key": "",

"snapid": 0,

"hash": 0,

"max": 0,

"pool": -9223372036854775808,

"namespace": ""

}

},

"watchers": {}

}

The "_" attr decoded by ceph-dencoder shows object version 273'2, so this file is the onode of the object's final version.

VI. Recover the key name of the onode

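We now have the object's onode files from two OSDs. The erasure-coded pool uses a 2+1 profile (k=2, m=1), so the shards from any two nodes are enough to rebuild the object. Next we find the key under which the object's onode is stored in RocksDB.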

First start all the OSD services again.

[root@node101 10000000004.00000000]# systemctl start ceph-osd@6

[root@node102 10000000004.00000000]# systemctl start ceph-osd@8

[root@node103 10000000004.00000000]# systemctl start ceph-osd@7

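Use rados to create an object named 10000000004.00000000. The reasoning is the same as before: every object's oid is unique within the cluster, and the key of its onode in RocksDB is computed from the oid and the PG the object belongs to. As long as the object name and the pool are the same, the onode key computed within the same cluster is the same as well.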

[root@node103 10000000004.00000000]# rados -p jspool create 10000000004.00000000

Stop all the OSD services again.

[root@node101 10000000004.00000000]# systemctl stop ceph-osd@6

[root@node102 10000000004.00000000]# systemctl stop ceph-osd@8

[root@node103 10000000004.00000000]# systemctl stop ceph-osd@7

On node101, look up the onode of object 10000000004.00000000.

[root@node101 10000000004.00000000]# ceph-kvstore-tool bluestore-kv /var/lib/ceph/osd/ceph-6 list O 2>/dev/null | grep 10000000004.00000000

O %81%80%00%00%00%00%00%00%01%a3g%a3%e8%2110000000004.00000000%21%3d%ff%ff%ff%ff%ff%ff%ff%fe%ff%ff%ff%ff%ff%ff%ff%ffo

[root@node101 10000000004.00000000]# ceph-objectstore-tool --op list --data-path /var/lib/ceph/osd/ceph-6 --type bluestore |grep 10000000004.00000000

["1.5s1",{"oid":"10000000004.00000000","key":"","snapid":-2,"hash":398845637,"max":0,"pool":1,"namespace":"","shard_id":1,"max":0}]

[root@node101 10000000004.00000000]# ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-6 --type bluestore 10000000004.00000000 list-attrs

_

hinfo_key

snapset

[root@node101 10000000004.00000000]#
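The key printed by ceph-kvstore-tool has a readable structure. As far as I understand BlueStore's key encoding, it consists of a shard byte (0x80 plus the shard id, so %81 for shard 1), the pool id with the top bit set, the bit-reversed hash (the bytes a3 g a3 e8 spell a367a3e8), the empty namespace and the object name each terminated by "!" (%21), "=" (%3d), the snap and generation fields, and a trailing "o". Under that reading, the key on another shard differs only in the first byte. The helper below is a sketch of that idea with a made-up name; listing the key on each OSD, as the post does, remains the reliable way to obtain it.

```shell
# Derive the onode key of another EC shard by swapping the leading shard byte.
key_for_shard() {   # usage: key_for_shard KEY SHARD_ID
    ks_rest=$(printf '%s' "$1" | cut -c4-)          # drop the leading "%8N"
    printf '%%%02x%s\n' "$(( 0x80 + $2 ))" "$ks_rest"
}

key_s1='%81%80%00%00%00%00%00%00%01%a3g%a3%e8%2110000000004.00000000%21%3d%ff%ff%ff%ff%ff%ff%ff%fe%ff%ff%ff%ff%ff%ff%ff%ffo'
key_for_shard "$key_s1" 2
```

The printed key starts with %82 and is otherwise identical, which is exactly the key that the listing on osd.7 (shard 2) returns later in this post.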

VII. Import the object metadata

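Import the onode file of 10000000004.00000000 that we recovered from disk into the OSD's RocksDB. Before importing, the metadata of the newly created placeholder object must be removed.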

[root@node101 10000000004.00000000]# ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-6/ --type bluestore 10000000004.00000000 remove

remove 1#1:a367a3e8:::10000000004.00000000:head#

[root@node101 10000000004.00000000]# ceph-kvstore-tool bluestore-kv /var/lib/ceph/osd/ceph-6 list O 2>/dev/null | grep 10000000004.00000000

[root@node101 10000000004.00000000]# ceph-objectstore-tool --op list --data-path /var/lib/ceph/osd/ceph-6 --type bluestore |grep 10000000004.00000000

[root@node101 10000000004.00000000]#

Now import the onode file.

[root@node101 10000000004.00000000]# ceph-kvstore-tool bluestore-kv /var/lib/ceph/osd/ceph-6 set O %81%80%00%00%00%00%00%00%01%a3g%a3%e8%2110000000004.00000000%21%3d%ff%ff%ff%ff%ff%ff%ff%fe%ff%ff%ff%ff%ff%ff%ff%ffo in 10000000004.00000000_onode2 2>/dev/null

[root@node101 10000000004.00000000]# ceph-kvstore-tool bluestore-kv /var/lib/ceph/osd/ceph-6 list O 2>/dev/null | grep 10000000004.00000000

O %81%80%00%00%00%00%00%00%01%a3g%a3%e8%2110000000004.00000000%21%3d%ff%ff%ff%ff%ff%ff%ff%fe%ff%ff%ff%ff%ff%ff%ff%ffo

[root@node101 10000000004.00000000]# ceph-objectstore-tool --op list --data-path /var/lib/ceph/osd/ceph-6 --type bluestore |grep 10000000004.00000000

["1.5s1",{"oid":"10000000004.00000000","key":"","snapid":-2,"hash":398845637,"max":0,"pool":1,"namespace":"","shard_id":1,"max":0}]

[root@node101 10000000004.00000000]# ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-6 --type bluestore 10000000004.00000000 list-attrs

_

_layout

_parent

hinfo_key

snapset

[root@node101 10000000004.00000000]#

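After the import, the object has five attrs instead of three, which shows that the onode file was imported successfully. We then run fsck to check the object data on the OSD for errors.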

[root@node101 10000000004.00000000]# ceph-bluestore-tool --log-level 30 -l osd6.log1 --path /var/lib/ceph/osd/ceph-6 fsck

2021-12-06 17:30:14.719051 7f718091cec0 -1 bluestore(/var/lib/ceph/osd/ceph-6) 1#1:a367a3e8:::10000000004.00000000:head# extent 0x2300000~10000 or a subset is already allocated

2021-12-06 17:30:14.723530 7f718091cec0 -1 bluestore(/var/lib/ceph/osd/ceph-6) fsck error: actual store_statfs(0x3fd470000/0x43fc00000, stored 0x22c945/0x2780000, compress 0x0/0x0/0x0) != expected store_statfs(0x3fd470000/0x43fc00000, stored 0x22d945/0x2790000, compress 0x0/0x0/0x0)

fsck success

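fsck reports that the blob region the object used to occupy (extent 0x2300000~10000) is already allocated to another object. In other words the data has been overwritten, so it cannot be recovered from this OSD. To avoid affecting live data, we delete the imported onode again.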

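Trying the OSD on node103 gives the same result: the extent is already in use there too. Unfortunately, the data cannot be recovered this time.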

[root@node103 10000000004.00000000]# ceph-kvstore-tool bluestore-kv /var/lib/ceph/osd/ceph-7 list O 2>/dev/null | grep 10000000004.00000000

O %82%80%00%00%00%00%00%00%01%a3g%a3%e8%2110000000004.00000000%21%3d%ff%ff%ff%ff%ff%ff%ff%fe%ff%ff%ff%ff%ff%ff%ff%ffo

[root@node103 10000000004.00000000]# ceph-objectstore-tool --op list --data-path /var/lib/ceph/osd/ceph-7 --type bluestore |grep 10000000004.00000000

Error getting attr on : 1.11s0_head,0#-3:88000000:::scrub_1.11s0:head#, (61) No data available

["1.5s2",{"oid":"10000000004.00000000","key":"","snapid":-2,"hash":398845637,"max":0,"pool":1,"namespace":"","shard_id":2,"max":0}]

[root@node103 10000000004.00000000]# ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-7 --type bluestore 10000000004.00000000 list-attrs

Error getting attr on : 1.11s0_head,0#-3:88000000:::scrub_1.11s0:head#, (61) No data available

_

hinfo_key

snapset

[root@node103 10000000004.00000000]# ceph-objectstore-tool --data-path /var/lib/ceph/osd/ceph-7/ --type bluestore 10000000004.00000000 remove

Error getting attr on : 1.11s0_head,0#-3:88000000:::scrub_1.11s0:head#, (61) No data available

remove 2#1:a367a3e8:::10000000004.00000000:head#

[root@node103 10000000004.00000000]# ceph-kvstore-tool bluestore-kv /var/lib/ceph/osd/ceph-7 set O %82%80%00%00%00%00%00%00%01%a3g%a3%e8%2110000000004.00000000%21%3d%ff%ff%ff%ff%ff%ff%ff%fe%ff%ff%ff%ff%ff%ff%ff%ffo in 10000000004.00000000_onode 2>/dev/null

[root@node103 10000000004.00000000]# ceph-kvstore-tool bluestore-kv /var/lib/ceph/osd/ceph-7 list O 2>/dev/null | grep 10000000004.00000000

O %82%80%00%00%00%00%00%00%01%a3g%a3%e8%2110000000004.00000000%21%3d%ff%ff%ff%ff%ff%ff%ff%fe%ff%ff%ff%ff%ff%ff%ff%ffo

[root@node103 10000000004.00000000]# ceph-bluestore-tool --log-level 30 -l osd7.log1 --path /var/lib/ceph/osd/ceph-7 fsck

2021-12-06 17:43:54.426073 7f32b5e73ec0 -1 bluestore(/var/lib/ceph/osd/ceph-7) 2#1:a367a3e8:::10000000004.00000000:head# extent 0x2310000~10000 or a subset is already allocated

2021-12-06 17:43:54.427021 7f32b5e73ec0 -1 bluestore(/var/lib/ceph/osd/ceph-7) fsck error: actual store_statfs(0x3fd4f0000/0x43fc00000, stored 0x227279/0x2700000, compress 0x0/0x0/0x0) != expected store_statfs(0x3fd4f0000/0x43fc00000, stored 0x228279/0x2710000, compress 0x0/0x0/0x0)

fsck success
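Both fsck runs report an extent that "is already allocated". On osd.6 the fsck debug log quoted in the last section shows that the same extent, 0x2300000~10000, now belongs to inc_osdmap.274. The overlap test behind that conclusion is simple interval arithmetic; the sketch below spells it out with a hypothetical helper. fsck prints the extent length in hex without a 0x prefix, so the prefix is added by hand here.

```shell
# Do the extents [off1, off1+len1) and [off2, off2+len2) share any byte?
extents_overlap() {   # usage: extents_overlap OFF1 LEN1 OFF2 LEN2
    eo_a=$(( $1 )); eo_al=$(( $2 )); eo_b=$(( $3 )); eo_bl=$(( $4 ))
    [ "$eo_a" -lt $(( eo_b + eo_bl )) ] && [ "$eo_b" -lt $(( eo_a + eo_al )) ]
}

# Extent of the recovered onode on osd.6 vs. the blob of inc_osdmap.274.
if extents_overlap 0x2300000 0x10000 0x2300000 0x10000; then
    echo "extent already reused: the old data has been overwritten"
fi
```

If the extents referenced by a recovered onode do not overlap with anything currently allocated, fsck would not raise this error and the data blocks may still be intact.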

VIII. Key points for recovery

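1. When recovering object data, stop all OSDs that held the object as early as possible and do not start them again afterwards. Frequent OSD restarts write new osdmap objects, which can easily land on the disk region that held the object's data; once that region is overwritten, the data cannot be recovered. The fsck debug log from osd.6 shows the extent 0x2300000~10000 now being used by inc_osdmap.274: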

2021-12-06 17:30:14.563304 7f718091cec0 20 bluestore(/var/lib/ceph/osd/ceph-6).collection(meta 0x55ded8e69c00) get_onode oid #-1:43573e63:::inc_osdmap.274:0# key 0x7f7fffffffffffffff43573e'c!inc_osdmap.274!='0x0000000000000000ffffffffffffffff'o'

2021-12-06 17:30:14.563355 7f718091cec0 20 bluestore(/var/lib/ceph/osd/ceph-6).collection(meta 0x55ded8e69c00) r 0 v.len 110

2021-12-06 17:30:14.563377 7f718091cec0 20 bluestore.blob(0x55ded8d6f0a0) get_ref 0x0~614 Blob(0x55ded8d6f0a0 blob([0x2300000~10000] csum+has_unused crc32c/0x1000 unused=0xfffe) use_tracker(0x0 0x0) SharedBlob(0x55ded8d6f110 sbid 0x0))

2021-12-06 17:30:14.563392 7f718091cec0 20 bluestore.blob(0x55ded8d6f0a0) get_ref init 0x10000, 10000

2021-12-06 17:30:14.563398 7f718091cec0 30 bluestore.OnodeSpace(0x55ded8e69d48 in 0x55ded8b32460) add #-1:43573e63:::inc_osdmap.274:0# 0x55ded8d68240

2021-12-06 17:30:14.563410 7f718091cec0 30 bluestore.extentmap(0x55ded8d68330) fault_range 0x0~ffffffff

2021-12-06 17:30:14.563415 7f718091cec0 30 bluestore(/var/lib/ceph/osd/ceph-6) _dump_onode 0x55ded8d68240 #-1:43573e63:::inc_osdmap.274:0# nid 51204 size 0x614 (1556) expected_object_size 0 expected_write_size 0 in 0 shards, 0 spanning blobs

2021-12-06 17:30:14.563425 7f718091cec0 30 bluestore(/var/lib/ceph/osd/ceph-6) _dump_extent_map 0x0~614: 0x0~614 Blob(0x55ded8d6f0a0 blob([0x2300000~10000] csum+has_unused crc32c/0x1000 unused=0xfffe) use_tracker(0x10000 0x614) SharedBlob(0x55ded8d6f110 sbid 0x0))

2021-12-06 17:30:14.563439 7f718091cec0 30 bluestore(/var/lib/ceph/osd/ceph-6) _dump_extent_map csum: [c905fabd,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0]

2021-12-06 17:30:14.563448 7f718091cec0 20 bluestore(/var/lib/ceph/osd/ceph-6) _fsck 0x0~614: 0x0~614 Blob(0x55ded8d6f0a0 blob([0x2300000~10000] csum+has_unused crc32c/0x1000 unused=0xfffe) use_tracker(0x10000 0x614) SharedBlob(0x55ded8d6f110 sbid 0x0))

2021-12-06 17:30:14.563460 7f718091cec0 20 bluestore(/var/lib/ceph/osd/ceph-6) _fsck referenced 0x1 for Blob(0x55ded8d6f0a0 blob([0x2300000~10000] csum+has_unused crc32c/0x1000 unused=0xfffe) use_tracker(0x10000 0x614) SharedBlob(0x55ded8d6f110 sbid 0x0))

2021-12-06 17:30:14.563473 7f718091cec0 30 bluestore(/var/lib/ceph/osd/ceph-6) _fsck_check_extents oid #-1:43573e63:::inc_osdmap.274:0# extents [0x2300000~10000]

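2. If the data is in a replicated pool, it is enough for one OSD to hold a complete copy of the object. After the object has been restored on that OSD, a repair command brings the other replicas back.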

3. If the data is in an erasure-coded pool, it is worth trying several OSDs.

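4. After the object metadata has been restored, use ceph-objectstore-tool to export the object's data and all of its onode attrs, then delete the manually imported metadata, and finally import the data and attrs back into the OSD with ceph-objectstore-tool. Only then is the data fully recovered: the blob and nid referenced by the original metadata can no longer be used (they were marked as deleted), so the object data has to be re-imported into the OSD to get a new blob and nid.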
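Point 4 was never reached in this attempt, so the following is only a sketch of how the export and re-import might be sequenced. It prints the commands instead of running them, so they can be reviewed first. The subcommands get-bytes, get-attr, set-bytes, set-attr and remove are my assumption of the ceph-objectstore-tool operations involved; the output file names and the helper `plan_reimport` are hypothetical, and whether set-bytes recreates a removed object should be verified on a test OSD for the release in use.

```shell
# Print (not run) the export / remove / re-import sequence for one object on one OSD.
plan_reimport() {   # usage: plan_reimport OSD_PATH OBJECT ATTR...
    pr_path=$1; pr_obj=$2; shift 2
    pr_tool="ceph-objectstore-tool --data-path $pr_path --type bluestore $pr_obj"
    echo "$pr_tool get-bytes $pr_obj.data"                    # export object data
    for pr_a in "$@"; do
        echo "$pr_tool get-attr $pr_a > $pr_obj.attr.$pr_a"   # export each attr
    done
    echo "$pr_tool remove"                                    # drop the hand-imported onode
    echo "$pr_tool set-bytes $pr_obj.data"                    # re-import data (new blob and nid)
    for pr_a in "$@"; do
        echo "$pr_tool set-attr $pr_a $pr_obj.attr.$pr_a"     # re-import each attr
    done
}

plan_reimport /var/lib/ceph/osd/ceph-6 10000000004.00000000 _ _layout _parent hinfo_key snapset
```

All of these commands require the OSD to be stopped, as with every other ceph-objectstore-tool call in this post.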