Training YOLOv3 on your own dataset in Colab

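The dataset used here comes from the Tianchi competition 零基础入门CV - 街景字符编码识别 (Introduction to CV: street-view character recognition).

The darknet project actually supports YOLOv3, YOLOv4 and more. Here I only test YOLOv3, and YOLOv3 and YOLOv4 are used in much the same way.

For that competition, another blogger has already written up how to get good results with YOLO: https://tianchi.aliyun.com/notebook-ai/detail?spm=5176.12586969.1002.108.2ce879de4cKZcz&postId=118780

My main focus here is getting the project to run end to end. For best practices, refer to that post afterwards.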

References:

- https://www.cnblogs.com/monologuesmw/p/13035442.html

- https://blog.csdn.net/weixin_38353277/article/details/105841023

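This post was converted from an .ipynb notebook to markdown, so it may not read very nicely. You can download the source file here: https://files.cnblogs.com/files/jiading/yolo_in_colab.zip. Note that the source file does not include the conclusion section at the end of this post.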

# Check which GPU Colab has assigned

!/opt/bin/nvidia-smi

Mon Sep 28 05:14:09 2020

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 418.67 Driver Version: 418.67 CUDA Version: 10.1 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 Tesla T4 Off | 00000000:00:04.0 Off | 0 |

| N/A 36C P8 9W / 70W | 0MiB / 15079MiB | 0% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

# Clone the project

!git clone https://github.com/AlexeyAB/darknet

Cloning into 'darknet'...

remote: Enumerating objects: 14321, done.

remote: Total 14321 (delta 0), reused 0 (delta 0), pack-reused 14321

Receiving objects: 100% (14321/14321), 12.87 MiB | 22.64 MiB/s, done.

Resolving deltas: 100% (9772/9772), done.

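# Edit the Makefile to enable OpenCV, GPU and cuDNN
%cd darknet
'''
sed is the Linux stream editor: it edits text files according to a script.
-i edits the file in place;
's/OPENCV=0/OPENCV=1/' replaces the first occurrence of OPENCV=0 on each line with OPENCV=1.
'''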

!sed -i 's/OPENCV=0/OPENCV=1/' Makefile

!sed -i 's/GPU=0/GPU=1/' Makefile

!sed -i 's/CUDNN=0/CUDNN=1/' Makefile

/content/darknet
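The three `sed -i 's/FLAG=0/FLAG=1/' Makefile` commands above just flip build flags from 0 to 1. Here is a minimal Python sketch of the same substitution for readers less used to sed. It runs on a made-up Makefile excerpt rather than the real file, and `enable_flags` is a hypothetical helper:

```python
def enable_flags(makefile_text, flags=("OPENCV", "GPU", "CUDNN")):
    """Mimic `sed -i 's/FLAG=0/FLAG=1/'` for each flag: replace the first match on every line."""
    lines = makefile_text.splitlines(keepends=True)
    for flag in flags:
        # sed's s/// without the g flag only touches the first match on each line
        lines = [line.replace(flag + "=0", flag + "=1", 1) for line in lines]
    return "".join(lines)

# Made-up Makefile excerpt, for illustration only
sample = "GPU=0\nCUDNN=0\nCUDNN_HALF=0\nOPENCV=0\n"
print(enable_flags(sample))
```

After editing the real Makefile, printing its first lines (e.g. `!head Makefile`) is a cheap way to confirm the flags actually changed before running `make`.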

# Verify the CUDA version

!/usr/local/cuda/bin/nvcc --version

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2019 NVIDIA Corporation

Built on Sun_Jul_28_19:07:16_PDT_2019

Cuda compilation tools, release 10.1, V10.1.243

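Run the demo and display the bounding boxes

This step only checks that the environment is set up correctly and the build succeeded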

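# Download the weights pretrained on COCO and save them in the darknet folder
#!wget https://pjreddie.com/media/files/yolov3.weights
# You can keep a copy of the weights on Google Drive and copy it from there later, which is a bit faster than downloading
# (this assumes Google Drive is already mounted at /content/drive)
# Save a copy first
#!cp /content/darknet/yolov3.weights '/content/drive/My Drive/cvComp1Realted/yolov3.weights'
# Then copy it back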

!cp '/content/drive/My Drive/cvComp1Realted/yolov3.weights' /content/darknet/yolov3.weights

# Build the project to produce the darknet executable

!make

# Define imShow: read an image with OpenCV and display it with matplotlib
import cv2
import matplotlib.pyplot as plt
%matplotlib inline

def imShow(path):
    image = cv2.imread(path)
    height, width = image.shape[:2]
    # Enlarge 3x so small images are easier to see
    resized_image = cv2.resize(image, (3 * width, 3 * height), interpolation=cv2.INTER_CUBIC)
    fig = plt.gcf()
    fig.set_size_inches(18, 10)
    plt.axis("off")
    # OpenCV loads images as BGR; matplotlib expects RGB
    plt.imshow(cv2.cvtColor(resized_image, cv2.COLOR_BGR2RGB))
    plt.show()

# Run the demo

!./darknet detect cfg/yolov3.cfg yolov3.weights data/person.jpg

imShow('predictions.jpg')

CUDA-version: 10010 (10010), cuDNN: 7.6.5, GPU count: 1

OpenCV version: 3.2.0

0 : compute_capability = 750, cudnn_half = 0, GPU: Tesla T4

net.optimized_memory = 0

mini_batch = 1, batch = 1, time_steps = 1, train = 0

layer filters size/strd(dil) input output

0 conv 32 3 x 3/ 1 416 x 416 x 3 -> 416 x 416 x 32 0.299 BF

1 conv 64 3 x 3/ 2 416 x 416 x 32 -> 208 x 208 x 64 1.595 BF

2 conv 32 1 x 1/ 1 208 x 208 x 64 -> 208 x 208 x 32 0.177 BF

3 conv 64 3 x 3/ 1 208 x 208 x 32 -> 208 x 208 x 64 1.595 BF

4 Shortcut Layer: 1, wt = 0, wn = 0, outputs: 208 x 208 x 64 0.003 BF

5 conv 128 3 x 3/ 2 208 x 208 x 64 -> 104 x 104 x 128 1.595 BF

6 conv 64 1 x 1/ 1 104 x 104 x 128 -> 104 x 104 x 64 0.177 BF

7 conv 128 3 x 3/ 1 104 x 104 x 64 -> 104 x 104 x 128 1.595 BF

8 Shortcut Layer: 5, wt = 0, wn = 0, outputs: 104 x 104 x 128 0.001 BF

9 conv 64 1 x 1/ 1 104 x 104 x 128 -> 104 x 104 x 64 0.177 BF

10 conv 128 3 x 3/ 1 104 x 104 x 64 -> 104 x 104 x 128 1.595 BF

11 Shortcut Layer: 8, wt = 0, wn = 0, outputs: 104 x 104 x 128 0.001 BF

12 conv 256 3 x 3/ 2 104 x 104 x 128 -> 52 x 52 x 256 1.595 BF

13 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

14 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

15 Shortcut Layer: 12, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

16 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

17 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

18 Shortcut Layer: 15, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

19 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

20 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

21 Shortcut Layer: 18, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

22 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

23 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

24 Shortcut Layer: 21, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

25 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

26 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

27 Shortcut Layer: 24, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

28 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

29 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

30 Shortcut Layer: 27, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

31 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

32 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

33 Shortcut Layer: 30, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

34 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

35 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

36 Shortcut Layer: 33, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

37 conv 512 3 x 3/ 2 52 x 52 x 256 -> 26 x 26 x 512 1.595 BF

38 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

39 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

40 Shortcut Layer: 37, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

41 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

42 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

43 Shortcut Layer: 40, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

44 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

45 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

46 Shortcut Layer: 43, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

47 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

48 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

49 Shortcut Layer: 46, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

50 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

51 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

52 Shortcut Layer: 49, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

53 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

54 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

55 Shortcut Layer: 52, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

56 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

57 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

58 Shortcut Layer: 55, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

59 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

60 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

61 Shortcut Layer: 58, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

62 conv 1024 3 x 3/ 2 26 x 26 x 512 -> 13 x 13 x1024 1.595 BF

63 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

64 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

65 Shortcut Layer: 62, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

66 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

67 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

68 Shortcut Layer: 65, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

69 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

70 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

71 Shortcut Layer: 68, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

72 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

73 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

74 Shortcut Layer: 71, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

75 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

76 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

77 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

78 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

79 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

80 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

81 conv 255 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 255 0.088 BF

82 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, obj_norm: 1.00, cls_norm: 1.00, delta_norm: 1.00, scale_x_y: 1.00

83 route 79 -> 13 x 13 x 512

84 conv 256 1 x 1/ 1 13 x 13 x 512 -> 13 x 13 x 256 0.044 BF

85 upsample 2x 13 x 13 x 256 -> 26 x 26 x 256

86 route 85 61 -> 26 x 26 x 768

87 conv 256 1 x 1/ 1 26 x 26 x 768 -> 26 x 26 x 256 0.266 BF

88 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

89 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

90 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

91 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

92 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

93 conv 255 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 255 0.177 BF

94 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, obj_norm: 1.00, cls_norm: 1.00, delta_norm: 1.00, scale_x_y: 1.00

95 route 91 -> 26 x 26 x 256

96 conv 128 1 x 1/ 1 26 x 26 x 256 -> 26 x 26 x 128 0.044 BF

97 upsample 2x 26 x 26 x 128 -> 52 x 52 x 128

98 route 97 36 -> 52 x 52 x 384

99 conv 128 1 x 1/ 1 52 x 52 x 384 -> 52 x 52 x 128 0.266 BF

100 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

101 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

102 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

103 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

104 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

105 conv 255 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 255 0.353 BF

106 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, obj_norm: 1.00, cls_norm: 1.00, delta_norm: 1.00, scale_x_y: 1.00

Total BFLOPS 65.879

avg_outputs = 532444

Allocate additional workspace_size = 52.43 MB

Loading weights from yolov3.weights...

seen 64, trained: 32013 K-images (500 Kilo-batches_64)

Done! Loaded 107 layers from weights-file

Detection layer: 82 - type = 28

Detection layer: 94 - type = 28

Detection layer: 106 - type = 28

data/person.jpg: Predicted in 41.478000 milli-seconds.

dog: 99%

person: 100%

horse: 100%

Unable to init server: Could not connect: Connection refused

(predictions:1181): Gtk-WARNING **: 05:16:13.455: cannot open display:

Extract the data for our CV beginner competition into a working directory

# Check the current directory first

!pwd

/content/darknet

# Copy the raw data down from Google Drive

!mkdir /content/input

!mkdir /content/input/train

!mkdir /content/input/val

!mkdir /content/input/test

# Paths that contain spaces must be quoted

!unzip '/content/drive/My Drive/cvComp1Realted/mchar_train.zip' -d /content/input/train

!unzip '/content/drive/My Drive/cvComp1Realted/mchar_val.zip' -d /content/input/val

!unzip '/content/drive/My Drive/cvComp1Realted/mchar_test_a.zip' -d /content/input/test

!cp '/content/drive/My Drive/cvComp1Realted/mchar_train.json' /content/input

!cp '/content/drive/My Drive/cvComp1Realted/mchar_val.json' /content/input

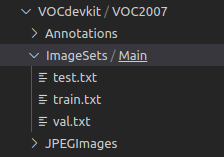

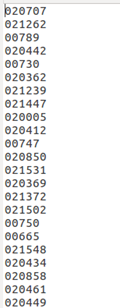

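Organize the dataset in the VOC-style layout that the YOLO tooling expects:

- Annotations holds the XML label files

- JPEGImages holds the images

- the Main folder inside ImageSets holds the generated lists of image names, for example: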

# Create the folders following the VOC dataset layout

!mkdir /content/VOCdevkit/

!mkdir /content/VOCdevkit/VOC2007

!mkdir /content/VOCdevkit/VOC2007/Annotations

!mkdir /content/VOCdevkit/VOC2007/ImageSets

!mkdir /content/VOCdevkit/VOC2007/JPEGImages

!mkdir /content/VOCdevkit/VOC2007/ImageSets/Main

!mkdir /content/VOCdevkit/VOC2007/labels

import glob

# Collect the image paths; their names will be written into ImageSets

test_path = glob.glob('/content/input/test/mchar_test_a/*.png')

train_path=glob.glob('/content/input/train/mchar_train/*.png')

val_path=glob.glob('/content/input/val/mchar_val/*.png')

# This is what a path looks like

train_path[0]

'/content/input/train/mchar_train/000653.png'

# Rename the original images, copy them all into JPEGImages, and write their paths to txt files.
# The images in train/val/test are all numbered from 000000.png, so they would clash without a prefix.
from shutil import copyfile
import os

path = '/content/VOCdevkit/VOC2007/JPEGImages'
list_dir = '/content/VOCdevkit/VOC2007/ImageSets/Main'

for prefix, paths in (('train', train_path), ('val', val_path), ('test', test_path)):
    with open(os.path.join(list_dir, prefix + '.txt'), 'w') as f:
        for item in paths:
            # '/content/input/train/mchar_train/000653.png' -> 'train_000653'
            filename = prefix + '_' + os.path.basename(item).split('.')[0]
            topath = os.path.join(path, filename + '.png')
            # One full image path per line
            f.write(topath + '\n')
            copyfile(item, topath)

Build the label files in XML format

The required XML format is shown in the figure below:

# Load our JSON label files

import json

train_labels=json.load(open('/content/input/mchar_train.json'))

val_labels=json.load(open('/content/input/mchar_val.json'))

# Look at one label

train_labels['000000.png']

{'height': [219, 219],

'label': [1, 9],

'left': [246, 323],

'top': [77, 81],

'width': [81, 96]}
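The label printed above stores, for each character, the box's top-left corner (`left`, `top`) plus its `width` and `height` in pixels. `create_xml` below turns this into VOC corner coordinates (`xmax = left + width`, `ymax = top + height`), and the modified `voc_label.py` later turns the corners into YOLO's normalized center format. Here is a minimal sketch of both steps for this label. The image size `741 x 350` is a made-up value, because the real size of `000000.png` is not shown here, and the helper names are hypothetical:

```python
def json_to_voc(entry):
    """Turn one mchar JSON entry into (label, xmin, ymin, xmax, ymax) tuples in pixels."""
    boxes = []
    for label, left, top, w, h in zip(entry['label'], entry['left'], entry['top'],
                                      entry['width'], entry['height']):
        boxes.append((label, left, top, left + w, top + h))
    return boxes

def voc_to_yolo(img_w, img_h, xmin, ymin, xmax, ymax):
    """Same arithmetic as convert() in voc_label.py (including its '- 1'):
    normalized (x_center, y_center, width, height)."""
    x = ((xmin + xmax) / 2.0 - 1) / img_w
    y = ((ymin + ymax) / 2.0 - 1) / img_h
    return x, y, (xmax - xmin) / img_w, (ymax - ymin) / img_h

entry = {'height': [219, 219], 'label': [1, 9], 'left': [246, 323],
         'top': [77, 81], 'width': [81, 96]}
IMG_W, IMG_H = 741, 350  # hypothetical image size, for illustration only
for label, *box in json_to_voc(entry):
    print(label, box, voc_to_yolo(IMG_W, IMG_H, *box))
```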

# key: the file name without the prefix; type_name: train/test/val
# pic_path: the folder the images were copied into (JPEGImages)
# This just writes the XML file node by node; to simplify further, you could fill in an XML template instead
import os
import xml.dom.minidom as minidom
from PIL import Image

def create_xml(key, value, type_name, xml_path, pic_path):
    dom = minidom.Document()

    def add_node(parent, tag, text=None):
        # Create <tag>, give it an optional text child, and attach it to parent
        node = dom.createElement(tag)
        if text is not None:
            node.appendChild(dom.createTextNode(str(text)))
        parent.appendChild(node)
        return node

    filename = type_name + '_' + key.split('.')[0]
    # Read the image size before opening the XML file, so a missing image
    # raises FileNotFoundError without leaving an empty XML file behind
    with Image.open(os.path.join(pic_path, filename + '.png')) as img:
        img_width, img_height = img.width, img.height

    annotation = add_node(dom, 'annotation')
    add_node(annotation, 'folder', 'VOC2007')
    add_node(annotation, 'filename', filename + '.png')
    source = add_node(annotation, 'source')
    add_node(source, 'database', 'My Database')
    add_node(source, 'annotation', 'PASCAL VOC2007')
    add_node(source, 'image', 'flickr')
    add_node(source, 'flickrid', 'NULL')
    owner = add_node(annotation, 'owner')
    add_node(owner, 'flickrid', 'NULL')
    add_node(owner, 'name', 'company')
    size = add_node(annotation, 'size')
    add_node(size, 'width', img_width)
    add_node(size, 'height', img_height)
    add_node(size, 'depth', 3)
    add_node(annotation, 'segmented', 0)

    if value is not None:
        # One <object> per character; JSON gives left/top/width/height, VOC wants corners
        for i, label in enumerate(value['label']):
            obj = add_node(annotation, 'object')
            add_node(obj, 'name', label)
            add_node(obj, 'pose', 'Unspecified')
            add_node(obj, 'truncated', 0)
            add_node(obj, 'difficult', 0)
            bndbox = add_node(obj, 'bndbox')
            add_node(bndbox, 'xmin', value['left'][i])
            add_node(bndbox, 'ymin', value['top'][i])
            add_node(bndbox, 'xmax', value['left'][i] + value['width'][i])
            add_node(bndbox, 'ymax', value['top'][i] + value['height'][i])

    with open(os.path.join(xml_path, filename + '.xml'), 'w') as f:
        dom.writexml(f, addindent='\t', newl='\n', encoding='utf-8')
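The comment above `create_xml` suggests filling an XML template instead of building nodes one by one. Here is a minimal sketch of that idea using the standard library's `string.Template`. It writes the fields that `voc_label.py` reads (`size`, `object/name`, `difficult`, `bndbox`) and leaves out the `source`/`owner` boilerplate. The templates and the `render_voc_xml` name are hypothetical:

```python
from string import Template
import xml.etree.ElementTree as ET

# Hypothetical templates: the fields voc_label.py reads, plus a few usual VOC extras
ANNOTATION = Template("""<annotation>
  <folder>VOC2007</folder>
  <filename>$filename</filename>
  <size>
    <width>$width</width>
    <height>$height</height>
    <depth>3</depth>
  </size>
  <segmented>0</segmented>
$objects</annotation>
""")

OBJECT = Template("""  <object>
    <name>$name</name>
    <pose>Unspecified</pose>
    <truncated>0</truncated>
    <difficult>0</difficult>
    <bndbox>
      <xmin>$xmin</xmin>
      <ymin>$ymin</ymin>
      <xmax>$xmax</xmax>
      <ymax>$ymax</ymax>
    </bndbox>
  </object>
""")

def render_voc_xml(filename, width, height, value):
    """Fill the templates for one image; value is an mchar JSON entry, or None if unlabeled."""
    objects = ""
    if value is not None:
        for i, label in enumerate(value['label']):
            objects += OBJECT.substitute(
                name=label,
                xmin=value['left'][i], ymin=value['top'][i],
                xmax=value['left'][i] + value['width'][i],
                ymax=value['top'][i] + value['height'][i])
    return ANNOTATION.substitute(filename=filename, width=width, height=height,
                                 objects=objects)

# The width/height are made-up values; in practice read them with PIL as create_xml does
xml_text = render_voc_xml('train_000000.png', 741, 350,
                          {'height': [219, 219], 'label': [1, 9], 'left': [246, 323],
                           'top': [77, 81], 'width': [81, 96]})
root = ET.fromstring(xml_text)
print([obj.find('name').text for obj in root.iter('object')])
```

Parsing the result back with `ElementTree` is a quick way to confirm that the generated file is well-formed before converting thousands of them.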

# Try creating one XML file

#def create_xml(key,value,type_name,xml_path,pic_path):

xml_path='/content/VOCdevkit/VOC2007/Annotations'

pic_path='/content/VOCdevkit/VOC2007/JPEGImages'

create_xml('000000.png',train_labels['000000.png'],'train',xml_path=xml_path,pic_path=pic_path)

# If that works, convert all of them
for key, value in train_labels.items():
    try:
        create_xml(key, value, 'train', xml_path=xml_path, pic_path=pic_path)
    except FileNotFoundError:
        # Print the labels whose image is missing, then skip them
        print(key)

for key, value in val_labels.items():
    try:
        create_xml(key, value, 'val', xml_path=xml_path, pic_path=pic_path)
    except FileNotFoundError:
        print(key)

# XML files for the test set are optional: I removed the test part from voc_label.py
'''
test_path = glob.glob('/content/input/test/mchar_test_a/*.png')
for i in test_path:
    name = i.split('/')
    try:
        create_xml(name[len(name)-1], None, 'test', xml_path=xml_path, pic_path=pic_path)
    except FileNotFoundError:
        print(name[len(name)-1])
'''

# Colab already has this installed

!pip install opencv-python

Requirement already satisfied: opencv-python in /usr/local/lib/python3.6/dist-packages (4.1.2.30)

Requirement already satisfied: numpy>=1.11.3 in /usr/local/lib/python3.6/dist-packages (from opencv-python) (1.18.5)

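Edit the parameters:

In the cfg folder, edit the following in yolov3.cfg:

- batch and subdivisions at the very top of the file (Colab provides a Tesla T4 with about 16 GB of GPU memory, so batch=128 works well)

- in the three [yolo] sections at the end, classes, and the filters of the [convolutional] section directly before each [yolo] (equal to (5 + number of classes) * 3, i.e. 45 for the 10 digit classes here)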
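The cfg edits listed above can also be scripted instead of done by hand. Here is a minimal sketch that assumes the usual `yolov3.cfg` layout, where each `[yolo]` section directly follows a `[convolutional]` section whose `filters` must become `(5 + classes) * 3`. `patch_cfg` is a hypothetical helper. The `subdivisions` value in the example is illustrative, since the post does not state the one used:

```python
def patch_cfg(cfg_text, classes, batch, subdivisions):
    """Set batch/subdivisions in [net], classes in every [yolo] section, and the filters of
    the layer right before every [yolo] to (5 + classes) * 3. Comment lines are left alone."""
    lines = cfg_text.splitlines()
    section = None
    last_filters = None  # index of the most recent 'filters=' line seen so far
    for i, raw in enumerate(lines):
        line = raw.strip()
        if line.startswith('['):
            section = line
            if section == '[yolo]' and last_filters is not None:
                lines[last_filters] = 'filters=%d' % ((5 + classes) * 3)
            continue
        if line.startswith('#') or '=' not in line:
            continue
        key = line.split('=', 1)[0].strip()
        if section == '[net]' and key in ('batch', 'subdivisions'):
            lines[i] = '%s=%d' % (key, batch if key == 'batch' else subdivisions)
        elif section == '[yolo]' and key == 'classes':
            lines[i] = 'classes=%d' % classes
        elif key == 'filters':
            last_filters = i
    return '\n'.join(lines) + '\n'

# Made-up excerpt in the same layout as yolov3.cfg
sample = """[net]
batch=64
subdivisions=16

[convolutional]
size=1
filters=255
activation=linear

[yolo]
classes=80
"""
print(patch_cfg(sample, classes=10, batch=128, subdivisions=16))
# To patch the real file (path assumed):
# with open('cfg/yolov3.cfg') as f: text = f.read()
# with open('cfg/yolov3.cfg', 'w') as f: f.write(patch_cfg(text, 10, 128, 16))
```

The later test run's log (`conv 45` before each `yolo` layer) is what a correctly patched cfg should show.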

!pwd

/content/darknet

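# Write the train.data file
# Note: darknet removes all spaces when it parses .data files, so a path containing 'My Drive' may not be read as written
with open('train.data', 'w') as f:
    f.write('classes=10\n'
            'train=/content/VOCdevkit/VOC2007/ImageSets/Main/train.txt\n'
            'valid=/content/VOCdevkit/VOC2007/ImageSets/Main/val.txt\n'
            'names=train.names\n'
            'backup=/content/drive/My Drive/cvComp1Realted/backup\n')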
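The backup path in train.data contains a space (`My Drive`). That matters because of how darknet reads `.data` files. As far as I can tell from its source, each line goes through a `strip()` helper that deletes every space and tab anywhere in the line (not only at the ends), and the result is then split on the first `=`. Here is a rough Python emulation of that behavior, an approximation rather than a port of the C code, showing what happens to the path used here:

```python
def parse_data_cfg(text):
    """Rough emulation of darknet's .data parsing: drop all whitespace, skip comments, split on '='."""
    options = {}
    for raw in text.splitlines():
        line = ''.join(ch for ch in raw if ch not in ' \t\r\n')  # like darknet's strip()
        if not line or line[0] in '#;':
            continue
        key, sep, value = line.partition('=')
        if sep:
            options[key] = value
    return options

data = ("classes=10\n"
        "names=train.names\n"
        "backup=/content/drive/My Drive/cvComp1Realted/backup\n")
print(parse_data_cfg(data)['backup'])  # /content/drive/MyDrive/cvComp1Realted/backup
```

On newer Colab versions, Drive is mounted at `/content/drive/MyDrive` (no space), which sidesteps the problem. A symlink without spaces, such as `!ln -s '/content/drive/My Drive/cvComp1Realted' /content/comp` with a made-up target name, is another option. Both are untested suggestions.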

# Write the train.names file: one class name per line, the digits 0-9
with open('train.names', 'w') as f:
    f.write('\n'.join(str(i) for i in range(10)) + '\n')

#!cp /content/darknet/scripts/voc_label.py /content/

I modified voc_label.py. The modified file is as follows:

import xml.etree.ElementTree as ET
import os

#sets=[('2012', 'train'), ('2012', 'val'), ('2007', 'train'), ('2007', 'val'), ('2007', 'test')]
sets = [('2007', 'train'), ('2007', 'val')]

#classes = ["aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor"]
classes = ['0', '1', '2', '3', '4', '5', '6', '7', '8', '9']

def convert(size, box):
    # size = (image width, image height); box = (xmin, xmax, ymin, ymax) in pixels.
    # Returns (x_center, y_center, width, height) normalized to [0, 1], the YOLO label format.
    dw = 1. / size[0]
    dh = 1. / size[1]
    x = (box[0] + box[1]) / 2.0 - 1
    y = (box[2] + box[3]) / 2.0 - 1
    w = box[1] - box[0]
    h = box[3] - box[2]
    return (x * dw, y * dh, w * dw, h * dh)

def convert_annotation(year, image_id):
    # image_id is a full image path here; keep only the bare name, e.g. 'train_000000'
    image_id = os.path.basename(image_id).split('.')[0]
    with open('/content/VOCdevkit/VOC%s/Annotations/%s.xml' % (year, image_id)) as in_file, \
            open('/content/VOCdevkit/VOC%s/labels/%s.txt' % (year, image_id), 'w') as out_file:
        root = ET.parse(in_file).getroot()
        size = root.find('size')
        w = int(size.find('width').text)
        h = int(size.find('height').text)
        for obj in root.iter('object'):
            difficult = obj.find('difficult').text
            cls = obj.find('name').text
            if cls not in classes or int(difficult) == 1:
                continue
            cls_id = classes.index(cls)
            xmlbox = obj.find('bndbox')
            b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text),
                 float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
            bb = convert((w, h), b)
            out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')

for year, image_set in sets:
    os.makedirs('/content/VOCdevkit/VOC%s/labels/' % year, exist_ok=True)
    with open('/content/VOCdevkit/VOC%s/ImageSets/Main/%s.txt' % (year, image_set)) as f:
        image_ids = f.read().strip().split()
    # Per-split list of full image paths (identical to ImageSets/Main/<split>.txt here)
    with open('%s_%s.txt' % (year, image_set), 'w') as list_file:
        for image_id in image_ids:
            list_file.write(image_id + '\n')
            convert_annotation(year, image_id)

os.system("cat 2007_train.txt 2007_val.txt > train.txt")
# 2007_test.txt is never created (the test split was removed from sets), so this cat reports "No such file or directory"
os.system("cat 2007_train.txt 2007_val.txt 2007_test.txt > train.all.txt")
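darknet is never told where the label files live. As far as I understand its data-loading code, it derives each label path from the image path by replacing `JPEGImages` with `labels` and the image extension with `.txt`. That is why `voc_label.py` writes into `VOC2007/labels`, next to `JPEGImages`. The sketch below approximates that rule and lists the images whose label file is missing, which is worth checking before a long training run. It is simplified: the real code also handles a few other directory names and extensions. Both helper names are hypothetical:

```python
import os

IMAGE_EXTS = ('.jpg', '.jpeg', '.png', '.bmp')

def label_path_for(image_path):
    """Approximate darknet's image -> label path rule:
    first 'JPEGImages' -> 'labels', and the image extension -> '.txt'."""
    label_path = image_path.replace('JPEGImages', 'labels', 1)
    root, ext = os.path.splitext(label_path)
    return root + '.txt' if ext.lower() in IMAGE_EXTS else label_path

def missing_labels(list_file):
    """Return the image paths listed in list_file whose label file does not exist."""
    with open(list_file) as f:
        images = [line.strip() for line in f if line.strip()]
    return [p for p in images if not os.path.exists(label_path_for(p))]

print(label_path_for('/content/VOCdevkit/VOC2007/JPEGImages/train_000000.png'))
# /content/VOCdevkit/VOC2007/labels/train_000000.txt
# In the notebook (paths from this post):
# print(missing_labels('/content/VOCdevkit/VOC2007/ImageSets/Main/train.txt')[:10])
```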

# Save the modified voc_label.py

#!cp /content/voc_label.py '/content/drive/My Drive/cvComp1Realted/voc_label.py'

# Load it back

!cp '/content/drive/My Drive/cvComp1Realted/voc_label.py' /content/voc_label.py

!python /content/voc_label.py

cat: 2007_test.txt: No such file or directory

# Start training

!./darknet detector train /content/darknet/train.data cfg/yolov3.cfg yolov3.weights -dont_show -map
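The cfg edits above leave the training schedule (`max_batches`, `steps` in `[net]`) at the stock COCO values. According to the AlexeyAB/darknet README (worth re-checking against its current version), the rule of thumb for a custom dataset is `max_batches = classes * 2000`, but not less than 6000, with `steps` at 80% and 90% of `max_batches`. The same README also recommends starting custom training from the convolutional-only weights (`darknet53.conv.74` for YOLOv3) rather than a full detector's weights. Here is a minimal sketch of the schedule arithmetic; `schedule` is a hypothetical helper, and the numbers are the README's heuristic, not values tuned for this dataset:

```python
def schedule(classes, min_batches=6000):
    """README rule of thumb: max_batches = classes * 2000 (at least min_batches), steps at 80% / 90%."""
    max_batches = max(classes * 2000, min_batches)
    # Integer arithmetic avoids float rounding in the percentages
    steps = (max_batches * 8 // 10, max_batches * 9 // 10)
    return max_batches, steps

max_batches, steps = schedule(10)
print('max_batches=%d' % max_batches)
print('steps=%d,%d' % steps)
```

The two printed lines are in the same `key=value` form as the cfg file, so they can replace the corresponding lines in `[net]`.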

#!cp /content/darknet/backup/yolov3_last.weights '/content/drive/My Drive/cvComp1Realted/backup/yolov3_last.weights'

#load back

!cp '/content/drive/My Drive/cvComp1Realted/backup/yolov3_last.weights' /content/darknet/backup/yolov3_last.weights

# Test on one image

!./darknet detector test /content/darknet/train.data /content/darknet/cfg/yolov3.cfg /content/darknet/backup/yolov3_last.weights /content/input/val/mchar_val/000001.png -i 0 -thresh 0.05

imShow('predictions.jpg')

CUDA-version: 10010 (10010), cuDNN: 7.6.5, GPU count: 1

OpenCV version: 3.2.0

0 : compute_capability = 750, cudnn_half = 0, GPU: Tesla T4

net.optimized_memory = 0

mini_batch = 1, batch = 1, time_steps = 1, train = 0

layer filters size/strd(dil) input output

0 conv 32 3 x 3/ 1 416 x 416 x 3 -> 416 x 416 x 32 0.299 BF

1 conv 64 3 x 3/ 2 416 x 416 x 32 -> 208 x 208 x 64 1.595 BF

2 conv 32 1 x 1/ 1 208 x 208 x 64 -> 208 x 208 x 32 0.177 BF

3 conv 64 3 x 3/ 1 208 x 208 x 32 -> 208 x 208 x 64 1.595 BF

4 Shortcut Layer: 1, wt = 0, wn = 0, outputs: 208 x 208 x 64 0.003 BF

5 conv 128 3 x 3/ 2 208 x 208 x 64 -> 104 x 104 x 128 1.595 BF

6 conv 64 1 x 1/ 1 104 x 104 x 128 -> 104 x 104 x 64 0.177 BF

7 conv 128 3 x 3/ 1 104 x 104 x 64 -> 104 x 104 x 128 1.595 BF

8 Shortcut Layer: 5, wt = 0, wn = 0, outputs: 104 x 104 x 128 0.001 BF

9 conv 64 1 x 1/ 1 104 x 104 x 128 -> 104 x 104 x 64 0.177 BF

10 conv 128 3 x 3/ 1 104 x 104 x 64 -> 104 x 104 x 128 1.595 BF

11 Shortcut Layer: 8, wt = 0, wn = 0, outputs: 104 x 104 x 128 0.001 BF

12 conv 256 3 x 3/ 2 104 x 104 x 128 -> 52 x 52 x 256 1.595 BF

13 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

14 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

15 Shortcut Layer: 12, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

16 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

17 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

18 Shortcut Layer: 15, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

19 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

20 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

21 Shortcut Layer: 18, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

22 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

23 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

24 Shortcut Layer: 21, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

25 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

26 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

27 Shortcut Layer: 24, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

28 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

29 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

30 Shortcut Layer: 27, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

31 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

32 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

33 Shortcut Layer: 30, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

34 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

35 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

36 Shortcut Layer: 33, wt = 0, wn = 0, outputs: 52 x 52 x 256 0.001 BF

37 conv 512 3 x 3/ 2 52 x 52 x 256 -> 26 x 26 x 512 1.595 BF

38 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

39 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

40 Shortcut Layer: 37, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

41 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

42 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

43 Shortcut Layer: 40, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

44 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

45 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

46 Shortcut Layer: 43, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

47 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

48 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

49 Shortcut Layer: 46, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

50 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

51 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

52 Shortcut Layer: 49, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

53 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

54 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

55 Shortcut Layer: 52, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

56 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

57 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

58 Shortcut Layer: 55, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

59 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

60 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

61 Shortcut Layer: 58, wt = 0, wn = 0, outputs: 26 x 26 x 512 0.000 BF

62 conv 1024 3 x 3/ 2 26 x 26 x 512 -> 13 x 13 x1024 1.595 BF

63 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

64 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

65 Shortcut Layer: 62, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

66 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

67 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

68 Shortcut Layer: 65, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

69 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

70 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

71 Shortcut Layer: 68, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

72 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

73 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

74 Shortcut Layer: 71, wt = 0, wn = 0, outputs: 13 x 13 x1024 0.000 BF

75 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

76 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

77 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

78 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

79 conv 512 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 512 0.177 BF

80 conv 1024 3 x 3/ 1 13 x 13 x 512 -> 13 x 13 x1024 1.595 BF

81 conv 45 1 x 1/ 1 13 x 13 x1024 -> 13 x 13 x 45 0.016 BF

82 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, obj_norm: 1.00, cls_norm: 1.00, delta_norm: 1.00, scale_x_y: 1.00

83 route 79 -> 13 x 13 x 512

84 conv 256 1 x 1/ 1 13 x 13 x 512 -> 13 x 13 x 256 0.044 BF

85 upsample 2x 13 x 13 x 256 -> 26 x 26 x 256

86 route 85 61 -> 26 x 26 x 768

87 conv 256 1 x 1/ 1 26 x 26 x 768 -> 26 x 26 x 256 0.266 BF

88 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

89 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

90 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

91 conv 256 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 256 0.177 BF

92 conv 512 3 x 3/ 1 26 x 26 x 256 -> 26 x 26 x 512 1.595 BF

93 conv 45 1 x 1/ 1 26 x 26 x 512 -> 26 x 26 x 45 0.031 BF

94 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, obj_norm: 1.00, cls_norm: 1.00, delta_norm: 1.00, scale_x_y: 1.00

95 route 91 -> 26 x 26 x 256

96 conv 128 1 x 1/ 1 26 x 26 x 256 -> 26 x 26 x 128 0.044 BF

97 upsample 2x 26 x 26 x 128 -> 52 x 52 x 128

98 route 97 36 -> 52 x 52 x 384

99 conv 128 1 x 1/ 1 52 x 52 x 384 -> 52 x 52 x 128 0.266 BF

100 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

101 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

102 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

103 conv 128 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 128 0.177 BF

104 conv 256 3 x 3/ 1 52 x 52 x 128 -> 52 x 52 x 256 1.595 BF

105 conv 45 1 x 1/ 1 52 x 52 x 256 -> 52 x 52 x 45 0.062 BF

106 yolo

[yolo] params: iou loss: mse (2), iou_norm: 0.75, obj_norm: 1.00, cls_norm: 1.00, delta_norm: 1.00, scale_x_y: 1.00

Total BFLOPS 65.370

avg_outputs = 518514

Allocate additional workspace_size = 52.43 MB

Loading weights from /content/darknet/backup/yolov3_last.weights...

seen 64, trained: 32038 K-images (500 Kilo-batches_64)

Done! Loaded 107 layers from weights-file

Detection layer: 82 - type = 28

Detection layer: 94 - type = 28

Detection layer: 106 - type = 28

/content/input/val/mchar_val/000001.png: Predicted in 40.634000 milli-seconds.

1: 6%

Unable to init server: Could not connect: Connection refused

(predictions:1358): Gtk-WARNING **: 05:21:04.194: cannot open display:
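For the competition itself, each image's detections still have to be turned into a digit string. Here is a possible post-processing step: keep the detections above a confidence threshold and read their class names from left to right. It assumes each detection is available as a `(class_name, center_x, confidence)` tuple, for example after parsing darknet's output. That tuple format and the parsing step are hypothetical and not shown here:

```python
def detections_to_string(detections, thresh=0.25):
    """Join the class names of confident detections, ordered by x center (left to right)."""
    kept = [d for d in detections if d[2] >= thresh]
    kept.sort(key=lambda d: d[1])
    return ''.join(d[0] for d in kept)

# Made-up detections: (class_name, relative center_x, confidence)
dets = [('9', 0.62, 0.91), ('1', 0.38, 0.88), ('7', 0.50, 0.04)]
print(detections_to_string(dets))
```

The default `thresh=0.25` mirrors darknet's default detection threshold. A low threshold like the 0.05 used above lets spurious digits into the string.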

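Conclusion

When I tried it, the final prediction produced no boxes at all with the default confidence threshold (0.25), so I lowered the threshold to 0.05 with -thresh 0.05 when predicting. That is of course not usable in practice (the error is far too large). It only shows that the pipeline works end to end. Possible reasons for the poor accuracy: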

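- Insufficient training: in my tests, Colab disconnected after training for a while through shell commands. This happened all three times I tried, so the saved weights are undertrained.

  Also, training seemed to save the weights only when it finished, and I couldn't find a setting to save automatically every N epochs; this needs more investigation. (For reference, the AlexeyAB/darknet README states that `yolov3_last.weights` is written to the backup folder every 100 iterations and `yolov3_xxxx.weights` every 1000 iterations, so a missing checkpoint may point to a problem with the backup path.)

- Input size: in theory any input size should work, but according to the discussion in this blog post, YOLO works best when the input images match the size in its config file (cfg/yolov3.cfg). The images in this dataset all have different sizes, none of which match the config. I suspect this is also why, during training, only the small-scale detection boxes found objects and the larger-scale ones did not (each training step prints three values, one per yolo output layer, i.e. results at three different scales). Resizing for object detection also changes the box coordinates in the labels, which is more involved, so I didn't do it here. It isn't hard, though: scale the image and its label boxes by the same ratio.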
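The "scale the image and the boxes by the same ratio" step can be made concrete. Resizing an image from `(old_w, old_h)` to `(new_w, new_h)` multiplies pixel x coordinates by `sx = new_w / old_w` and y coordinates by `sy = new_h / old_h`. The YOLO label files written by `voc_label.py` are already divided by the image width and height, so a plain (stretching) resize leaves them unchanged. Only pixel coordinates, such as the boxes in the VOC XML files, need rescaling, and an aspect-preserving letterbox resize would also need the padding offset. Here is a minimal sketch; the function names and the original image size are hypothetical:

```python
def scale_box(box, old_size, new_size):
    """Scale a pixel box (xmin, ymin, xmax, ymax) for an image resized from old_size
    to new_size, both given as (width, height)."""
    sx = new_size[0] / old_size[0]
    sy = new_size[1] / old_size[1]
    xmin, ymin, xmax, ymax = box
    return (xmin * sx, ymin * sy, xmax * sx, ymax * sy)

def normalize(box, size):
    """Express a pixel box as fractions of the image width and height."""
    w, h = size
    return (box[0] / w, box[1] / h, box[2] / w, box[3] / h)

old_size, new_size = (741, 350), (416, 416)  # hypothetical original size -> the cfg's 416x416
box = (246, 77, 327, 296)                   # first digit of 000000.png as (xmin, ymin, xmax, ymax)
resized = scale_box(box, old_size, new_size)
print(resized)
# The normalized coordinates match before and after the resize (up to float rounding)
print(normalize(box, old_size))
print(normalize(resized, new_size))
```

The image itself would be resized with something like `cv2.resize(image, new_size)`, where OpenCV takes the target size as `(width, height)`.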

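One more thing to note. According to other bloggers' posts (for example https://blog.csdn.net/qq_44166805/article/details/105876028), the txt files in the dataset's ImageSets/Main folder only need the file names without extensions. The model's .data configuration file, shown below, needs the paths of two txt files. Those two files are generated by voc_label.py together with each image's label txt, and they contain the full path of every image. Because this was my first time and I wasn't familiar with the process, the txt files I generated in the Main folder already contain full paths, so I modified voc_label.py to output a file identical to my txt. The two approaches make no difference in use; both work.

In a .data file, comments must sit on their own lines starting with #, because darknet does not strip a trailing comment from a value.

# classes: the total number of classes in the training set
classes= 2
# train: path to the training-set list generated earlier
train = scripts/train.txt
# valid: path to the validation-set list generated earlier
valid = scripts/test.txt
# names: path to the ***.names file
names = data/safe.names
backup = backup/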

Whatever the accuracy, the pipeline at least runs end to end.